I think it's because the reward structure of being a bio-ethicist rewards saying level-headed-sounding, cautious-sounding conventional wisdom?

Though I'm not sure why that is.

But this is starting from a place of "The world could be vastly better. How do we get there?"

And there are not enough people who are up for that, to reach a consensus?

To do it right, bioethics would have to, at least in many areas, _assume_ utilitarianism.

And this works. It isn't necessarily philosophically grounded, but it works.

But having correct beliefs about what beliefs are turns out to not be the same thing as having solid irrefutable arguments for your belief about beliefs.

(To be clear, I DON'T think that I definitely understood the points they made, and I may be responding to a straw-man.)

https://t.co/TfCQgRqUtv

Culturally, I am clearly part of the "Yudkowsky cluster". And as near as I can tell, Bayes is actually the true foundation of epistemology.

— Eli Tyre (@EpistemicHope) December 13, 2020

But my personal PRACTICE is much closer to the sort of thing the critical rationalists talk about (assuming I'm understanding them).

What crit rats can’t get past re bayesianism is what then justifies the specific probabilities? Isn’t it an infinite regress?

— Cam Peters (@campeters4) December 14, 2020

I have an 80% confidence in X. Does one then have a 100% confidence in the 80% estimate?

For many things, we can't prove that it works, but it does work, and it is more interesting to move on to more advanced problems by assuming some things we can't prove.

Getting back to the original question, I think my answer was incomplete, and part of what is happening here is some self-selection regarding who becomes a bio-ethicist that I don't understand in detail.

Basically, I imagine that they tend towards conventional-mindedness.

More from Eli Tyre

I started by simply stating that I thought that the arguments that I had heard so far don't hold up, and seeing if anyone was interested in going into it in depth with

CritRats!

— Eli Tyre (@EpistemicHope) December 26, 2020

I think AI risk is a real existential concern, and I claim that the CritRat counterarguments that I've heard so far (keywords: universality, person, moral knowledge, education, etc.) don't hold up.

Anyone want to hash this out with me? https://t.co/Sdm4SSfQZv

So far, a few people have engaged pretty extensively with me, for instance, scheduling video calls to talk about some of the stuff, or long private chats.

(Links to some of those that are public at the bottom of the thread.)

But in addition to that, there has been a much more sprawling conversation happening on twitter, involving a much larger number of people.

Having talked to a number of people, I then offered a paraphrase of the basic counter that I was hearing from people of the Crit Rat persuasion.

ELI'S PARAPHRASE OF THE CRIT RAT STORY ABOUT AGI AND AI RISK

— Eli Tyre (@EpistemicHope) January 5, 2021

There are two things that you might call "AI".

The first is non-general AI, which is a program that follows some pre-set algorithm to solve a pre-set problem. This includes modern ML.

In general, I am super up for short (1 to 10 hour) adversarial collaborations.

— Eli Tyre (@EpistemicHope) December 23, 2020

If you think I'm wrong about something, and want to dig into the topic with me to find out what's up / prove me wrong, DM me.

For instance, while I heartily agree with lots of what is said in this video, I don't think that the conclusion about how to prevent (the bad kind of) human extinction, with regard to AGI, follows.

There are a number of reasons to think that AGI will be more dangerous than most people are, despite both people and AGIs being qualitatively the same sort of thing (explanatory knowledge-creating entities).

And, I maintain, that because of practical/quantitative (not fundamental/qualitative) differences, the development of AGI / TAI is very likely to destroy the world, by default.

(I'm not clear on exactly how much disagreement there is. In the video above, Deutsch says "Building an AGI with perverse emotions that lead it to immoral actions would be a crime.")

More from Society

Controversy has been caused by the digging of a narrow channel by a resort on a sandbank near K. Hinmafushi.

Hinmafushi Council President Shan Ibrahim stated to Sun that the resort, which dug the trench creating a river on the sandbank, did not have ownership over the sandbank.

Officials from the island of Hinmafushi had traveled to the sandbank to stop the process of digging the trench when they became aware of it, said Shan.

Officials were now redepositing the sand removed from the sandbank.

— Ahmed Aznil (@AhmedAznil) January 21, 2021

https://t.co/eXLNam2gv4

Good. Fuck Rush Limbaugh, and let the celebration about his death be a reminder to the rest of the racists and bigots that we’ll happily dance on your graves too.

— Chris Kluwe, Irredeemable Pudgy Nobody (@ChrisWarcraft) February 17, 2021

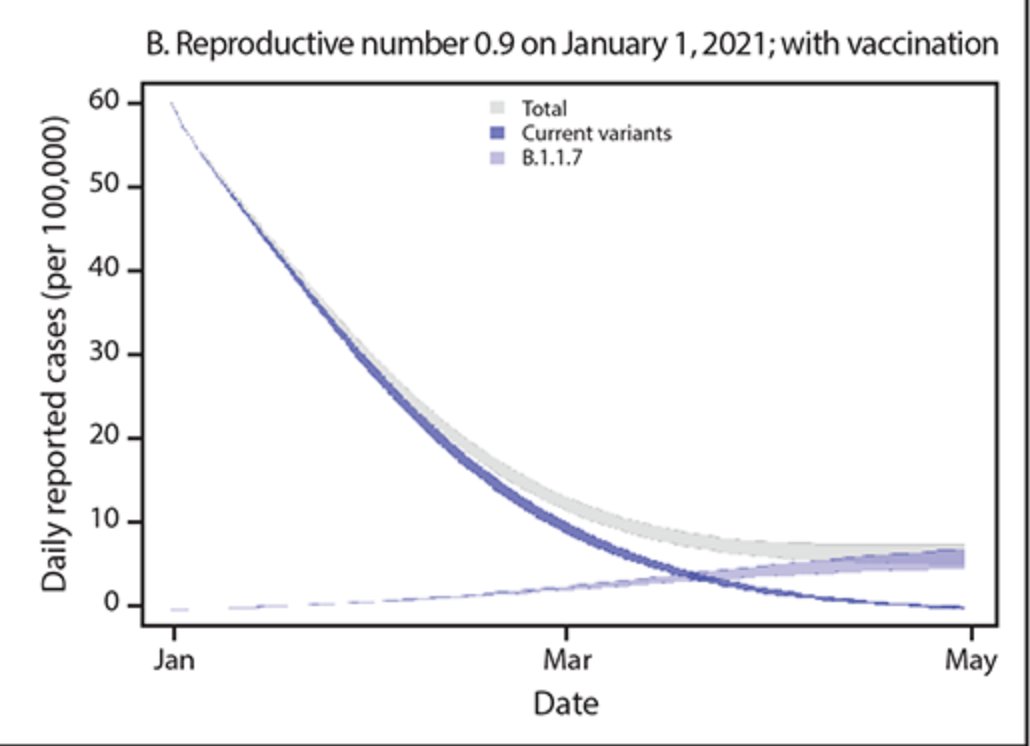

While this will likely be the case, this should not be an automatic cause for concern. Cases could still remain contained.

Here's how: 🧵

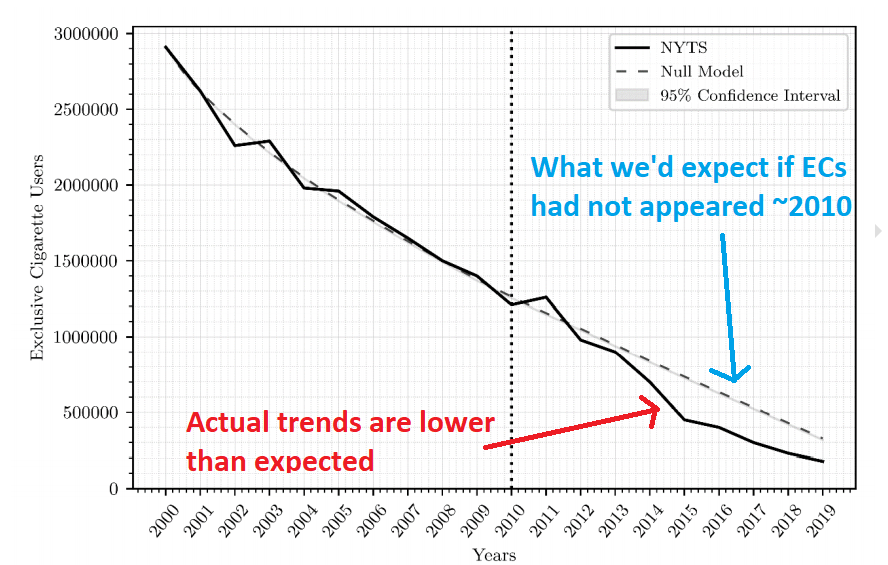

One of @CDCgov's own models has tracked the true decline in cases quite accurately thus far.

Their projection shows that the B.1.1.7 variant will become the dominant variant in March. But interestingly... there's no fourth wave. Cases simply level out:

https://t.co/tDce0MwO61

Just because a variant becomes the dominant strain does not automatically mean we will see a repeat of Fall 2020.

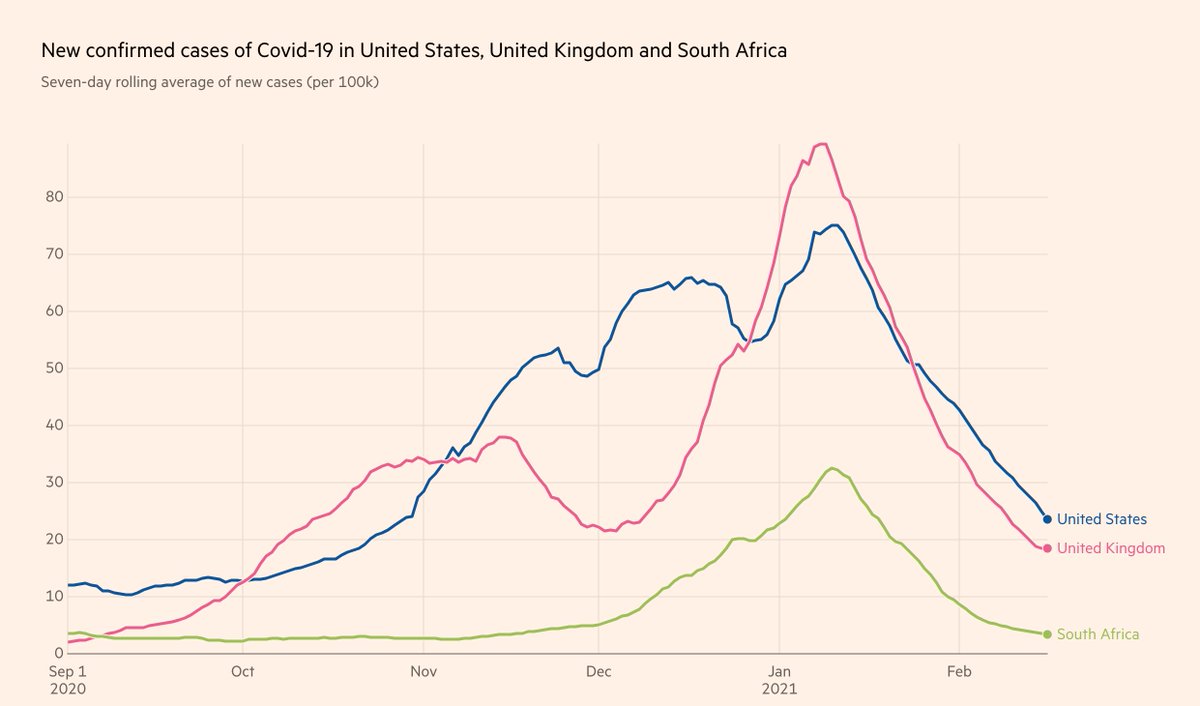

Let's look at UK and South Africa, where cases have been falling for the past month, in unison with the US (albeit with tougher restrictions):

Furthermore, the claim that the "variant is doubling every 10 days" is false. It's the *proportion of the variant* that is doubling every 10 days.

If overall prevalence drops during the studied time period, the true doubling time of the variant is actually much longer than 10 days.

Simple example:

Day 0: 10 variant / 100 cases -> 10% variant

Day 10: 15 variant / 75 cases -> 20% variant

Day 20: 20 variant / 50 cases -> 40% variant

1) Proportion of variant doubles every 10 days

2) Doubling time of variant is actually 20 days

3) Total cases still drop by 50%
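
The arithmetic in the simple example can be checked with a short Python sketch. The `observations` list and the `doubling_time` helper are names introduced here for illustration, and the helper assumes exponential growth between the first and last data points, which is a simplification rather than anything stated in the thread.

```python
import math

# (day, variant cases, total cases), copied from the simple example above
observations = [(0, 10, 100), (10, 15, 75), (20, 20, 50)]


def doubling_time(t0, v0, t1, v1):
    """Days needed to double, assuming exponential growth between two points."""
    return (t1 - t0) * math.log(2) / math.log(v1 / v0)


# Variant share of all cases on each day
shares = [variant / total for _, variant, total in observations]

first_day, first_variant, first_total = observations[0]
last_day, last_variant, last_total = observations[-1]

# Doubling time of the variant's *proportion* of cases
share_doubling = doubling_time(first_day, shares[0], last_day, shares[-1])

# Doubling time of the variant's *absolute* case count
count_doubling = doubling_time(first_day, first_variant, last_day, last_variant)

# Relative change in total cases over the whole period
total_change = last_total / first_total - 1

print(f"share doubling time: {share_doubling:.1f} days")
print(f"count doubling time: {count_doubling:.1f} days")
print(f"change in total cases: {total_change:.0%}")
```

Run on these three data points, the sketch reproduces the three figures listed: the proportion doubles every 10 days, the variant count doubles in 20 days, and total cases fall by 50%.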