I was reading something that suggested that trauma "tries" to spread itself, i.e., that the reason why intergenerational trauma is a thing is that the traumatized part in a parent will take action to recreate that trauma in the child.

(More like, "here's a story for how this could work.")

Why on earth would trauma be agenty, in that way? It sounds like too much to swallow.

If some traumas try to replicate themselves in other minds, but most don't, pretty soon the world will be awash in the replicator type.

It's unclear how high the fidelity of transmission is.

Thinking through this has given me a new appreciation of what @DavidDeutschOxf calls "anti-rational memes". I think he might be on to something that they are more-or-less at the core of all our problems on earth.

More from Eli Tyre

I think AI risk is a real existential concern, and I claim that the CritRat counterarguments that I've heard so far (keywords: universality, person, moral knowledge, education, etc.) don't hold up.

Anyone want to hash this out with

In general, I am super up for short (1 to 10 hour) adversarial collaborations.

— Eli Tyre (@EpistemicHope) December 23, 2020

If you think I'm wrong about something, and want to dig into the topic with me to find out what's up / prove me wrong, DM me.

For instance, while I heartily agree with lots of what is said in this video, I don't think that the conclusion about how to prevent (the bad kind of) human extinction, with regard to AGI, follows.

There are a number of reasons to think that AGI will be more dangerous than most people are, despite both people and AGIs being qualitatively the same sort of thing (explanatory knowledge-creating entities).

And I maintain that, because of practical/quantitative (not fundamental/qualitative) differences, the development of AGI / TAI is very likely to destroy the world by default.

(I'm not clear on exactly how much disagreement there is. In the video above, Deutsch says "Building an AGI with perverse emotions that lead it to immoral actions would be a crime."

I started by simply stating that I thought that the arguments that I had heard so far don't hold up, and seeing if anyone was interested in going into it in depth with

CritRats!

— Eli Tyre (@EpistemicHope) December 26, 2020

I think AI risk is a real existential concern, and I claim that the CritRat counterarguments that I've heard so far (keywords: universality, person, moral knowledge, education, etc.) don't hold up.

Anyone want to hash this out with me? https://t.co/Sdm4SSfQZv

So far, a few people have engaged pretty extensively with me, for instance, scheduling video calls to talk about some of the stuff, or long private chats.

(Links to some of those that are public at the bottom of the thread.)

But in addition to that, there has been a much more sprawling conversation happening on twitter, involving a much larger number of people.

Having talked to a number of people, I then offered a paraphrase of the basic counter that I was hearing from people of the Crit Rat persuasion.

ELI'S PARAPHRASE OF THE CRIT RAT STORY ABOUT AGI AND AI RISK

— Eli Tyre (@EpistemicHope) January 5, 2021

There are two things that you might call "AI".

The first is non-general AI, which is a program that follows some pre-set algorithm to solve a pre-set problem. This includes modern ML.

More from Culture

As someone who’s read the book, this review strikes me as tremendously unfair. It mostly faults Adler for not writing the book the reviewer wishes he had! https://t.co/pqpt5Ziivj

— Teresa M. Bejan (@tmbejan) January 12, 2021

The meat of the criticism is that the history Adler gives is insufficiently critical. Adler describes a few figures who had a great influence on how the modern US university was formed. It's certainly critical: it focuses on the social Darwinism of these figures. 2/x

Other insinuations and suggestions in the review seem wildly off the mark, distorted, or inappropriate: for example, that the book is clickbaity (it is scholarly) or conservative (hardly) or connected to the events at the Capitol (give me a break). 3/x

The core question: in what sense is classics inherently racist? Classics is old. On Adler's account, it begins in ancient Rome and is revived in the Renaissance. Slavery (Christiansen's primary concern) is also very old. Let's say classics is an education for slaveowners. 4/x

It's worth remembering that literacy itself is elite throughout most of this history. Literacy is, then, also the education of slaveowners. We can honor oral and musical traditions without denying that literacy is, generally, good. 5/x

I'll begin with the ancient history ... and it goes way back. Because modern humans - and before that, the ancestors of humans - almost certainly originated in Ethiopia. 🇪🇹 (sub-thread):

The famous “Lucy”, an early ancestor of modern humans (Australopithecus) that lived 3.2 million years ago, and was discovered in 1974 in Ethiopia, displayed in the national museum in Addis Ababa 🇪🇹 pic.twitter.com/N3oWqk1SW2

— Patrick Chovanec (@prchovanec) November 9, 2018

The first likely historical reference to Ethiopia is ancient Egyptian records of trade expeditions to the "Land of Punt" in search of gold, ebony, ivory, incense, and wild animals, starting in c 2500 BC 🇪🇹

Ethiopians themselves believe that the Queen of Sheba, who visited Israel's King Solomon in the Bible (c 950 BC), came from Ethiopia (not Yemen, as others believe). Here she is meeting Solomon in a stained-glass window in Addis Ababa's Holy Trinity Church. 🇪🇹

References to the Queen of Sheba are everywhere in Ethiopia. The national airline's frequent flier miles are even called "ShebaMiles". 🇪🇹

You May Also Like

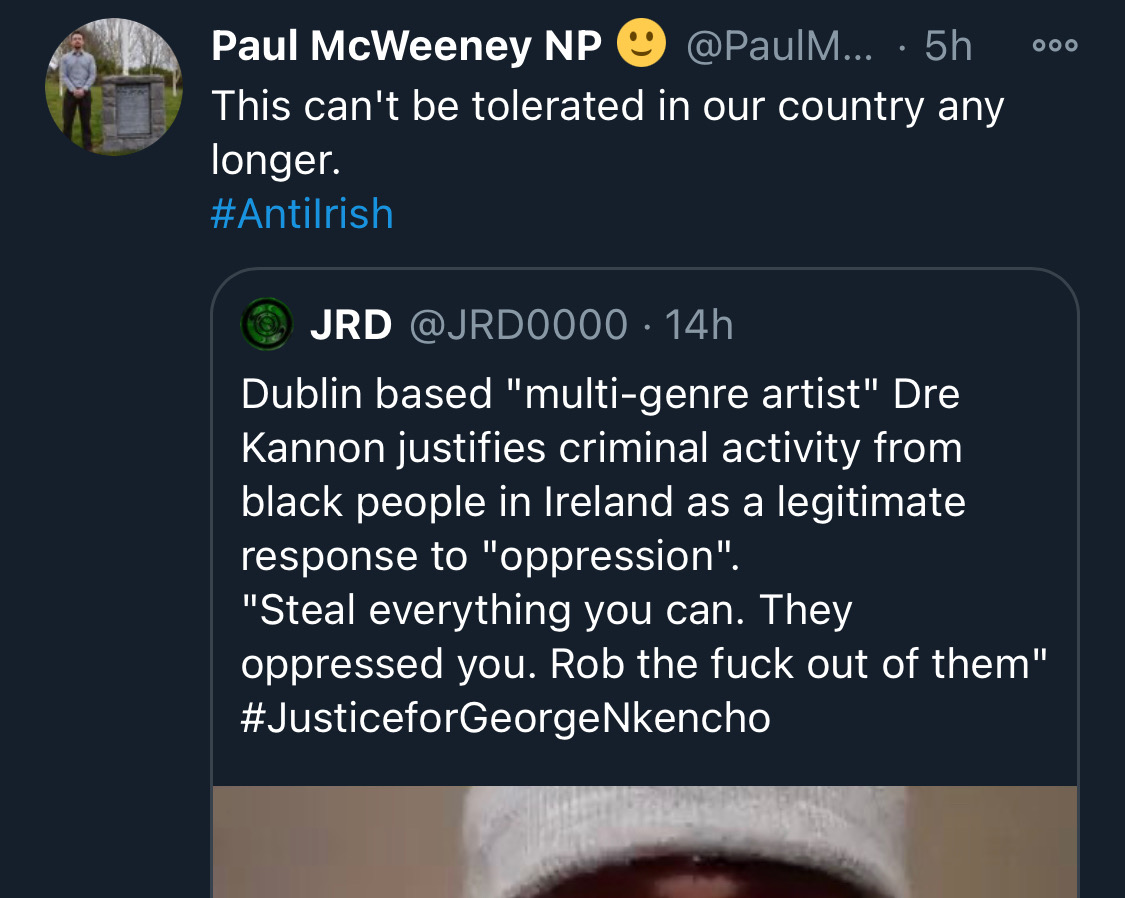

There is co-ordination across the far right in Ireland now to stir both left and right in the hopes of creating a race war. Think critically! Fascists see the tragic killing of #georgenkencho, the grief of his community and pending investigation as a flashpoint for action.

Across Telegram, Twitter and Facebook disinformation is being peddled on the back of these tragic events. From false photographs to the tactics of white supremacy, the far right is clumsily trying to drive hate against minority groups and figureheads.

Be aware, the images the #farright are sharing in the hopes of starting a race war, are not of the SPAR employee that was punched. They’re older photos of an Everton fan. Be aware of the information you’re sharing and that it may be false. Always #factcheck #GeorgeNkencho pic.twitter.com/4c9w4CMk5h

— antifa.drone (@antifa_drone) December 31, 2020

Declan Ganley’s Burkean group and the incel wing of National Party (Gearóid Murphy, Mick O’Keeffe & Co.) as well as all the usuals are concerted in their efforts to demonstrate their white supremacist cred. The quiet parts are today being said out loud.

There is a concerted effort in far-right Telegram groups to try and incite violence on the street by targeting people for racist online abuse following the killing of George Nkencho

— Mark Malone (@soundmigration) January 1, 2021

This follows on and is part of a misinformation campaign to polarise communities at this time.

The best thing you can do is challenge disinformation and report posts where engagement isn’t appropriate. Many of these are blatantly racist posts designed to drive recruitment to NP and other Nationalist groups. By all means protest but stay safe.

==========================

Module 1

Python makes it very easy to analyze and visualize time series data when you’re a beginner. It's easier when you don't have to install Python on your PC (that's why it's a nano course: you'll learn Python on the go). You will not be required to install Python on your PC; instead, you will be using an amazing Python editor, Google Colab. Visit https://t.co/EZt0agsdlV

This course is for anyone out there who is confused, frustrated, and just wants this python/finance thing to work!

In Module 1 of this Nano course, we will learn about:

# Using Google Colab

# Importing libraries

# Making a Random Time Series of Black Field Research Stock (fictional)

# Using Google Colab

Intro link is here on YT: https://t.co/MqMSDBaQri

Create a new Notebook at https://t.co/EZt0agsdlV and name it AnythingOfYourChoice.ipynb

You got your notebook ready and now the game is on!

You can add code in these cells and add as many cells as you want.

# Importing Libraries

Imports are pretty standard, with a few exceptions.

For the most part, you can import your libraries by running the import statements in a cell.

Type this in the first cell you see. You need not worry about what each of these does; we will understand it later.
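The import cell itself is not reproduced in this text. As a stand-in, here is a minimal sketch of what such a first cell might look like, assuming the course relies on NumPy and pandas (both come preinstalled on Google Colab). The library choice, the seed, and the names `dates`, `rng`, and `prices` are assumptions for illustration, not the course's own code.

```python
# Assumed imports: the course's own import cell is not shown in this text.
import numpy as np   # numerical arrays and random numbers
import pandas as pd  # tables and date-indexed series

# Quick sanity check that both libraries loaded: a tiny date-indexed series.
dates = pd.date_range("2021-01-01", periods=5, freq="D")  # five daily dates
rng = np.random.default_rng(seed=0)                       # seeded so reruns match
# Start near 100 and add up small random daily moves (hypothetical values).
prices = pd.Series(100 + rng.normal(0, 1, size=5).cumsum(), index=dates)
print(prices)
```

If the cell runs without an error and prints five dated values, the imports worked and the notebook is ready for the next step.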