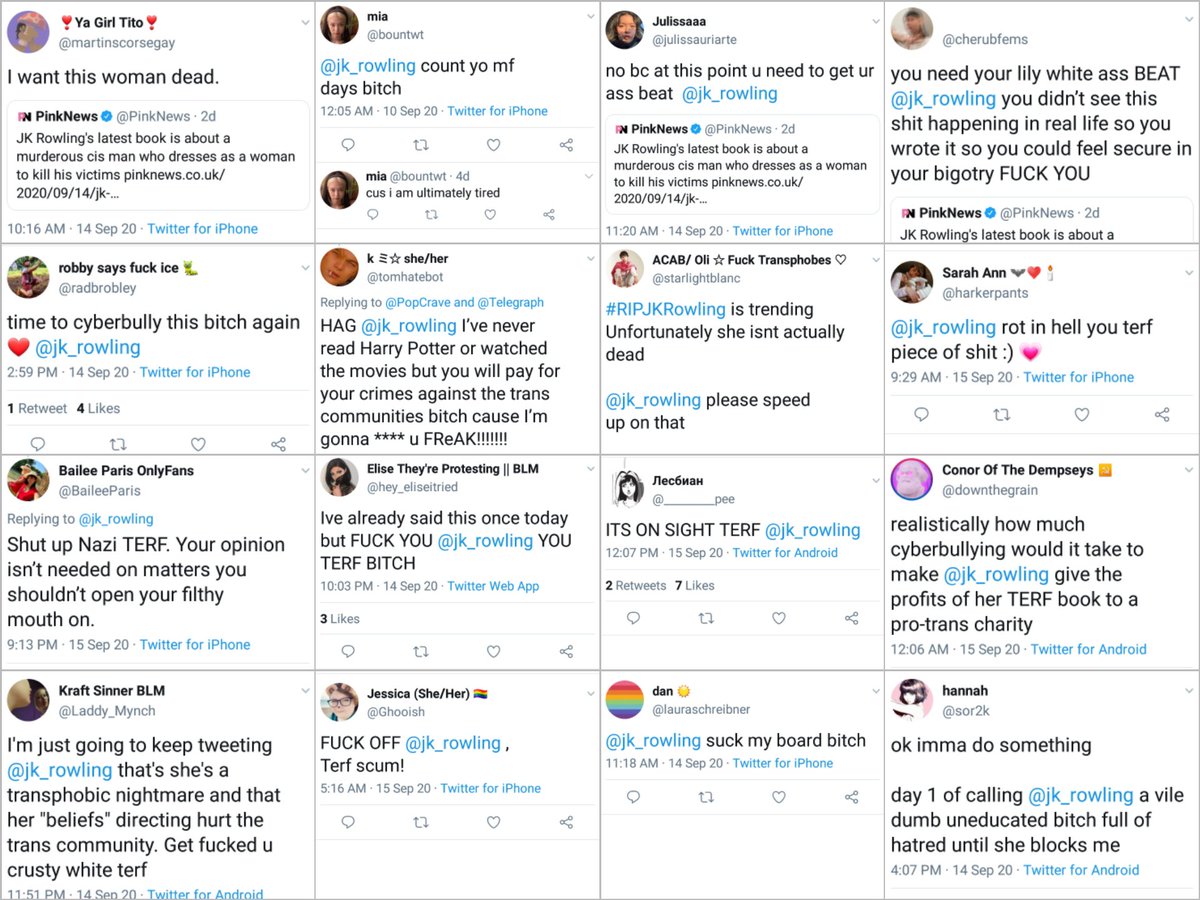

LRT: One of the problems with Twitter moderation - and I'm not suggesting this is an innocent cause that is accidentally enabling abuse, but rather that it's a feature, from their point of view - is that the reporting categories available to us do not match up to the rules.

Because the rule's existence creates the impression that we have protections we don't.

Meanwhile, people who aren't acting in good faith can, will, and DO game the automated aspects of the system to suppress and harm their targets.

A death threat is not supposed to be allowed on here even if it's a joke. That's Twitter's premise, not mine.

A guy going "Haha get raped." is part of the plan. It's normal.

His target replying "FUCK OFF" is not. It's radical.

Things that strike the moderator as "That's just how it is on this bitch of an earth." get a pass.

And then the ultra-modern ones like "Banned from Minecraft in real life."

https://t.co/5xdHZmqLmM

Helicopter rides.

— azteclady (@HerHandsMyHands) October 3, 2020

More from 12 Foot Tall Giant Alexandra Erin

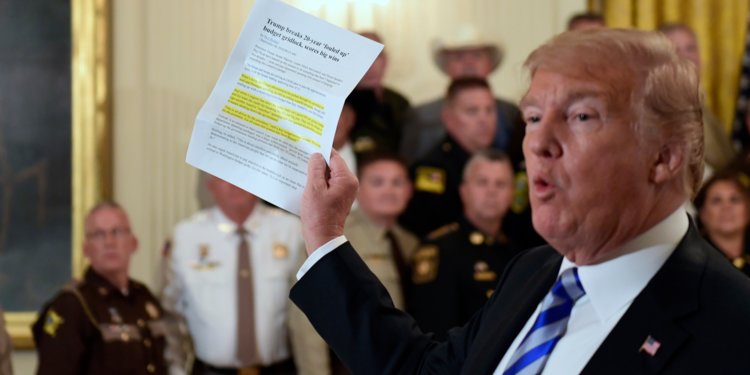

On the subject of the apparent Trump family strategy of keeping Trump in a protective bubble for the next 12 days... Hicks is one of his deftest handlers.

News: One of President Trump's closest confidants and top aides, Hope Hicks, is discussing resigning before he leaves office, according to two people. She has told people if she does, she would likely leave within the next 48 hours. It's not clear she has made a decision.

— Kaitlan Collins (@kaitlancollins) January 8, 2021

More from Social media

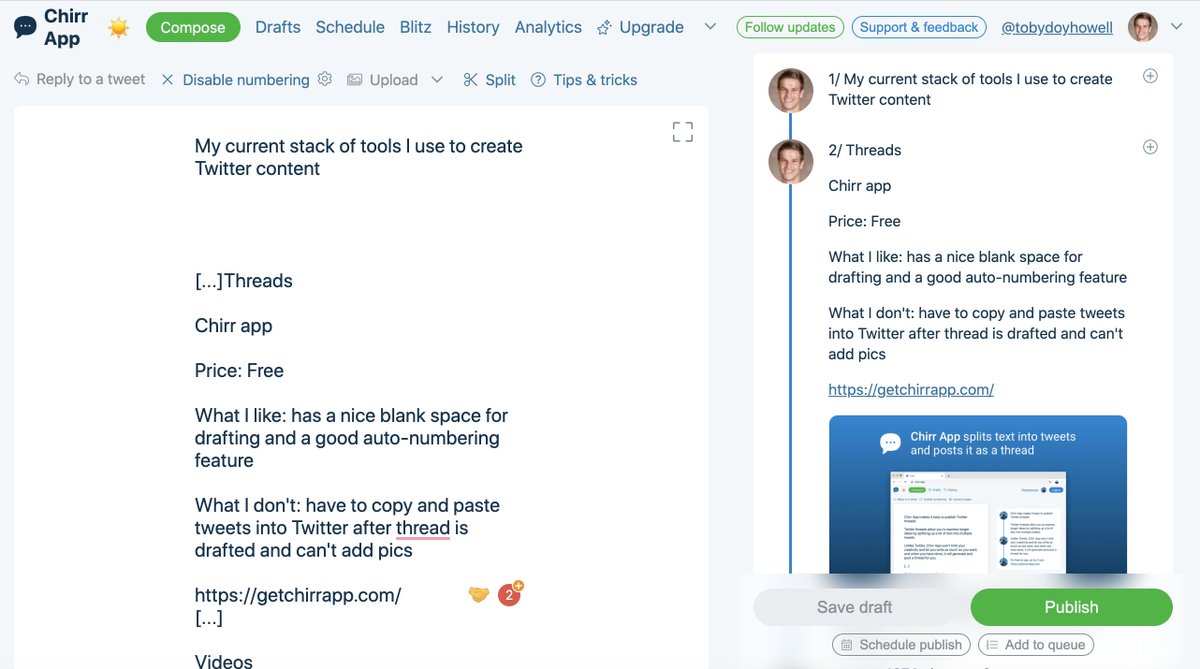

1/ Creating content on Twitter can be difficult. A thread on the stack of tools I use to make my life easier

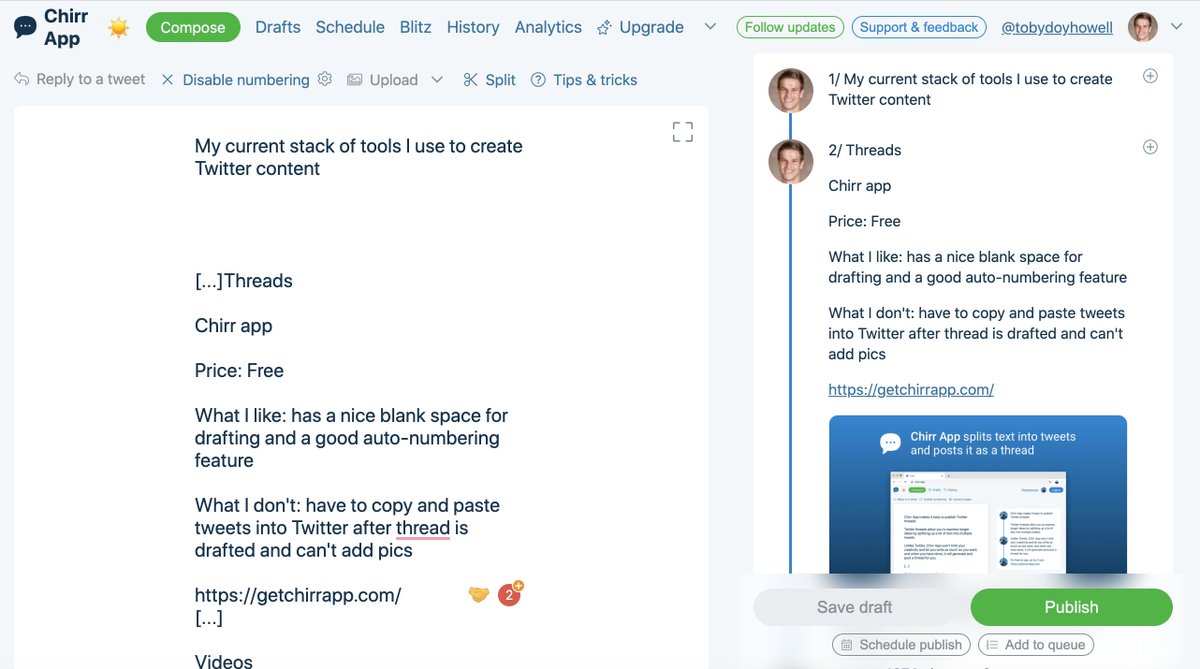

2/ Thread writing

Chirr app

Price: Free

What I like: has a nice blank space for drafting and a good auto-numbering feature

What I don't: have to copy and paste tweets into Twitter after thread is drafted and can't add pics

https://t.co/YlljnF5eNd

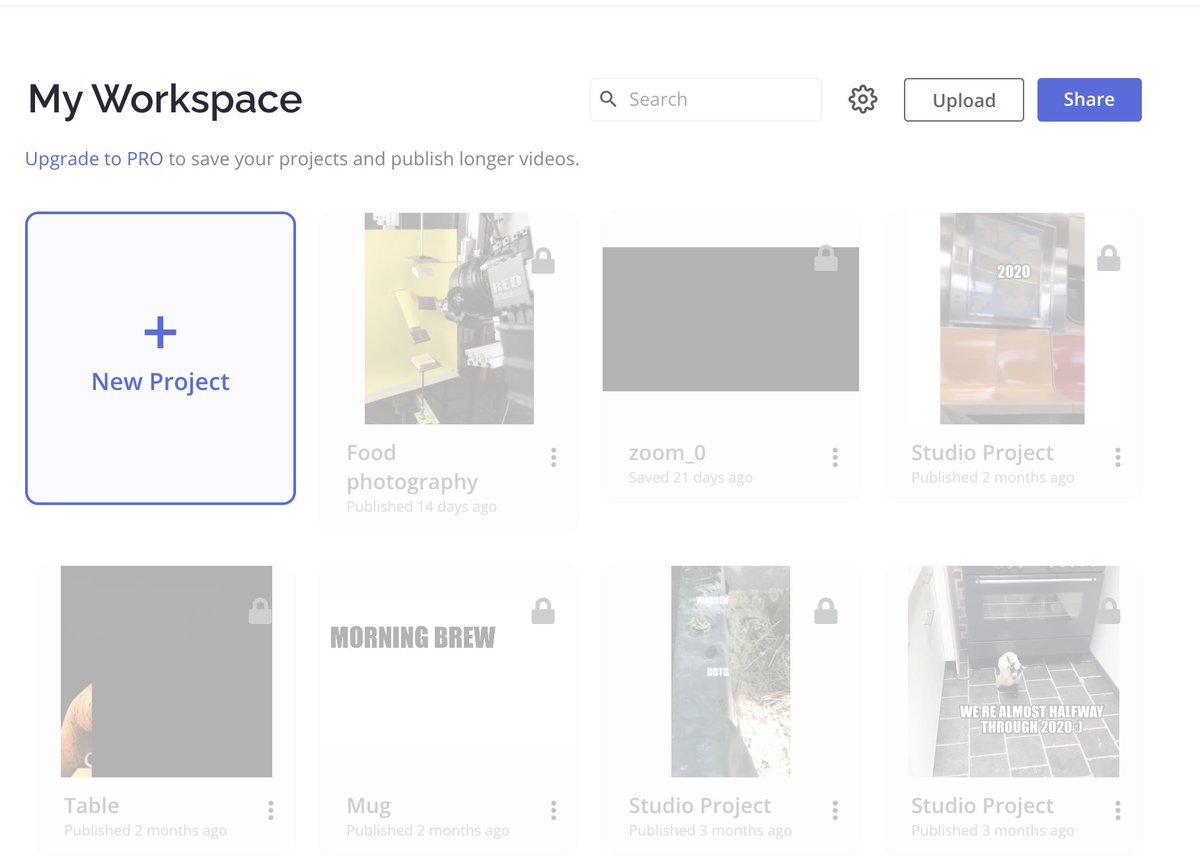

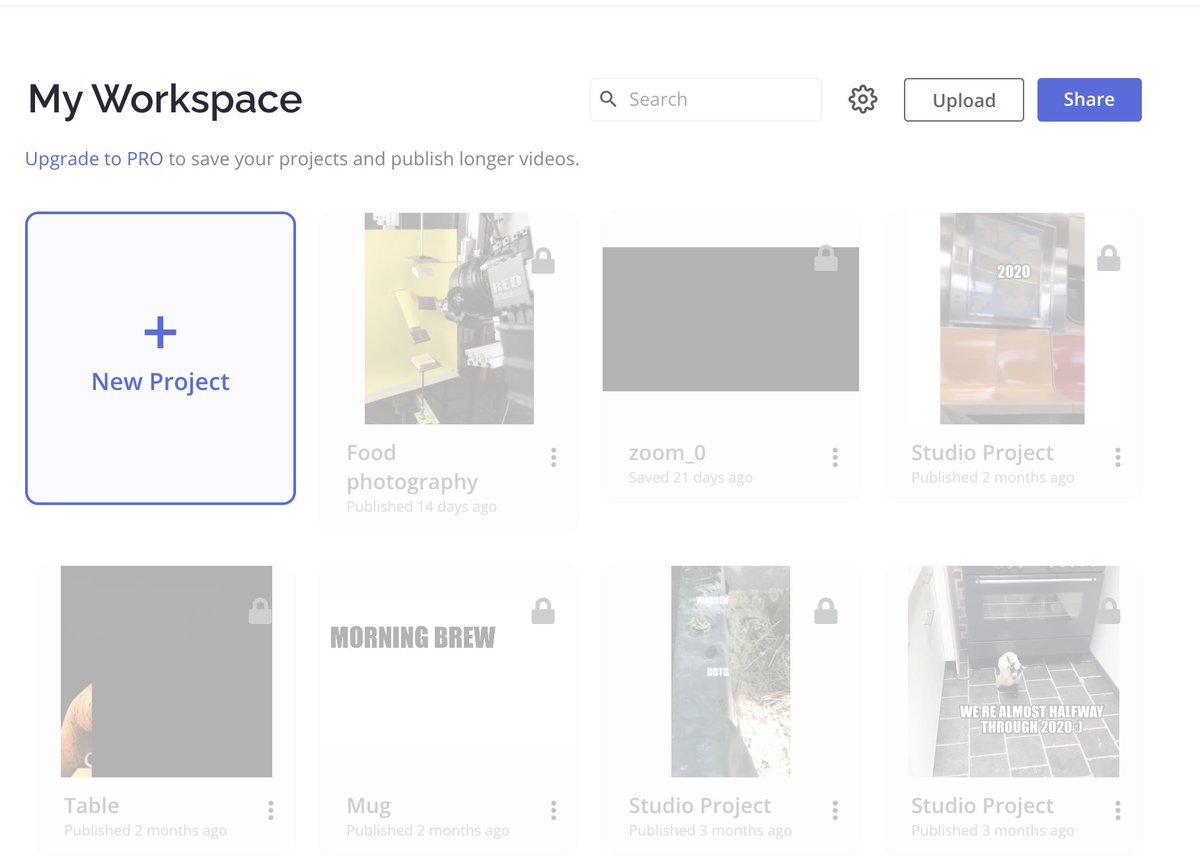

3/ Video editing

Kapwing

Price: Free

What I like: great at pulling vids from youtube/twitter and overlaying captions + different audio on them

What I don't: Can't edit content older than 2 days on the free plan

https://t.co/bREsREkCSJ

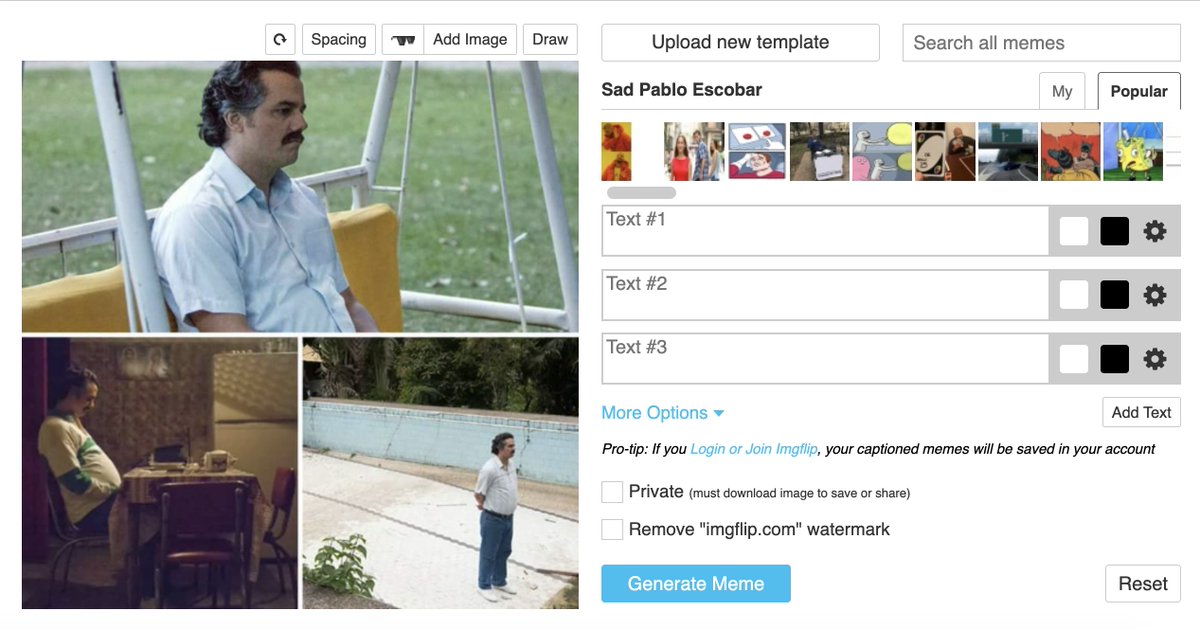

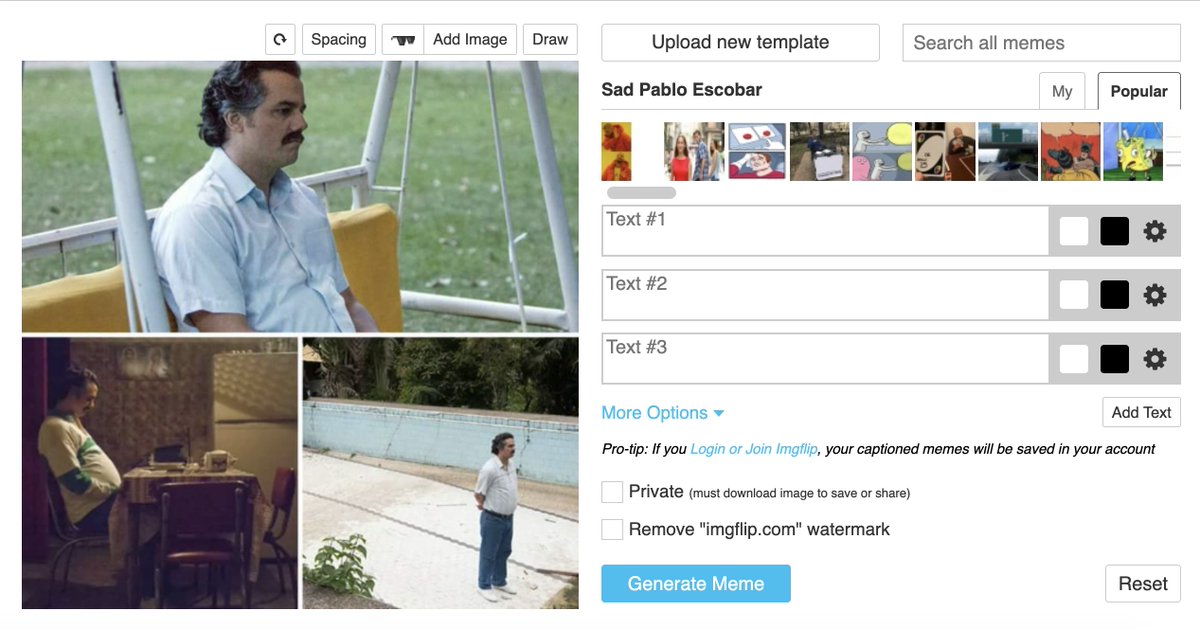

4/ Meme making

Imgflip

Price: Free

What I like: easiest way to caption existing meme formats, quickly

What I don't: limited fonts

https://t.co/sUj13VlPiO

5/ Inspiration

iPhone notes app

Price: Free

What I like: no frills & easily accessible. every thread i write starts as an idea in notes

What I don't: difficult to organize

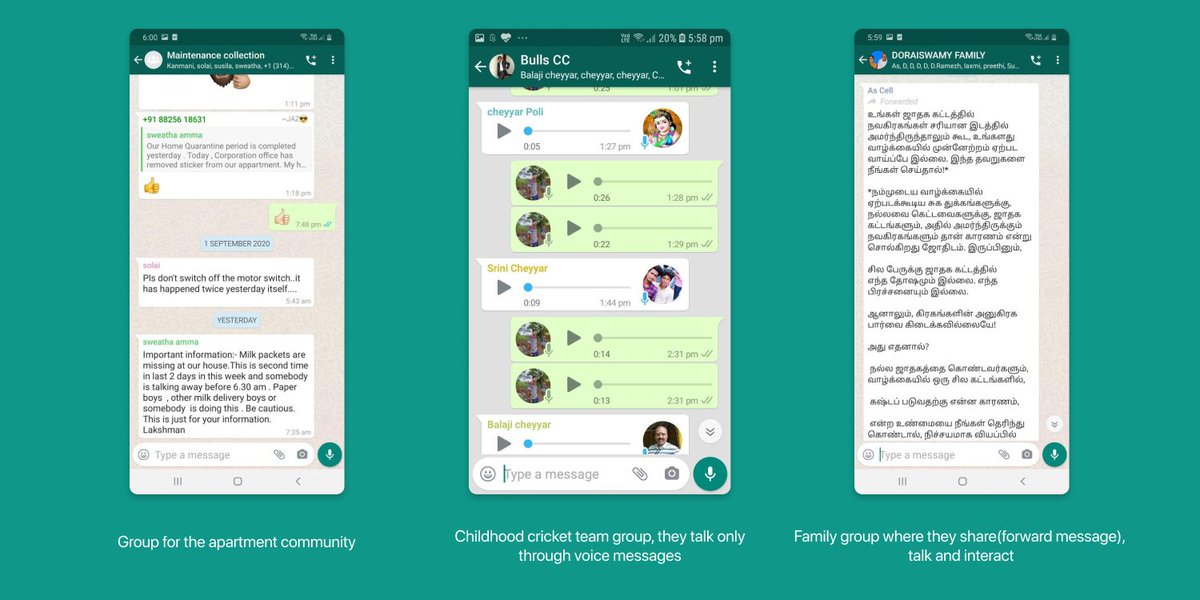

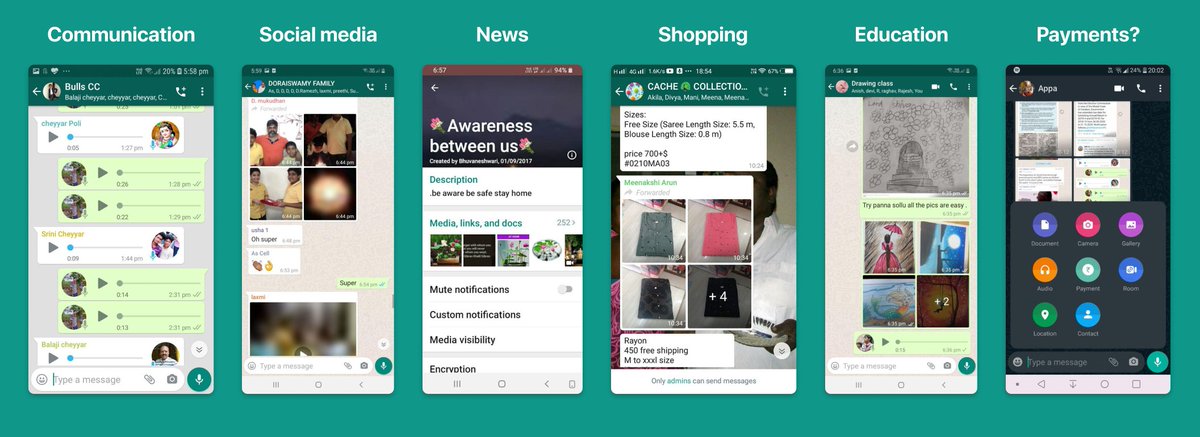

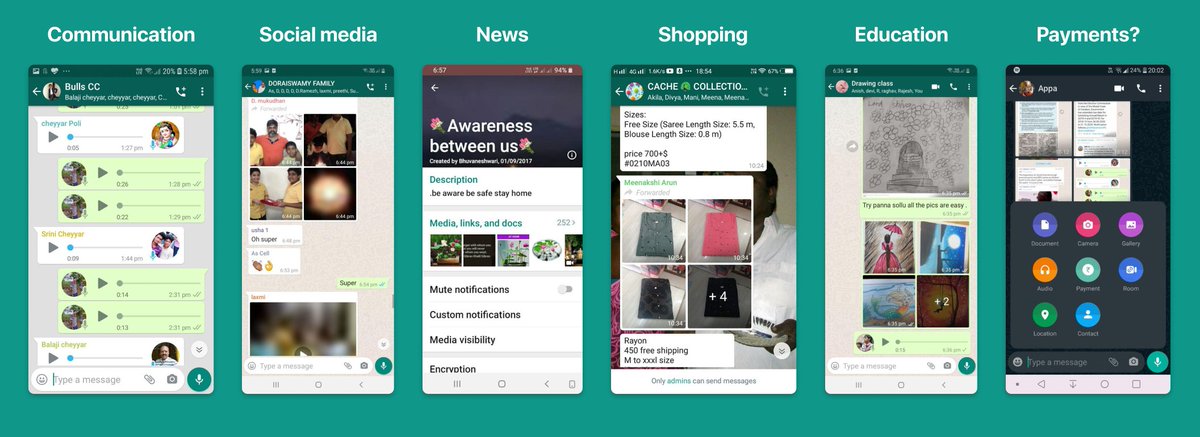

Is WhatsApp already the Super App of India?

Thread 🧵

Below are a few insights I gathered while researching how Gen-X uses WhatsApp, as part of the @10kdesigners Cohort!

Okay, let's go!

1/x

Gen-X? Who are they?

Gen-X (short for Generation X) are people with birth years around 1960–1980. That’s basically our (millennials’) parents!

2/x

Check out this detailed case study by @zainab_delawala

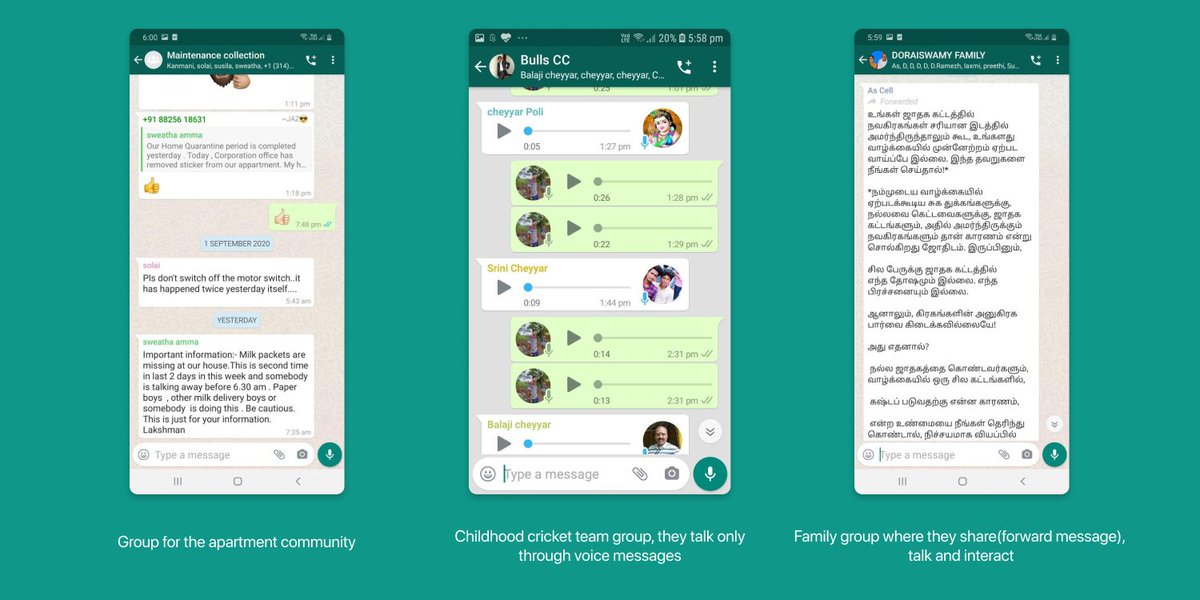

📮 Communication/Community

This is the primary feature of WhatsApp.

This feature is the entry point for most of the Gen-X, they come to WhatsApp to communicate and engage with small

- WhatsApp groups are one of the most used features among Gen-X. Most of them message more in groups than in private chats.

- The forwarded messages they receive are mostly written in vernacular languages. They are all well scripted.

4/x

Can a movie (96') change how people use an app (WhatsApp)?

YES. It can.

Let's see how 👀 pic.twitter.com/BV0scQ2KEc

— Rajesh Raghavan (@rajeshraghavan_) October 1, 2020
