I know some people who seem (to me) more concerned with receiving VALIDATION for their mental health issues than solving them.

They seem to care most about other people BELIEVING their problems are real.

I'm curious about this.

Or it's important to them that others believe that they're actually depressed, not "just sad."

If no one cuts you slack because of your mental health stuff, maybe you're in a really bad place, so you need people to believe you.

https://t.co/07v275i2gt

While she said some things I agree with, I rolled my eyes at her use of the word "trauma" because it seemed to me a transparent way to show political support to folks whose trauma narratives are important to...

I guess there's a general thing here: the more you can claim to have been harmed, the more likely people are to rally to support you.

I was once in a social dynamic where I would construct things such that I was visibly sad or put upon, because that was the only way I knew to receive (a type of) affection.

They need it, and they need it to be believed, because that's the only way they can feel loved and supported.

(Though that begs the question of WHY people have that need.)

The establishment certifies that this problem is HARD, you're not expected to just be able to trivially solve it. Which gives one protection against others' claims (or even demands) that you can and should.

Do you have a story for what's going on?

More from Eli Tyre

I started by simply stating that I thought that the arguments that I had heard so far don't hold up, and seeing if anyone was interested in going into it in depth with

CritRats!

— Eli Tyre (@EpistemicHope) December 26, 2020

I think AI risk is a real existential concern, and I claim that the CritRat counterarguments that I've heard so far (keywords: universality, person, moral knowledge, education, etc.) don't hold up.

Anyone want to hash this out with me? https://t.co/Sdm4SSfQZv

So far, a few people have engaged pretty extensively with me, for instance, scheduling video calls to talk about some of the stuff, or long private chats.

(Links to some of those that are public at the bottom of the thread.)

But in addition to that, there has been a much more sprawling conversation happening on twitter, involving a much larger number of people.

Having talked to a number of people, I then offered a paraphrase of the basic counter that I was hearing from people of the Crit Rat persuasion.

ELI'S PARAPHRASE OF THE CRIT RAT STORY ABOUT AGI AND AI RISK

— Eli Tyre (@EpistemicHope) January 5, 2021

There are two things that you might call "AI".

The first is non-general AI, which is a program that follows some pre-set algorithm to solve a pre-set problem. This includes modern ML.

In general, I am super up for short (1 to 10 hour) adversarial collaborations.

— Eli Tyre (@EpistemicHope) December 23, 2020

If you think I'm wrong about something, and want to dig into the topic with me to find out what's up / prove me wrong, DM me.

For instance, while I heartily agree with lots of what is said in this video, I don't think that the conclusion about how to prevent (the bad kind of) human extinction, with regard to AGI, follows.

There are a number of reasons to think that AGI will be more dangerous than most people are, despite both people and AGIs being qualitatively the same sort of thing (explanatory knowledge-creating entities).

And I maintain that, because of practical/quantitative (not fundamental/qualitative) differences, the development of AGI / TAI is very likely to destroy the world by default.

(I'm not clear on exactly how much disagreement there is. In the video above, Deutsch says "Building an AGI with perverse emotions that lead it to immoral actions would be a crime."

More from Health

#FollowTheScience yes, but not just part of it!

THREAD👇

🔴LIVE 📅Today ⏰12:00 CET

— EU_HEALTH - #EUCancerPlan (@EU_Health) February 3, 2021

We are presenting today the #EUCancerPlan as part of a strong 🇪🇺 #HealthUnion

Follow the presentation live here: https://t.co/Cr8ATvzNkg #WorldCancerDay pic.twitter.com/zdByuklWV6

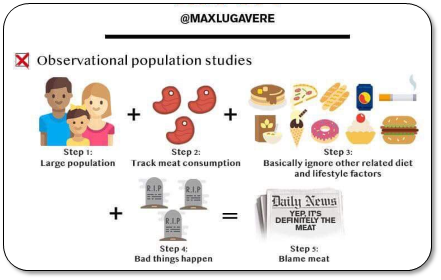

1/ Granted, some studies have pointed to ASSOCIATIONS of HIGH intake of red & processed meats with (slightly!) increased colorectal cancer incidence. Also, @WHO/IARC is often mentioned in support (usually hyperbolically so).

But, let’s have a closer look at all this! 🔍

2/ First, meat being “associated” with cancer is very different from stating that meat CAUSES cancer.

Unwarranted use of causal language is widespread in nutritional sciences, posing a systemic problem & undermining credibility.

3/ That’s because observational data are CONFOUNDED (even after statistical adjustment).

Healthy user bias is a major problem. Healthy middle classes are TOLD to eat less red meat (due to historical rather than rational reasons, cf link). So, they

4/ What’s captured here is sociology, not physiology.

Health-focused Westerners eat less red meat, whereas those who don’t adhere to dietary advice tend to have unhealthier lifestyles.

That tells us very little about meat AS SUCH being responsible for disease.

You May Also Like

If everyone was holding bitcoin on the old x86 in their parents' basement, we would be finding a price bottom. The problem is the risk is all pooled at a few brokerages and a network of rotten exchanges with counterparty risk that makes AIG circa 2008 look like a good credit.

— Greg Wester (@gwestr) November 25, 2018

The benign product is sovereign programmable money, which is historically a niche interest of folks with a relatively clustered set of beliefs about the state, the literary merit of Snow Crash, and the utility of gold to the modern economy.

This product has narrow appeal and, accordingly, is worth about as much as everything else on a 486 sitting in someone's basement is worth.

The other product is investment scams, which have approximately the best product market fit of anything produced by humans. In no age, in no country, in no city, at no level of sophistication do people consistently say "Actually I would prefer not to get money for nothing."

This product needs the exchanges like they need oxygen, because the value of it is directly tied to having payment rails to move real currency into the ecosystem and some jurisdictional and regulatory legerdemain to stay one step ahead of the banhammer.

I believe that @ripple_crippler and @looP_rM311_7211 are the same person. I know, nobody believes that. 2/*

Today I want to prove that Mr Pool's smiley faces mean XRP and a price increase. In Ripple_Crippler, prior to Mr Pool's existence, smiley faces were frequent. They were very similar to the ones Mr Pool posts. The eyes were also usually a pair of "x"s, in fact the XRP logo. 3/*

The smile XRP-eyed face also appears related to the Moon. XRP going to the Moon. 4/*

And smile XRP-eyed faces also appear related to Egypt. In particular, to the Eye of Horus. https://t.co/i4rRzuQ0gZ 5/*