My friends @robwalling and @einarvollset just launched TinySeed, an accelerator for software companies where a successful outcome is a healthy, sustainable business rather than attempting to ride the rocketship trajectory. https://t.co/LSHqZNcVid

I have some thoughts:

More from All

@franciscodeasis https://t.co/OuQaBRFPu7

Unfortunately, the isolations described in "This work includes the identification of viral sequences in bat samples, and has resulted in the isolation of three bat SARS-related coronaviruses that are now used as reagents to test therapeutics and vaccines." were made BEFORE the chimeric infectious clone grants were there.

https://t.co/DAArwFkz6v is in 2017, Rs4231.

https://t.co/UgXygDjYbW is in 2016, RsSHC014 and RsWIV16.

https://t.co/krO69CsJ94 is in 2013, RsWIV1. Notice that this is before the beginning of the project, which started in 2016. Also remember that they reported only 3 isolates/live viruses. RsSHC014 is a live infectious clone that is just as alive as those other "Isolates".

P.D. somehow is able to use funds that he has not yet received, and to send results and sequences from late 2019 back in time to 2015, 2013 and 2016!

https://t.co/4wC7k1Lh54 Ref 3: Why were ALL your pangolin samples PCR negative? To avoid deep sequencing that might accidentally reveal Paguma larvata and Oryctolagus cuniculus?

How can we use language supervision to learn better visual representations for robotics?

Introducing Voltron: Language-Driven Representation Learning for Robotics!

Paper: https://t.co/gIsRPtSjKz

Models: https://t.co/NOB3cpATYG

Evaluation: https://t.co/aOzQu95J8z

🧵👇(1 / 12)

Videos of humans performing everyday tasks (Something-Something-v2, Ego4D) offer a rich and diverse resource for learning representations for robotic manipulation.

Yet an underused part of these datasets is the rich natural language annotation accompanying each video. (2/12)

The Voltron framework offers a simple way to use language supervision to shape representation learning, building off of prior work in representations for robotics like MVP (https://t.co/Pb0mk9hb4i) and R3M (https://t.co/o2Fkc3fP0e).

The secret is *balance* (3/12)

Starting with a masked autoencoder over frames from these video clips, make a choice:

1) Condition on language and improve our ability to reconstruct the scene.

2) Generate language given the visual representation and improve our ability to describe what's happening. (4/12)

By trading off *conditioning* and *generation* we show that we can learn 1) better representations than prior methods, and 2) explicitly shape the balance of low and high-level features captured.

Why is the ability to shape this balance important? (5/12)
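
One way to picture the conditioning/generation trade-off is as a per-batch choice between the two objectives. The sketch below is illustrative only and is not Voltron's training code: the mixing probability `alpha`, the function names, and the idea of sampling the objective per batch are all assumptions made for this example.

```python
import random


def choose_objective(alpha: float, rng: random.Random) -> str:
    """Pick the objective for one training batch.

    `alpha` is a hypothetical mixing probability (an assumption, not a
    value from the thread):
      alpha = 1.0 -> always condition on language while reconstructing
      alpha = 0.0 -> always generate language from the visual features
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    # rng.random() is in [0, 1), so alpha = 1.0 always conditions
    # and alpha = 0.0 always generates.
    return "condition" if rng.random() < alpha else "generate"


def objective_mix(alpha: float, num_batches: int, seed: int = 0) -> dict:
    """Count how often each objective is chosen over `num_batches` batches."""
    rng = random.Random(seed)
    counts = {"condition": 0, "generate": 0}
    for _ in range(num_batches):
        counts[choose_objective(alpha, rng)] += 1
    return counts


if __name__ == "__main__":
    for alpha in (0.0, 0.5, 1.0):
        print(alpha, objective_mix(alpha, num_batches=1000))
```

Under these assumptions, moving `alpha` toward 1 spends more batches on language-conditioned reconstruction, and moving it toward 0 spends more on language generation.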

You May Also Like

I like this heuristic, and have a few which are similar in intent to it:

Here's how I'd measure the health of any tech company:

How long, as measured from the inception of idea to the modified software arriving in the user's hands, does it take to roll out a *1 word copy change* in your primary product?

- Jeff Atwood (@codinghorror), October 25, 2018

Hiring efficiency:

How long does it take, measured from initial expression of interest through offer of employment signed, for a typical candidate cold inbounding to the company?

What is the *theoretical minimum* for *any* candidate?

How long does it take, as a developer newly hired at the company:

* To get a fully credentialed machine issued to you

* To get a fully functional development environment on that machine which could push code to production immediately

* To solo ship one material quantum of work

How long does it take, from first idea floated to "It's on the Internet", to create a piece of marketing collateral?

(For bonus points: break down by ambitiousness / form factor.)

How many people have to say yes to do something which is clearly worth doing, which costs $5,000 / $15,000 / $250,000, and which has never been done before?

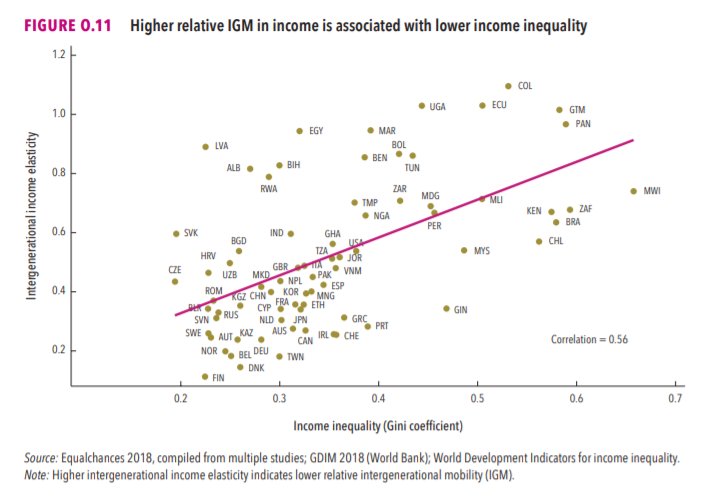

1/OK, data mystery time.

This New York Times feature shows China with a Gini Index of less than 30, which would make it more equal than Canada, France, or the Netherlands. https://t.co/g3Sv6DZTDE

That's weird. Income inequality in China is legendary.

Let's check this number.

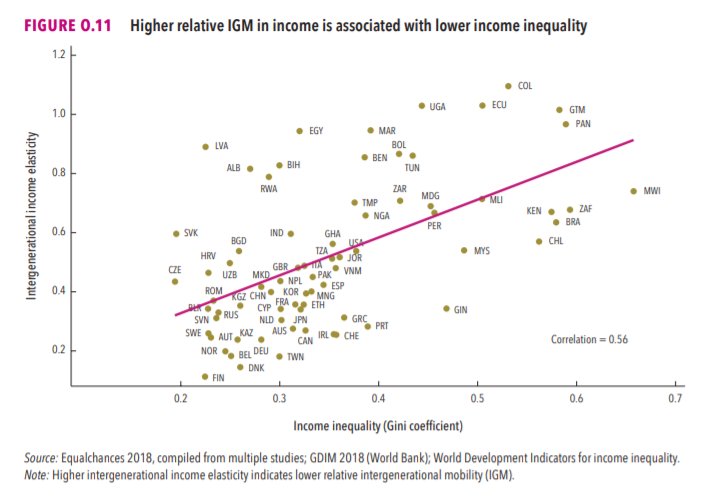

2/The New York Times cites the World Bank's recent report, "Fair Progress? Economic Mobility across Generations Around the World".

The report is available here:

3/The World Bank report has a graph in which it appears to show the same value for China's Gini - under 0.3.

The graph cites the World Development Indicators as its source for the income inequality data.
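
A note on scales, since both appear in this thread: the Gini coefficient runs from 0 to 1, and the Gini index is the same number multiplied by 100, so "under 0.3" and "less than 30" describe the same value. A minimal sketch of the standard calculation from a list of incomes follows. The function names and the sample incomes are made up for illustration and are not World Bank data.

```python
def gini_coefficient(incomes):
    """Gini coefficient (0 = perfect equality) for non-negative incomes.

    Uses the rank-based formula on sorted values x_1 <= ... <= x_n:
        G = 2 * sum(i * x_i) / (n * sum(x)) - (n + 1) / n
    """
    values = sorted(incomes)
    n = len(values)
    total = sum(values)
    if n == 0 or total <= 0 or values[0] < 0:
        raise ValueError("need non-negative incomes with a positive total")
    weighted = sum(rank * value for rank, value in enumerate(values, start=1))
    return 2 * weighted / (n * total) - (n + 1) / n


def to_index(coefficient):
    """Convert a 0-1 Gini coefficient to the 0-100 Gini index."""
    return 100 * coefficient


if __name__ == "__main__":
    sample = [1, 2, 3, 4]  # hypothetical incomes, for illustration only
    g = gini_coefficient(sample)
    print(f"coefficient={g:.2f} index={to_index(g):.0f}")
```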

4/The World Development Indicators are available at the World Bank's website.

Here's the Gini index: https://t.co/MvylQzpX6A

It looks as if the latest estimate for China's Gini is 42.2.

That estimate is from 2012.

5/A Gini of 42.2 would put China in the same neighborhood as the U.S., whose Gini was estimated at 41 in 2013.

I can't find the <30 number anywhere. The only other estimate in the tables for China is from 2008, when it was estimated at 42.8.
