Ever heard of Autoencoders?

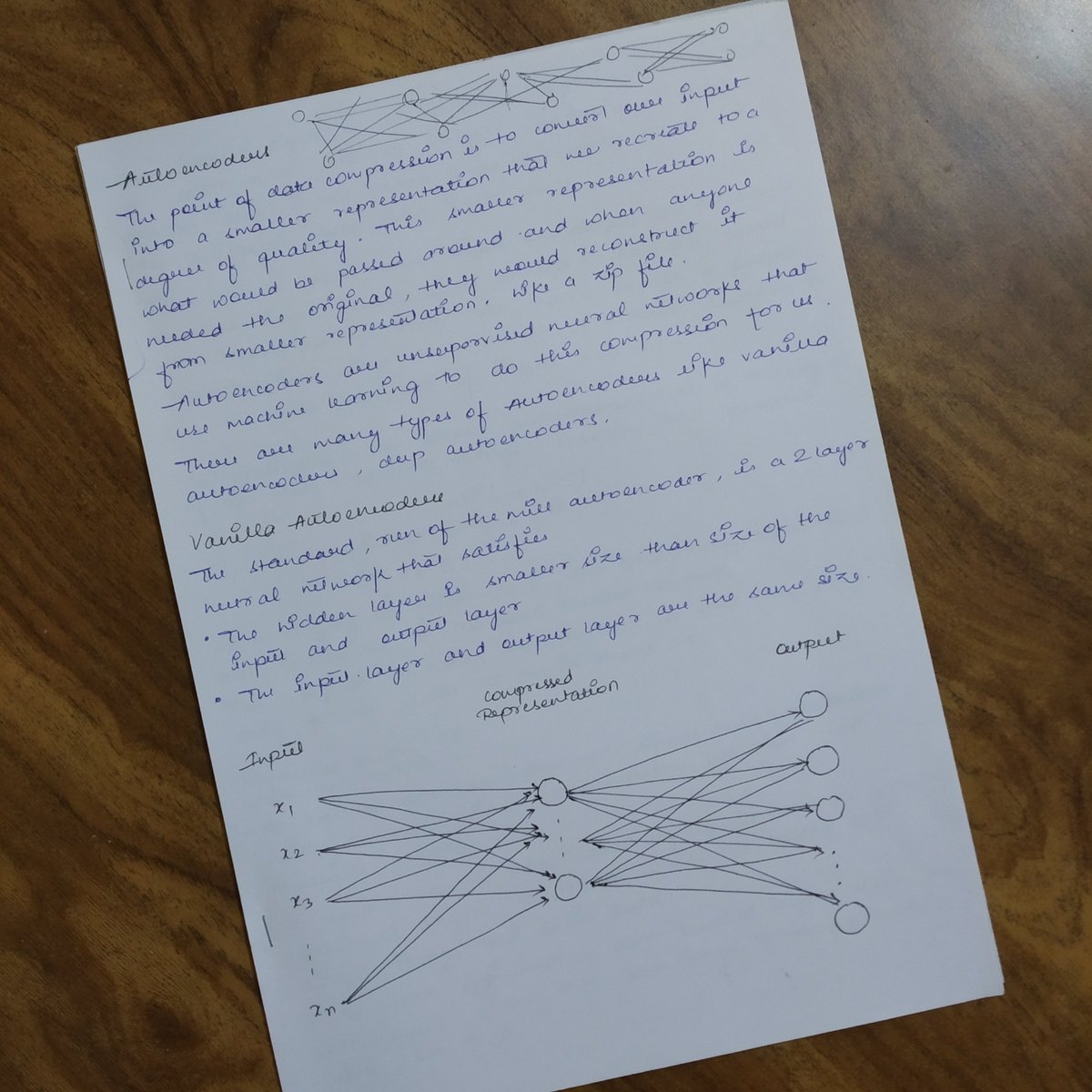

The first time I saw a Neural Network with more output neurons than neurons in the hidden layers, I couldn't figure out how it would work!

#DeepLearning #MachineLearning

Here's a little something about them: 🧵👇

These networks are a primary focus for data compression tasks in Machine Learning: the encoder half squeezes the input into a small representation.

Later, when someone needs it, they can take that small representation and recreate the original, much like unpacking a zip file. 📥

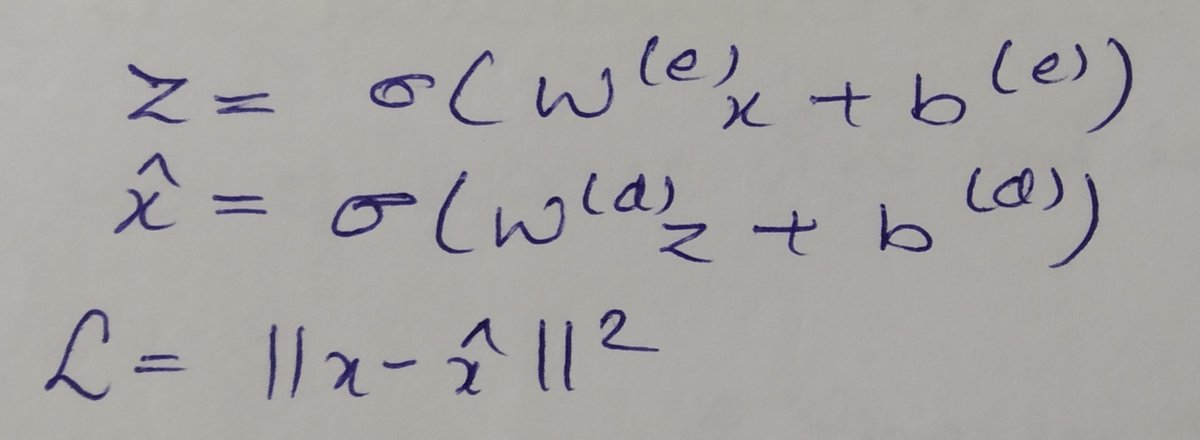

Our inputs and target outputs are the same, so a simple Euclidean distance between the input and its reconstruction can be used as the loss function.
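Here's a minimal sketch of that loss in plain Python. The function name `reconstruction_loss` and the sample vectors are made up for illustration; they are not from any particular library.

```python
import math

def reconstruction_loss(original, reconstruction):
    """Euclidean distance between an input vector and its reconstruction."""
    if len(original) != len(reconstruction):
        raise ValueError("vectors must have the same length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(original, reconstruction)))

x = [1.0, 0.0, 2.0]        # the input, which is also the target
x_hat = [1.0, 0.0, 0.0]    # hypothetical decoder output
print(reconstruction_loss(x, x_hat))  # 2.0
```

A loss of 0 would mean a perfect reconstruction; the further the output drifts from the input, the larger the value.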

Of course, we wouldn't expect a perfect reconstruction.

We are just trying to minimize the loss L here. All the usual backpropagation rules still hold.
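To make that concrete, here's a rough NumPy sketch of a tiny autoencoder trained with hand-written backpropagation. Everything in it is an assumption chosen for illustration: the function name `train_autoencoder`, the synthetic 6-feature data, the 2-unit tanh bottleneck, the learning rate, and the use of mean squared error (the squared, averaged form of the Euclidean distance) as L.

```python
import numpy as np

def train_autoencoder(X, hidden=2, lr=0.05, epochs=500, seed=0):
    """Train a one-hidden-layer autoencoder with plain gradient descent.

    Returns the learned parameters and the loss recorded at every epoch.
    """
    rng = np.random.default_rng(seed)
    n_features = X.shape[1]
    W1 = rng.normal(scale=0.1, size=(n_features, hidden))  # encoder weights
    b1 = np.zeros(hidden)
    W2 = rng.normal(scale=0.1, size=(hidden, n_features))  # decoder weights
    b2 = np.zeros(n_features)
    losses = []
    for _ in range(epochs):
        # Forward pass: encode into the bottleneck, then decode.
        H = np.tanh(X @ W1 + b1)
        X_hat = H @ W2 + b2
        err = X_hat - X
        losses.append(float(np.mean(err ** 2)))  # L: mean squared error

        # Backward pass: ordinary backpropagation of L.
        grad_out = 2.0 * err / err.size
        grad_W2 = H.T @ grad_out
        grad_b2 = grad_out.sum(axis=0)
        grad_pre = (grad_out @ W2.T) * (1.0 - H ** 2)  # tanh derivative
        grad_W1 = X.T @ grad_pre
        grad_b1 = grad_pre.sum(axis=0)

        # Gradient descent step.
        W1 -= lr * grad_W1
        b1 -= lr * grad_b1
        W2 -= lr * grad_W2
        b2 -= lr * grad_b2
    return (W1, b1, W2, b2), losses

# Synthetic data: 6 observed features generated from 2 hidden factors.
rng = np.random.default_rng(42)
latent = rng.normal(size=(200, 2))
X = latent @ rng.normal(size=(2, 6))
X = (X - X.mean(axis=0)) / X.std(axis=0)  # standardize each feature

params, losses = train_autoencoder(X)
code = np.tanh(X @ params[0] + params[1])  # the compressed representation
print(code.shape)                          # (200, 2)
print(losses[0], losses[-1])               # loss before and after training
```

The target passed to the loss is the input `X` itself, which is the only thing that separates this from an ordinary regression network.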

▫️ Can learn non-linear transformations by using non-linear activation functions and multiple layers.

▫️ Isn't limited to dense layers; it can use convolutional layers too, which suit images and videos better.

▫️ Can reuse pre-trained layers from another model, applying transfer learning to enhance the encoder/decoder.

🔸 Image Colouring

🔸 Feature Variation

🔸 Dimensionality Reduction

🔸 Image Denoising

🔸 Watermark Removal

More from Machine learning

With hard work and determination, anyone can learn to code.

Here’s a list of my favorite resources if you’re learning to code in 2021.

👇

1. freeCodeCamp.

I’d suggest picking one of the projects in the curriculum to tackle and then completing the lessons on syntax when you get stuck. This way you know *why* you’re learning what you’re learning, and you're building things.

2. https://t.co/7XC50GlIaa is a hidden gem. Things I love about it:

1) You can see the most upvoted solutions so you can read really good code

2) You can ask questions in the discussion section if you're stuck, and people often answer. It's free.

3. https://t.co/V9gcXqqLN6 and https://t.co/KbEYGL21iE

On Stack Overflow you can find answers to almost every problem you encounter. On GitHub you can read so much great code. You can build so much just from using these two resources and a blank text editor.

4. https://t.co/xX2J00fSrT @eggheadio specifically for frontend dev.

Their tutorials are designed to maximize your time, so you never feel overwhelmed by a 14-hour course. Also, the amount of prep they put into making great courses is unlike any other online course I've seen.

10 PYTHON 🐍 libraries for machine learning.

Retweets are appreciated.

[ Thread ]

1. NumPy (Numerical Python)

- The most powerful feature of NumPy is the n-dimensional array.

- It contains basic linear algebra functions, Fourier transforms, and tools for integrating with low-level languages such as C/C++ and Fortran.

Ref: https://t.co/XY13ILXwSN
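A quick sketch of those NumPy features, with small made-up arrays chosen so the results are easy to check by hand:

```python
import numpy as np

# n-dimensional array: reshape six numbers into 2 rows x 3 columns.
a = np.arange(6).reshape(2, 3)
print(a.shape)          # (2, 3)
print(a.sum(axis=0))    # [3 5 7]

# Linear algebra: solve A @ x = b.
A = np.array([[2.0, 0.0], [0.0, 4.0]])
b = np.array([2.0, 8.0])
x = np.linalg.solve(A, b)
print(x)                # [1. 2.]

# Fourier transform of a short signal.
signal = np.array([1.0, 0.0, -1.0, 0.0])
spectrum = np.fft.fft(signal)
print(spectrum.real)    # [0. 2. 0. 2.]
```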

2. SciPy (Scientific Python)

- SciPy is built on NumPy.

- It is one of the most useful libraries, offering a variety of high-level science and engineering modules such as discrete Fourier transforms, linear algebra, optimization, and sparse matrices.

Ref: https://t.co/ALTFqM2VUo
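As a small illustration of two of those modules, here's a sketch that minimizes a toy function and stores a mostly-zero matrix in sparse form. The function and the matrix values are invented for the example.

```python
from scipy.optimize import minimize_scalar
from scipy.sparse import csr_matrix

# Optimization: find the x that minimizes (x - 2)^2.
result = minimize_scalar(lambda x: (x - 2.0) ** 2)
print(round(result.x, 4))   # 2.0

# Sparse matrices: only the non-zero entries are stored.
M = csr_matrix([[0, 0, 3], [4, 0, 0]])
print(M.shape)              # (2, 3)
print(M.nnz)                # 2
```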

3. Matplotlib

- Matplotlib is a comprehensive library for creating static, animated, and interactive visualizations in Python.

- You can also use LaTeX commands to add math to your plot.

- Matplotlib makes hard things possible.

Ref: https://t.co/zodOo2WzGx

4. Pandas

- Pandas is for structured data operations and manipulations.

- It is extensively used for data munging and preparation.

- Pandas was added to the Python ecosystem relatively recently and has been instrumental in boosting Python’s usage.

Ref: https://t.co/IFzikVHht4
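A typical bit of data munging might look like the sketch below. The `city` and `temp_c` columns and their values are made up for illustration.

```python
import pandas as pd

# A small table with one missing reading.
df = pd.DataFrame({
    "city": ["Oslo", "Oslo", "Lima", "Lima"],
    "temp_c": [3.0, None, 19.0, 21.0],
})

clean = df.dropna(subset=["temp_c"])            # drop rows with missing values
means = clean.groupby("city")["temp_c"].mean()  # average temperature per city
print(means["Lima"])   # 20.0
print(means["Oslo"])   # 3.0
```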
