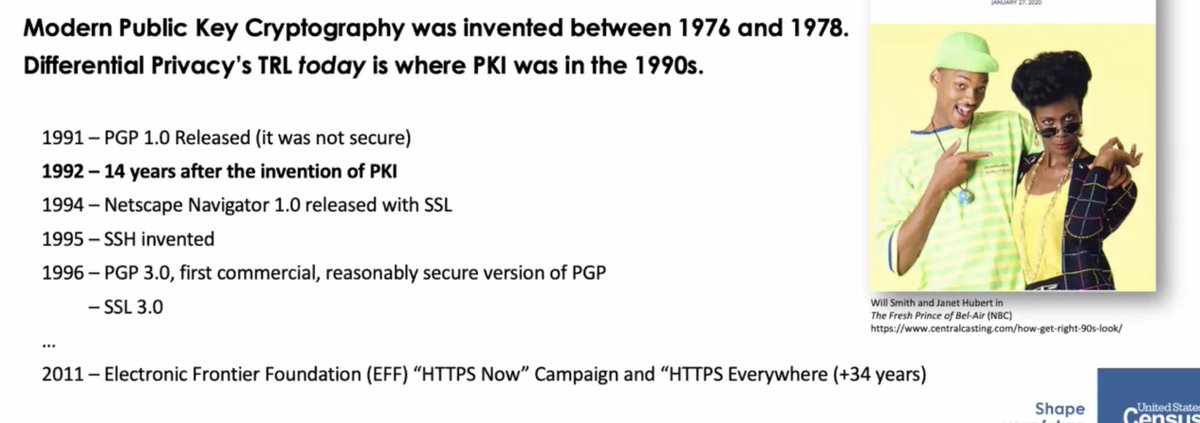

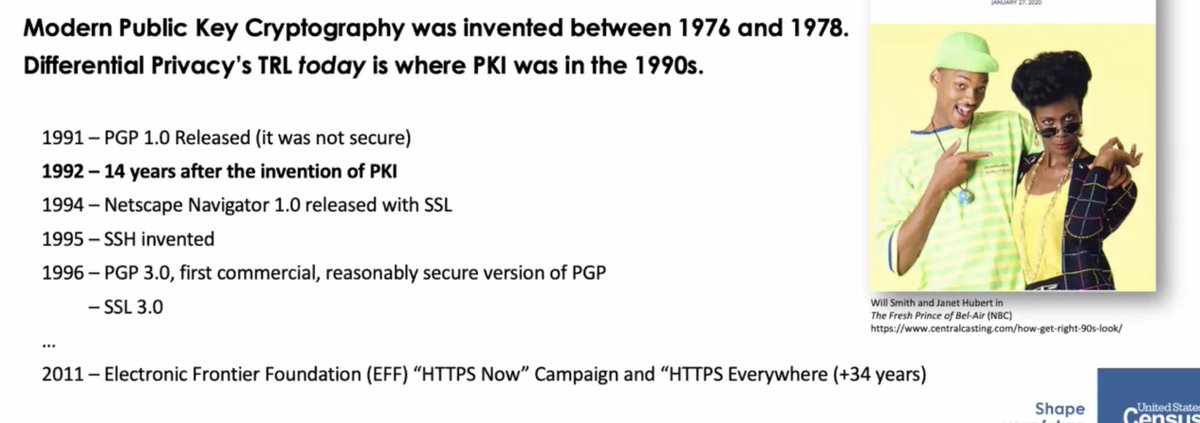

Last up in Privacy Tech for #enigma2021, @xchatty speaking about "IMPLEMENTING DIFFERENTIAL PRIVACY FOR THE 2020

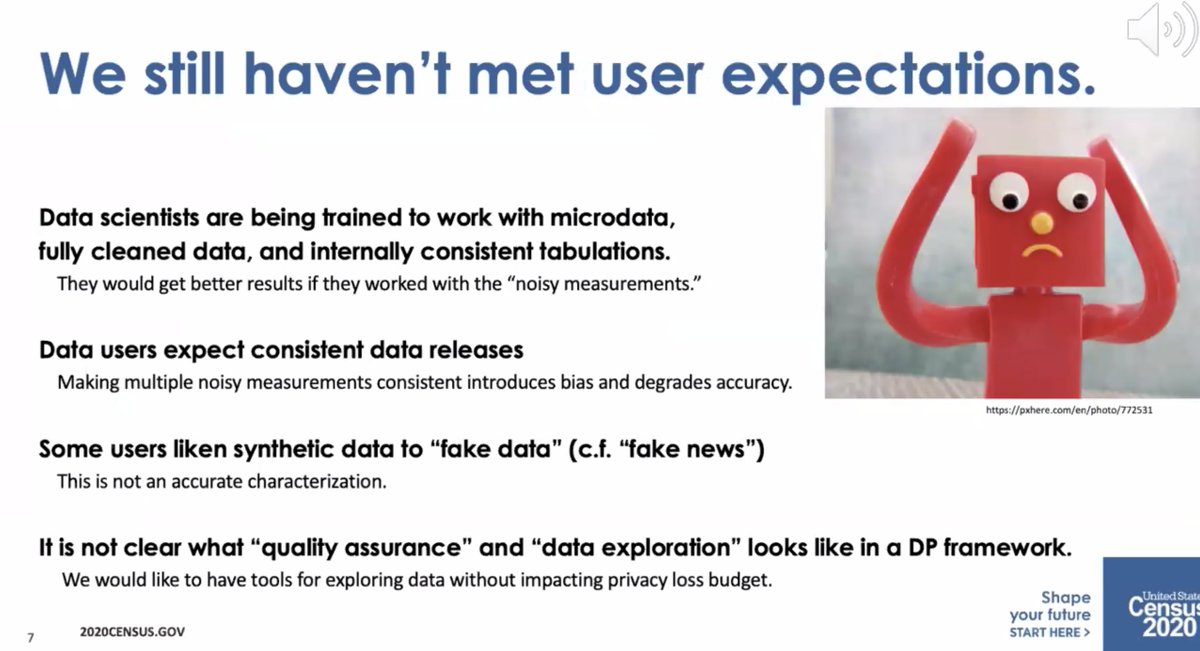

* Data users expect consistent data releases

* Some people call synthetic data "fake data," as in "fake news"

* It's not clear what "quality assurance" and "data exploration" mean in a DP framework

* required by the Constitution to collect the data

* but required by law to maintain privacy

* differential privacy is open (transparent), so we can talk about the privacy-loss/accuracy tradeoff

* swapping, the previous method, assumed limits on attackers' capabilities (e.g., limited computational power)
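To make the privacy-loss/accuracy tradeoff concrete, here is a minimal sketch using the textbook Laplace mechanism on a single count. This is an assumption for illustration, not the Census Bureau's production algorithm, and the helper names (`laplace_noise`, `noisy_count`, `mean_abs_error`) are hypothetical. A count changes by at most 1 when one person is added or removed, so the noise scale is 1/epsilon and the expected absolute error is 1/epsilon: spending less privacy (smaller epsilon) costs accuracy.

```python
import random

# Hypothetical helper names; this is the textbook Laplace mechanism for one
# count query, not the Census Bureau's production algorithm.


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two i.i.d. exponentials."""
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def noisy_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Release a count with epsilon-DP; a count has sensitivity 1, so scale = 1/epsilon."""
    return true_count + laplace_noise(1.0 / epsilon, rng)


def mean_abs_error(epsilon: float, trials: int = 20000, seed: int = 0) -> float:
    """Empirical mean |noisy - true|; in theory this equals 1/epsilon."""
    rng = random.Random(seed)
    true_count = 100
    total = 0.0
    for _ in range(trials):
        total += abs(noisy_count(true_count, epsilon, rng) - true_count)
    return total / trials


if __name__ == "__main__":
    # Smaller epsilon means less privacy loss and more error.
    for eps in (0.1, 1.0, 10.0):
        print(f"epsilon={eps:>4}: mean |error| {mean_abs_error(eps):.3f} (theory {1 / eps:.3f})")
```

Running it should show the empirical error tracking 1/epsilon, which is the number that can be discussed openly with data users.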

The meaning of "privacy" changes to something relative, which requires a lot of explanation and overcoming organizational barriers.

* different groups at the Census Bureau thought it meant different things

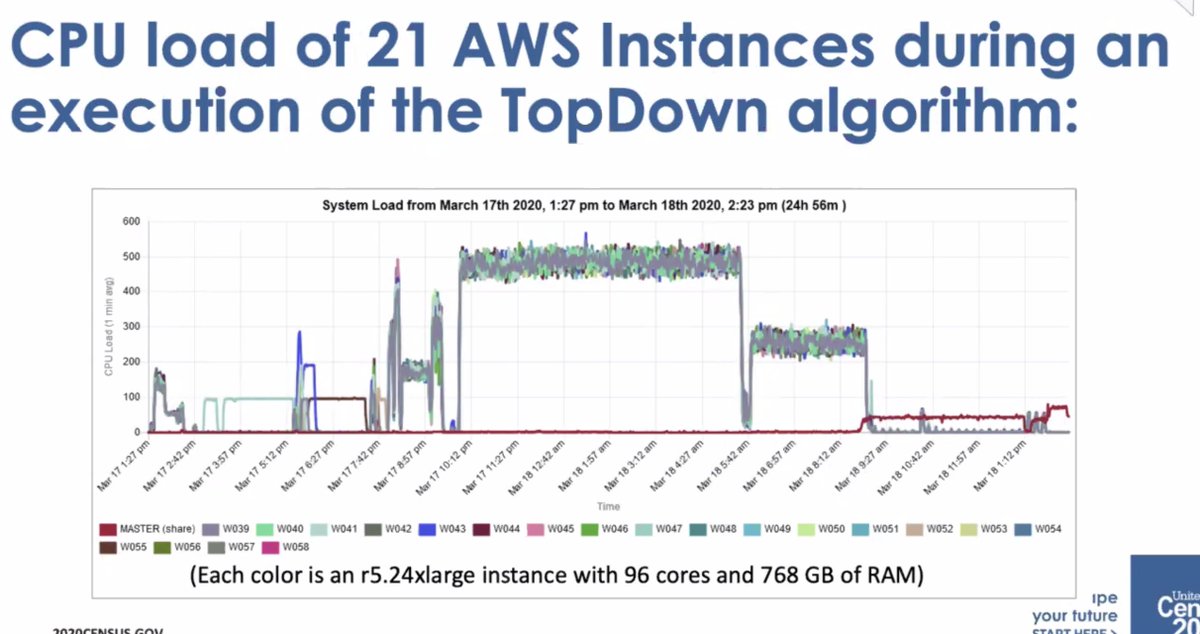

* previously, states were processed as their data came in; differential privacy requires computing on everything at once

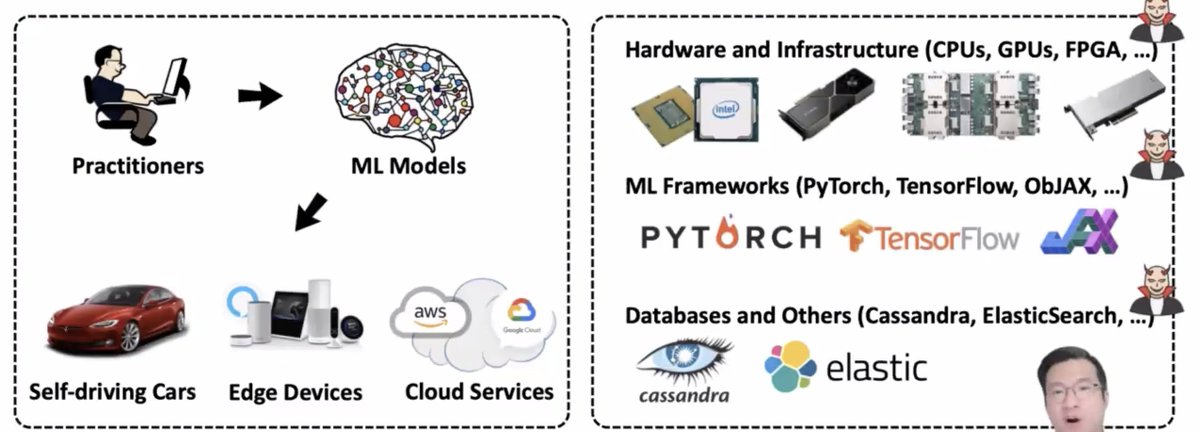

* required a lot more computing power

* the initial implementation was by Dan Kifer, who took a sabbatical

* expanded the team with Simson and others

* 2018 end-to-end test

* then got to move to AWS Elastic compute... but the monitoring wasn't good enough, so they had to create their own dashboard to track execution

* it wasn't a small amount of compute

* ... it wasn't well received by data users, who thought there was too much error

If you avoid that, you might add bias to the data. How do you avoid that? Let some data users access the measurement files. [I don't follow]
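One possible reading of the bias point, offered as an assumption since the notes don't spell it out: noisy counts can come out negative, and post-processing them to be nonnegative (clamping at zero) biases small counts upward, while the raw noisy measurements stay unbiased. That would explain why access to the measurement files helps some data users. The sketch below uses the textbook Laplace mechanism, and the helper names are hypothetical.

```python
import random
import statistics

# Assumption: the "bias" comes from post-processing such as forcing noisy
# counts to be nonnegative. Helper names are hypothetical.


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two i.i.d. exponentials."""
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def compare_bias(true_count: int, epsilon: float, trials: int = 50000, seed: int = 1):
    """Return (bias of raw noisy counts, bias after clamping negatives to zero)."""
    rng = random.Random(seed)
    raw, clamped = [], []
    for _ in range(trials):
        noisy = true_count + laplace_noise(1.0 / epsilon, rng)
        raw.append(noisy)                # the noisy measurement itself
        clamped.append(max(noisy, 0.0))  # post-processed: no negative counts
    return (statistics.fmean(raw) - true_count,
            statistics.fmean(clamped) - true_count)


if __name__ == "__main__":
    for true_count in (0, 2, 50):
        raw_bias, clamped_bias = compare_bias(true_count, epsilon=1.0)
        print(f"true={true_count:>2}: raw bias {raw_bias:+.3f}, clamped bias {clamped_bias:+.3f}")
```

For a true count of 0 with epsilon = 1, clamping raises the average by about 0.5 (half the mean of an Exp(1) draw), while the raw measurements average out to the truth. For large counts, clamping almost never triggers, so the two agree.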


You May Also Like

His arrogance and ambition prohibit any allegiance to morality or character.

Thus far, his plan to seize the presidency has fallen into place.

An explanation in photographs.

🧵

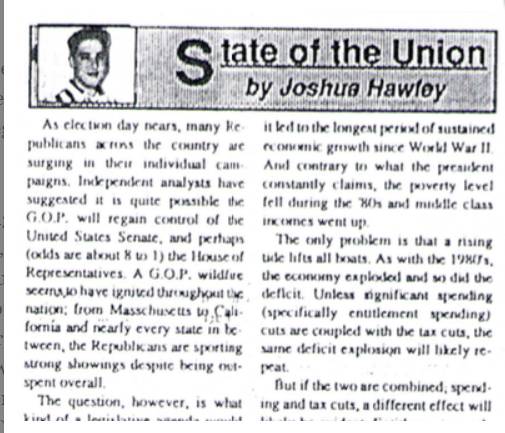

Joshua grew up in the next town over from mine, in Lexington, Missouri. As a teenager he wrote a column for the local paper, where he perfected his political condescension.

2/

By the time he reached high school, however, he attended an elite private high school 60 miles away in Kansas City.

This is a piece of his history he works to erase as he builds up his counterfeit image as a rural farm boy from a small town who grew up farming.

3/

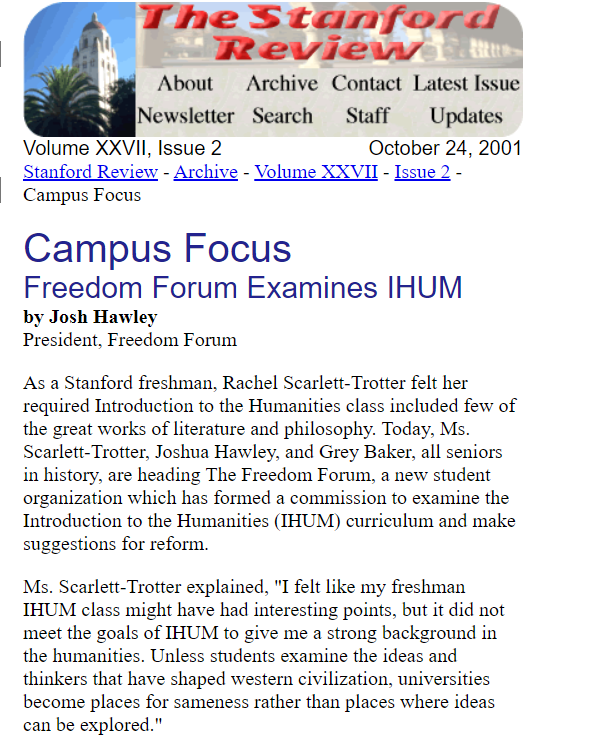

After graduating from Rockhurst High School, he attended Stanford University, where he wrote for the Stanford Review, a libertarian publication founded by Peter Thiel.

4/

(Full Link: https://t.co/zixs1HazLk)

Hawley's writing during his early 20s reveals that he wished for the curriculum at Stanford and other "liberal institutions" to change and to incorporate more conservative moral values.

This led him to create the "Freedom Forum."

5/

Like company moats, your personal moat should be a competitive advantage that is not only durable—it should also compound over time.

Characteristics of a personal moat below:

I'm increasingly interested in the idea of "personal moats" in the context of careers.

— Erik Torenberg (@eriktorenberg) November 22, 2018

Moats should be:

- Hard to learn and hard to do (but perhaps easier for you)

- Skills that are rare and valuable

- Legible

- Compounding over time

- Unique to your own talents & interests https://t.co/bB3k1YcH5b

2/ Like a company moat, you want to build career capital while you sleep.

As Andrew Chen noted:

People talk about “passive income” a lot but not about “passive social capital” or “passive networking” or “passive knowledge gaining” but that’s what you can architect if you have a thing and it grows over time without intensive constant effort to sustain it

— Andrew Chen (@andrewchen) November 22, 2018

3/ You don’t want to build a competitive advantage that is fleeting or that will get commoditized

Things that might get commoditized over time (some longer than

Things that look like moats but likely aren’t or may fade:

— Erik Torenberg (@eriktorenberg) November 22, 2018

- Proprietary networks

- Being something other than one of the best at any tournament-style game

- Many "awards"

- Twitter followers or general reach without "respect"

- Anything that depends on information asymmetry https://t.co/abjxesVIh9

4/ Before the arrival of recorded music, what used to be scarce was the music itself, which required an in-person artist.

After recorded music, the music itself became abundant and what became scarce was curation, distribution, and shelf space.

5/ Similarly, in careers, what used to be (more) scarce were things like ideas, money, and exclusive relationships.

In the internet economy, what has become scarce are things like specific knowledge, rare & valuable skills, and great reputations.