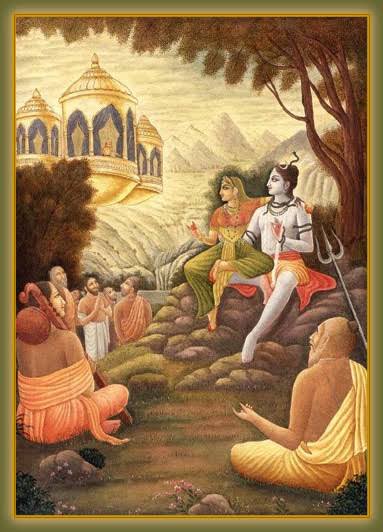

JWALAMUKHI TRIPURASUNDARI, UTHANAHALLI, MYSURU (KAR)

#bharatmandir

Jwalamukhi Tripurasundari is believed to be the sister of Devi Chamundeshwari, the presiding deity of Mysuru.

It is also believed that she represents the flaming mouth of Jalandhara, the demon whom Shiva crushed to death.

The murti was installed during Wodeyar rule. An utsava murti made of pancha loha is kept in the sanctum sanctorum.

Another shrine here is dedicated to Siddeshwara / Shiva surrounded by a beautiful wooden Mantapa.

@GunduHuDuGa

Ivor Cummins has been wrong (or lying) almost entirely throughout this pandemic and got paid handsomely for it.

He has been wrong (or lying) so often that it will be nearly impossible for me to track every grift, lie, deceit, manipulation he has pulled. I will use...

... other sources who have been trying to shine a light on this grifter (as I have tried to do, time and again):

Example #1: "Still not seeing Sweden signal versus Denmark really"... There it was (Images attached).

19 to 80 is an over 300% difference.
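
As a quick check of that arithmetic, taking 19 as the baseline and 80 as the comparison value (the units are whatever the attached images report and are not assumed here), the relative increase works out to:

$$
\frac{80 - 19}{19} = \frac{61}{19} \approx 3.21 = 321\%
$$

So "over 300%" holds when the difference is measured relative to the smaller figure.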

Tweet: https://t.co/36FnYnsRT9

Example #2 - "Yes, I'm comparing the Nordics / No, you cannot compare the Nordics."

I wonder why...

Tweets: https://t.co/XLfoX4rpck / https://t.co/vjE1ctLU5x

Example #3 - "I'm only looking at what makes the data fit in my favour", a.k.a. moving the goalposts.

Tweets: https://t.co/vcDpTu3qyj / https://t.co/CA3N6hC2Lq

Ivor Cummins BE (Chem) is a former R&D Manager at HP (source: https://t.co/Wbf5scf7gn), turned Content Creator/Podcast Host/YouTube personality. (Call it what you will.)

— Steve (@braidedmanga) November 17, 2020

How can we use language supervision to learn better visual representations for robotics?

Introducing Voltron: Language-Driven Representation Learning for Robotics!

Paper: https://t.co/gIsRPtSjKz

Models: https://t.co/NOB3cpATYG

Evaluation: https://t.co/aOzQu95J8z

🧵👇(1 / 12)

Videos of humans performing everyday tasks (Something-Something-v2, Ego4D) offer a rich and diverse resource for learning representations for robotic manipulation.

Yet the rich natural language annotations accompanying each video remain an underused part of these datasets. (2/12)

The Voltron framework offers a simple way to use language supervision to shape representation learning, building off of prior work in representations for robotics like MVP (https://t.co/Pb0mk9hb4i) and R3M (https://t.co/o2Fkc3fP0e).

The secret is *balance* (3/12)

Starting with a masked autoencoder over frames from these video clips, make a choice:

1) Condition on language and improve our ability to reconstruct the scene.

2) Generate language given the visual representation and improve our ability to describe what's happening. (4/12)

By trading off *conditioning* and *generation* we show that we can learn 1) better representations than prior methods, and 2) explicitly shape the balance of low and high-level features captured.

Why is the ability to shape this balance important? (5/12)
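
To make the conditioning/generation trade-off concrete, here is a minimal sketch of one hypothetical way to balance the two objectives: for each training example, pick "condition" with probability `alpha` and "generate" otherwise. The thread does not spell out the mechanism, so the names `alpha`, `choose_objective`, and `objective_mix` are illustrative assumptions, not the Voltron API.

```python
import random


def choose_objective(alpha, rng):
    """Pick the objective for one example (hypothetical helper).

    Returns "condition" with probability alpha, otherwise "generate".
    """
    return "condition" if rng.random() < alpha else "generate"


def objective_mix(alpha, n_examples, seed=0):
    """Count how often each objective is chosen over n_examples draws."""
    rng = random.Random(seed)  # seeded so the sketch is reproducible
    counts = {"condition": 0, "generate": 0}
    for _ in range(n_examples):
        counts[choose_objective(alpha, rng)] += 1
    return counts


if __name__ == "__main__":
    # alpha = 1.0 always conditions; alpha = 0.0 always generates;
    # values in between mix the two objectives.
    for alpha in (0.0, 0.5, 1.0):
        print(alpha, objective_mix(alpha, 1000))
```

Under this assumed scheme, moving `alpha` toward 1 puts more weight on language-conditioned reconstruction, and moving it toward 0 puts more weight on language generation.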

I like this heuristic, and have a few which are similar in intent to it:

Hiring efficiency:

How long does it take, measured from initial expression of interest through offer of employment signed, for a typical candidate cold inbounding to the company?

What is the *theoretical minimum* for *any* candidate?

How long does it take, as a developer newly hired at the company:

* To get a fully credentialed machine issued to you

* To get a fully functional development environment on that machine which could push code to production immediately

* To solo ship one material quantum of work

How long does it take, from first idea floated to "It's on the Internet", to create a piece of marketing collateral?

(For bonus points: break down by ambitiousness / form factor.)

How many people have to say yes to do something which is clearly worth doing, which costs $5,000 / $15,000 / $250,000, and which has never been done before?

Here's how I'd measure the health of any tech company:

— Jeff Atwood (@codinghorror) October 25, 2018

How long, as measured from the inception of idea to the modified software arriving in the user's hands, does it take to roll out a *1 word copy change* in your primary product?

This is NONSENSE. The people who take photos with their books on instagram are known to be voracious readers who graciously take time to review books and recommend them to their followers. Part of their medium is to take elaborate, beautiful photos of books. Die mad, Guardian.

THEY DO READ THEM, YOU JUDGY, RACOON-PICKED TRASH BIN

If you come for Bookstagram, I will fight you.

In appreciation, here are some of my favourite bookstagrams of my books: (photos by lit_nerd37, mybookacademy, bookswrotemystory, and scorpio_books)

Beautifully read: why bookselfies are all over Instagram https://t.co/pBQA3JY0xm

— Guardian Books (@GuardianBooks) October 30, 2018

The YouTube algorithm that I helped build in 2011 still recommends the flat earth theory by the *hundreds of millions*. This investigation by @RawStory shows some of the real-life consequences of this badly designed AI.

This spring at SxSW, @SusanWojcicki promised "Wikipedia snippets" on debated videos. But they didn't put them on flat earth videos, and instead @YouTube is promoting merchandising such as "NASA lies - Never Trust a Snake". 2/

A few examples of flat earth videos that were promoted by YouTube #today:

https://t.co/TumQiX2tlj 3/

https://t.co/uAORIJ5BYX 4/

https://t.co/yOGZ0pLfHG 5/

Flat Earth conference attendees explain how they have been brainwashed by YouTube and Infowars https://t.co/gqZwGXPOoc

— Raw Story (@RawStory) November 18, 2018
