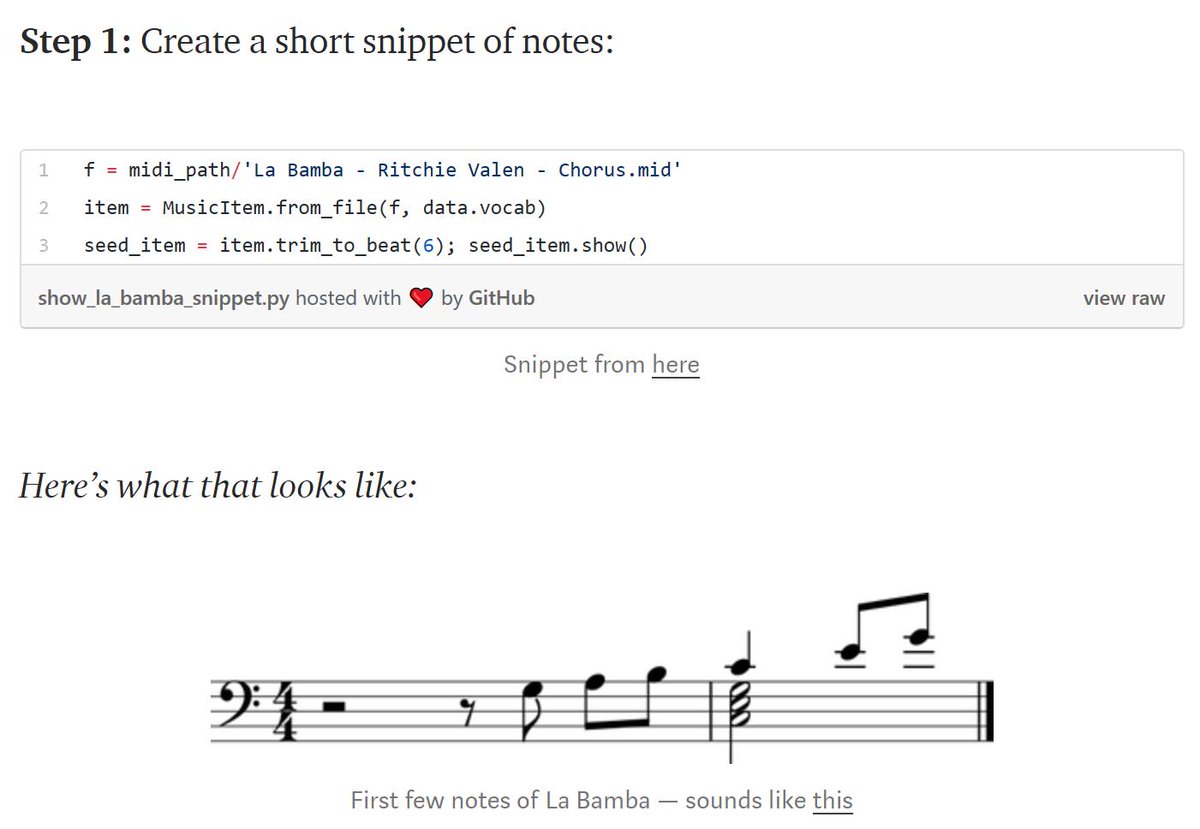

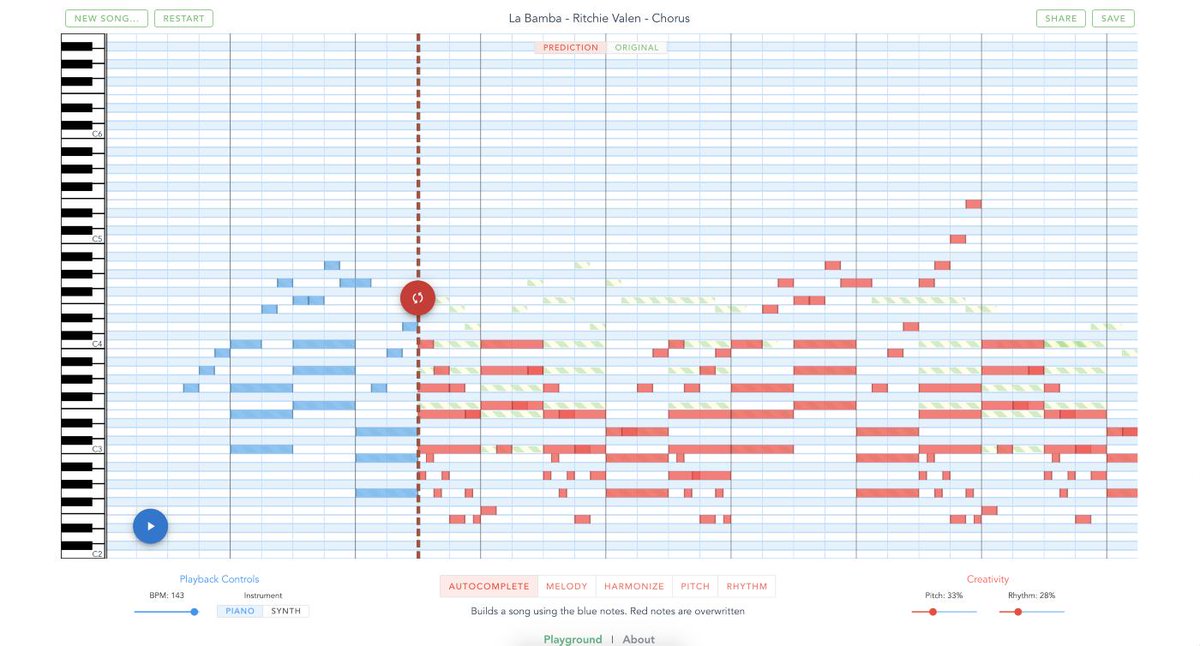

An amazing new project from @bearpelican was just released: https://t.co/DBov6sZTVS . A beautiful design; you can auto-generate a melody from chords, chords from a melody, and more.

It's technically brilliant, combining BERT, seq2seq, and Transformer XL

https://t.co/jF3mO5aXiu

https://t.co/DrCJxxJRAy

https://t.co/GgatWxa9nM

https://t.co/U9Yp5IZpxt

More from Data science

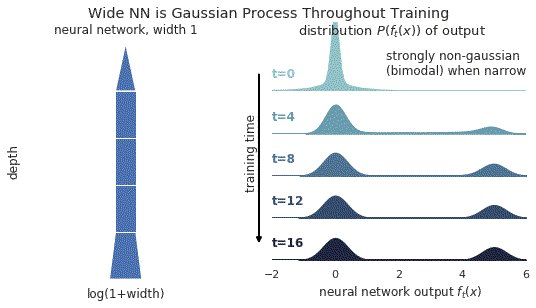

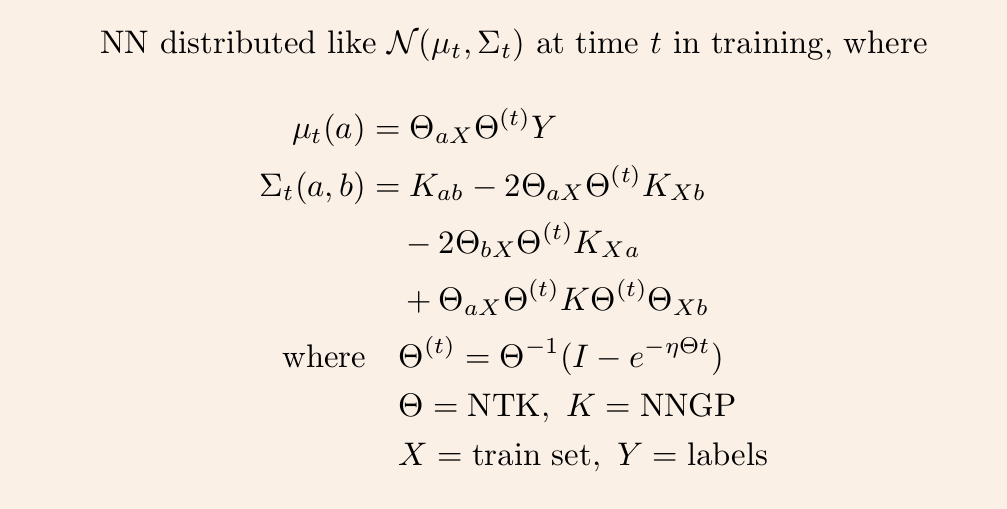

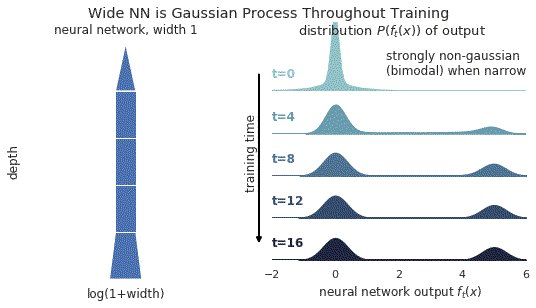

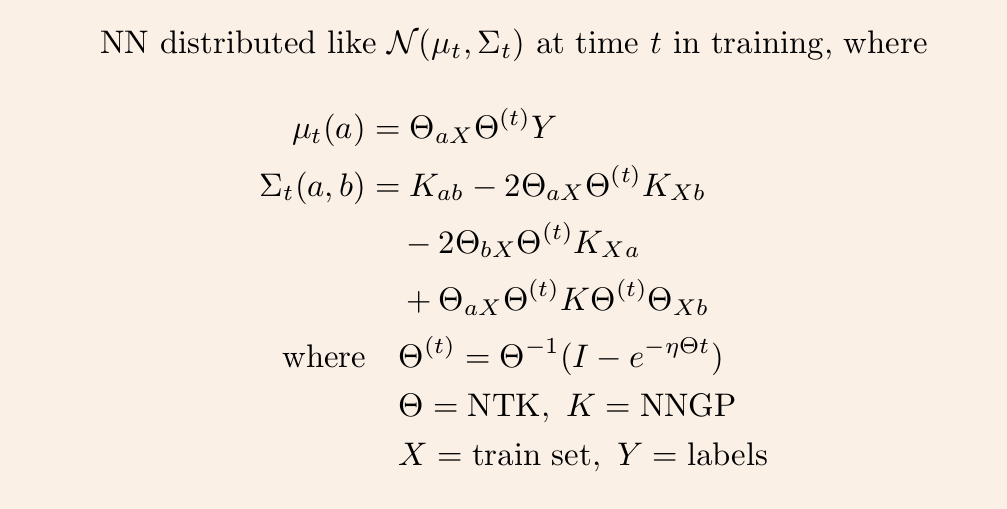

1/ An ∞-wide NN of *any architecture* is a Gaussian process (GP) at init. The NN in fact evolves linearly in function space under SGD, so it is a GP at *any time* during training. https://t.co/v1b6kndqCk With Tensor Programs, we can calculate this time-evolving GP w/o training any NN

2/ In this gif, narrow relu networks have a high probability of initializing near the 0 function (because of relu) and getting stuck. This causes the function distribution to become multi-modal over time. However, for wide relu networks this is not an issue.

3/ This time-evolving GP depends on two kernels: the kernel describing the GP at init, and the kernel describing the linear evolution of this GP. The former is the NNGP kernel, and the latter is the Neural Tangent Kernel (NTK).

4/ Once we have these two kernels, we can derive the GP mean and covariance at any time t via straightforward linear algebra.

5/ So it remains to calculate the NNGP kernel and NT kernel for any given architecture. The first is described in https://t.co/cFWfNC5ALC and in this thread
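The "straightforward linear algebra" in 4/ can be sketched as follows. This is a minimal sketch, not the thread author's code. It assumes MSE loss, continuous-time gradient descent with learning rate `lr`, and an NTK matrix that is positive definite on the training inputs, and it uses the closed form usually given for that linearized setting. The names `gp_at_time` and `rbf` are hypothetical, and `rbf` is only a stand-in kernel for trying the function out; it is not a real NNGP or NT kernel.

```python
import numpy as np

def rbf(a, b, length_scale):
    """Stand-in kernel for demos only (NOT an actual NNGP/NTK kernel)."""
    sq = np.sum((a[:, None, :] - b[None, :, :]) ** 2, axis=-1)
    return np.exp(-0.5 * sq / length_scale ** 2)

def gp_at_time(t, k_train, k_test_train, k_test,
               ntk_train, ntk_test_train, y, lr=1.0):
    """Mean and covariance of the network outputs at the test points at time t.

    k_*   : NNGP kernel blocks K(X, X), K(x, X), K(x, x)
    ntk_* : NTK blocks Theta(X, X), Theta(x, X)
    y     : training targets, shape (n_train,)
    """
    # Eigendecompose the symmetric train-train NTK (assumed positive definite).
    evals, evecs = np.linalg.eigh(ntk_train)
    # Theta^{-1} (I - exp(-lr * Theta * t)), applied per eigenvalue.
    decay = -np.expm1(-lr * evals * t) / evals
    # A = Theta(x, X) Theta^{-1} (I - exp(-lr * Theta * t))
    a = ntk_test_train @ (evecs * decay) @ evecs.T
    mean = a @ y
    cross = a @ k_test_train.T          # A K(X, x)
    cov = k_test + a @ k_train @ a.T - cross - cross.T
    return mean, cov
```

At `t = 0` this returns a zero mean and the NNGP covariance, i.e. the GP at init. Evaluated at the training inputs for large `t`, the mean approaches the targets and the covariance shrinks toward zero, which is a quick sanity check on any kernels you plug in.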

To my JVM friends looking to explore Machine Learning techniques - you don’t necessarily have to learn Python to do that. There are libraries you can use from the comfort of your JVM environment. 🧵👇

https://t.co/EwwOzgfDca : Deep Learning framework in Java that supports the whole cycle: from data loading and preprocessing to building and tuning a variety of deep learning networks.

https://t.co/J4qMzPAZ6u Framework for defining machine learning models, including feature generation and transformations, as directed acyclic graphs (DAGs).

https://t.co/9IgKkSxPCq a machine learning library in Java that provides multi-class classification, regression, clustering, anomaly detection and multi-label classification.

https://t.co/EAqn2YngIE : TensorFlow Java API (experimental)

You May Also Like

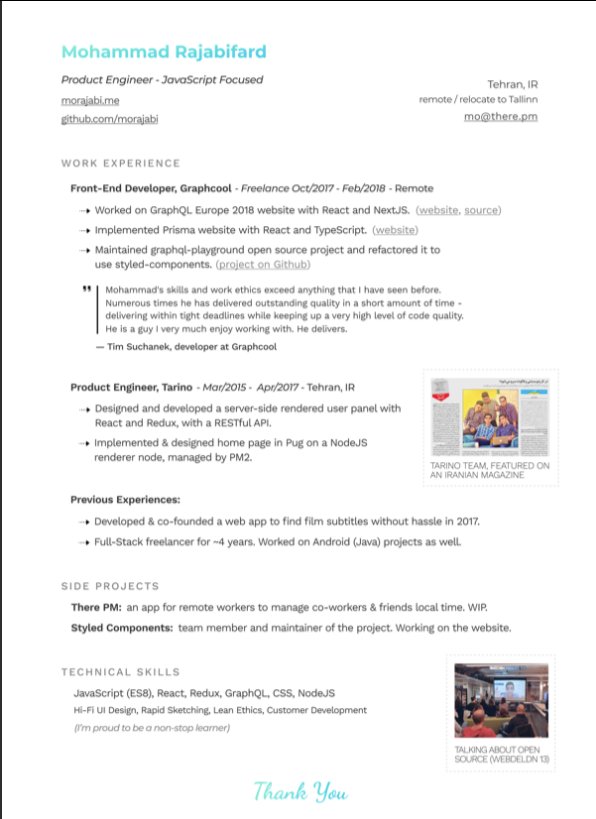

👨‍💻 Last resume I sent to a startup one year ago, sharing with you to get ideas:

- Forget what you don't have, make your strength bold

- Pick one work experience and explain what you did in detail w/ bullet points

- Write it towards the role you're applying for

- Give social proof

/thread

"But I got no work experience..."

Make an open source lib, make a small side project for yourself, do freelance work, or ask friends to work with them. No friends? Find friends on GitHub and Twitter.

Bonus points:

- Show you care about the company: I used the company's brand font and gradient in the resume for my name and "Thank You" note.

- Don't list 15 things and libraries you worked with, pick the ones most related to the role you're applying for.

- 🙅‍♂️ "copy cover letter"

"I got no friends, no work"

One practical way is to reach out to conferences and offer to make their website for free. But make sure to do it well. You'll get:

- a project for portfolio

- new friends

- work experience

- learnt new stuff

- new thing for Twitter bio

If you don't even have the skills yet, why not try your chance at @LambdaSchool? No? @freeCodeCamp. Still not? Pick something from here and learn https://t.co/7NPS1zbLTi

You'll feel very overwhelmed, no escape, just acknowledge it and keep pushing.

Keep dwelling on this:

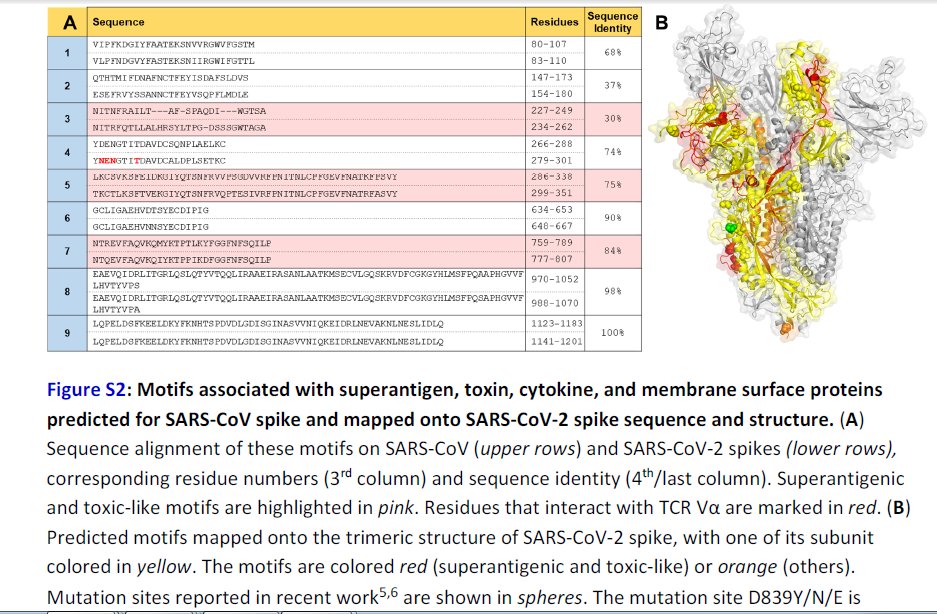

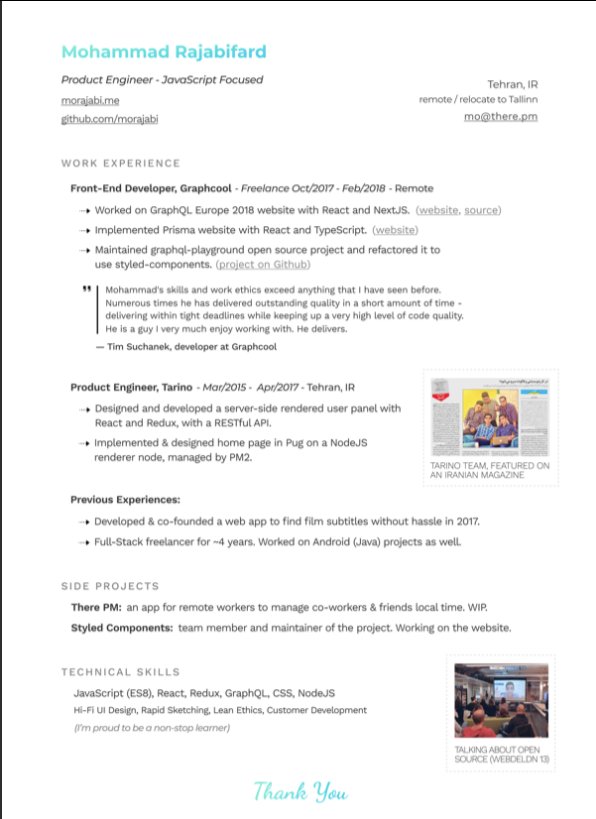

Further Examination of the Motif near PRRA Reveals Close Structural Similarity to the SEB Superantigen as well as Sequence Similarities to Neurotoxins and a Viral SAg.

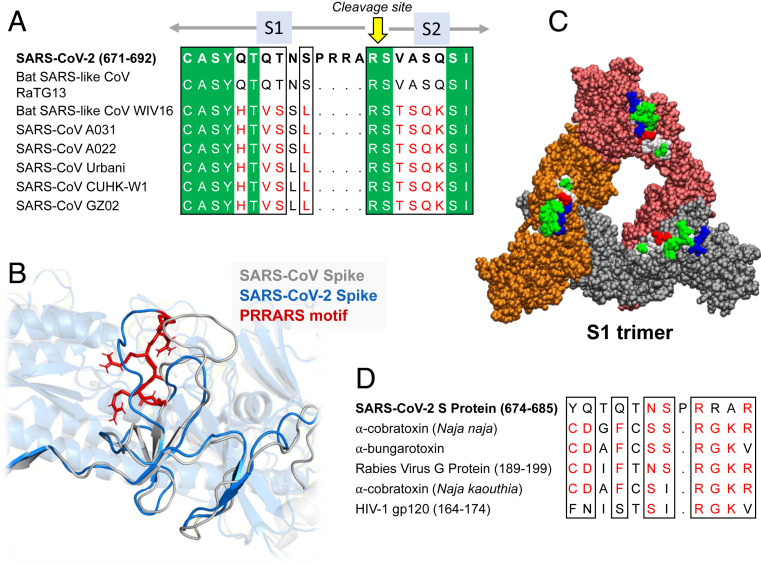

The insertion PRRA together with 7 sequentially preceding residues & succeeding R685 (conserved in β-CoVs) form a motif, Y674QTQTNSPRRAR685, homologous to those of neurotoxins from Ophiophagus (cobra) and Bungarus genera, as well as neurotoxin-like regions from three RABV strains (20) (Fig. 2D). We further noticed that the same segment bears close similarity to the HIV-1 glycoprotein gp120 SAg motif F164 to V174.

https://t.co/EwwJOSa8RK

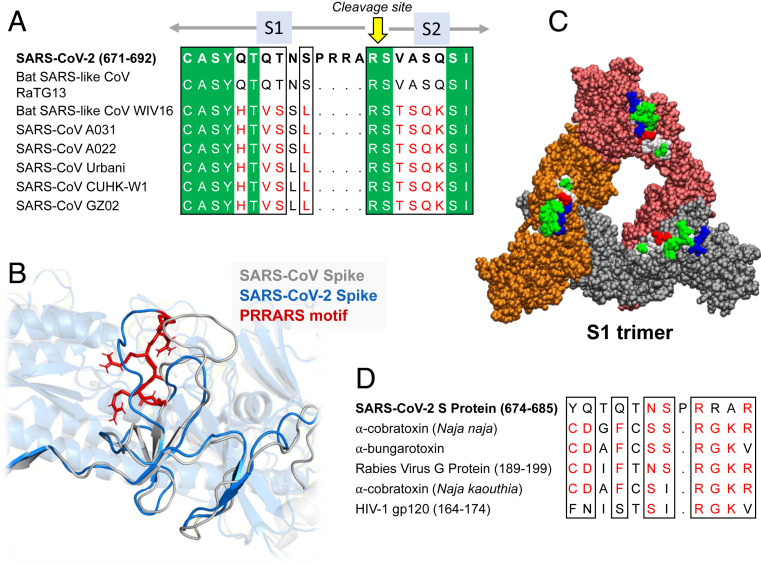

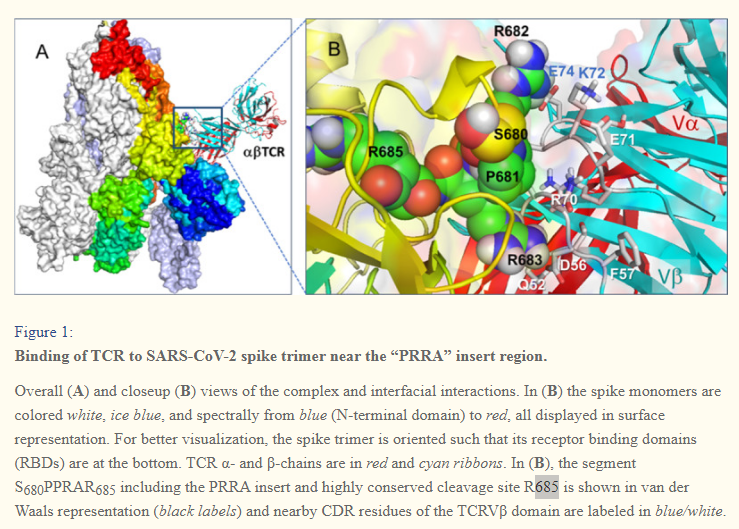

In (B), the segment S680PRRAR685 including the PRRA insert and highly conserved cleavage site *R685* is shown in van der Waals representation (black labels) and nearby CDR residues of the TCRVβ domain are labeled in blue/white

https://t.co/BsY8BAIzDa

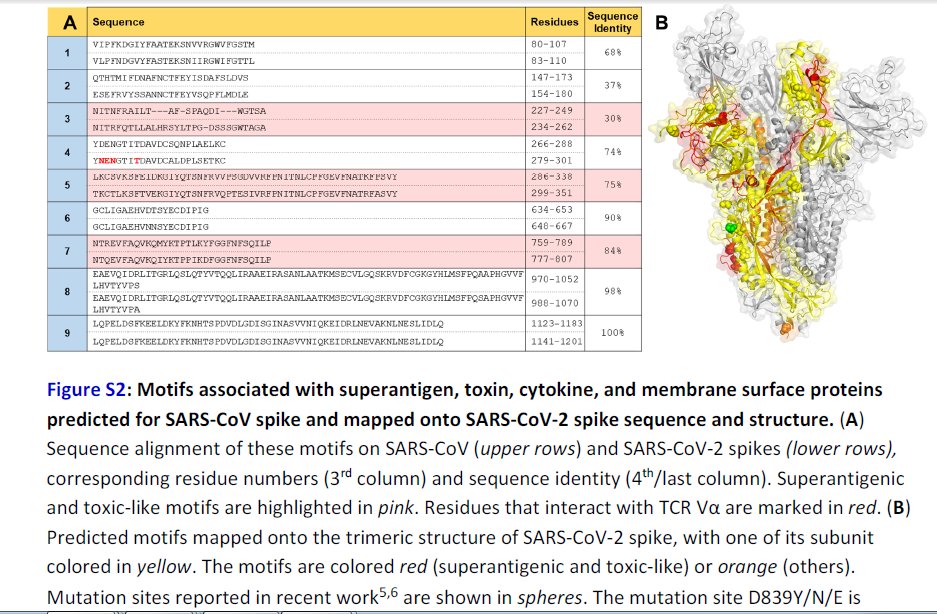

Sequence Identity %

https://t.co/BsY8BAIzDa

Y674 - QTQTNSPRRA - R685

Similar to neurotoxins from Ophiophagus (cobra) & Bungarus genera & neurotoxin-like regions from three RABV strains

T678 - NSPRRA - R685

Superantigenic core, consistently aligned against bacterial or viral SAgs
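To keep the residue numbering above straight, here is a small sketch that maps the quoted motif onto positions 674 to 685 and pulls out sub-segments by position. The helper names `segment` and `percent_identity` are hypothetical. The identity function uses one common convention (matches divided by the number of columns where neither sequence has a gap); the quoted figure may use a different denominator, so treat it as an illustration only.

```python
MOTIF = "YQTQTNSPRRAR"   # Y674 ... R685, as quoted above
START = 674              # protein position of the first motif residue

def segment(first, last, motif=MOTIF, start=START):
    """Return residues first..last (inclusive, protein numbering)."""
    if first < start or last >= start + len(motif) or first > last:
        raise ValueError("range outside the motif")
    return motif[first - start:last - start + 1]

def percent_identity(a, b):
    """Percent identical residues over gap-free columns of two aligned strings."""
    if len(a) != len(b):
        raise ValueError("sequences must be aligned to the same length")
    columns = [(x, y) for x, y in zip(a, b) if x != "-" and y != "-"]
    if not columns:
        return 0.0
    matches = sum(x == y for x, y in columns)
    return 100.0 * matches / len(columns)
```

For example, `segment(678, 685)` gives the T678 to R685 core and `segment(681, 684)` gives the PRRA insert itself.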
