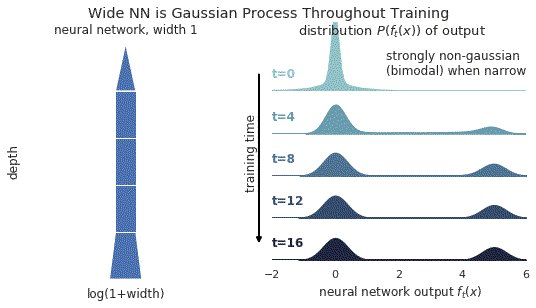

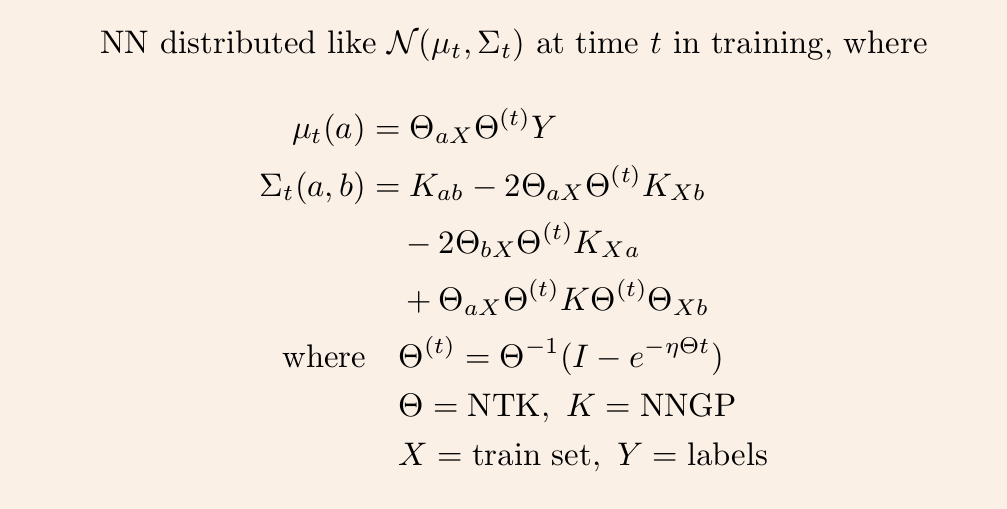

1/ An ∞-wide NN of *any architecture* is a Gaussian process (GP) at init. The NN in fact evolves linearly in function space under SGD, so it is a GP at *any time* during training. https://t.co/v1b6kndqCk With Tensor Programs, we can calculate this time-evolving GP w/o training any NN

https://t.co/6RO7VZDQNZ

https://t.co/OOoOMdPOsR
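The claim can be made concrete for the simplest case. Below is a minimal sketch, not the Tensor Programs machinery itself: it assumes a fully connected ReLU network in the NTK parametrization and uses the standard closed-form arc-cosine recursions to compute the infinite-width GP kernel at init (NNGP) and the neural tangent kernel (NTK). It then evaluates the mean of the time-t GP after full-batch gradient flow on the loss 0.5 * (f(x1) - y1)**2, a continuous-time stand-in for SGD, with a single training point. All function names and defaults (`depth=3`, `sw2=2.0`, `sb2=0.0`, `lr=1.0`) are illustrative choices, not taken from the thread.

```python
import math


def relu_moments(k11, k22, k12):
    """Closed-form Gaussian moments of ReLU (arc-cosine kernels of degree 1 and 0).

    For (u, v) ~ N(0, [[k11, k12], [k12, k22]]) returns
    (E[relu(u) * relu(v)], E[relu'(u) * relu'(v)]).
    Assumes k11, k22 > 0 (nonzero inputs or sb2 > 0).
    """
    norm = math.sqrt(k11 * k22)
    # Clamp the correlation to [-1, 1] so round-off cannot break acos.
    cos_t = max(-1.0, min(1.0, k12 / norm))
    theta = math.acos(cos_t)
    e_act = norm * (math.sin(theta) + (math.pi - theta) * cos_t) / (2 * math.pi)
    e_deriv = (math.pi - theta) / (2 * math.pi)
    return e_act, e_deriv


def nngp_and_ntk(x, xp, depth=3, sw2=2.0, sb2=0.0):
    """Infinite-width NNGP kernel K(x, xp) and NTK Theta(x, xp) of a ReLU MLP.

    depth: number of hidden layers; sw2, sb2: weight and bias variances
    (NTK parametrization assumed).
    """
    d = len(x)

    def dot(a, b):
        return sum(ai * bi for ai, bi in zip(a, b))

    # First-layer pre-activation covariances.
    k11 = sw2 * dot(x, x) / d + sb2
    k22 = sw2 * dot(xp, xp) / d + sb2
    k12 = sw2 * dot(x, xp) / d + sb2
    ntk = k12
    for _ in range(depth):
        e12, de12 = relu_moments(k11, k22, k12)
        e11, _ = relu_moments(k11, k11, k11)
        e22, _ = relu_moments(k22, k22, k22)
        new_k12 = sw2 * e12 + sb2
        # NTK recursion: Theta <- K_new + Theta * sw2 * E[relu' relu'].
        ntk = new_k12 + ntk * sw2 * de12
        k11, k22, k12 = sw2 * e11 + sb2, sw2 * e22 + sb2, new_k12
    return k12, ntk


def mean_prediction(x, x_train, y_train, t, lr=1.0, **kw):
    """Mean of the time-t GP at x after gradient flow on one training point."""
    _, th_x = nngp_and_ntk(x, x_train, **kw)
    _, th_tt = nngp_and_ntk(x_train, x_train, **kw)
    # Solves df/dt = -lr * Theta(., x_train) * (f(x_train) - y_train) with E[f_0] = 0.
    return th_x / th_tt * (1.0 - math.exp(-lr * th_tt * t)) * y_train


if __name__ == "__main__":
    x1, y1 = [1.0, 0.0], 2.0
    for t in (0.0, 0.1, 1.0, 10.0):
        print(t, mean_prediction([0.6, 0.8], x1, y1, t))
```

With one training point the matrix exponential of the general solution, Theta(x, X) Theta(X, X)^-1 (I - exp(-lr Theta(X, X) t)) Y, reduces to a scalar `exp`. Only the mean is computed here; the covariance of the time-t GP also involves the NNGP kernel.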

More from Data science

To my JVM friends looking to explore Machine Learning techniques - you don’t necessarily have to learn Python to do that. There are libraries you can use from the comfort of your JVM environment. 🧵👇

https://t.co/EwwOzgfDca : Deep learning framework in Java that supports the whole cycle: from data loading and preprocessing to building and tuning a variety of deep learning networks.

https://t.co/J4qMzPAZ6u : Framework for defining machine learning models, including feature generation and transformations, as directed acyclic graphs (DAGs).

https://t.co/9IgKkSxPCq : Machine learning library in Java that provides multi-class classification, regression, clustering, anomaly detection, and multi-label classification.

https://t.co/EAqn2YngIE : TensorFlow Java API (experimental)
