Student papers are overlooked, easy to understand, and have good compute constraints.

Tips for AI writers:

1. Spend 30% of your effort skimming all student ML papers from the past 3 years (e.g. Stanford NLP CS224n), and prototype your favorites.

The idea is everything. Pick an area you are interested in, ideally one with a visual aspect.

Give the project an edge. Apply it to something new and use FastAI or Keras to improve the accuracy by 5-30%.

Have a north star article in terms of structure and quality. Find one that stretches you to the limit of your ability. I used @copingbear’s style transfer article: https://t.co/OrR1B94t1w

Invest a week studying strategies for ranking on sites like HN and Reddit, then use them. If you have an interesting result and a great article, you've done the hard work.

Articles will market you 24/7 worldwide, so you want them to stay relevant for a decade. High-quality articles increase your reputation and spread more easily on the web.

cc @remiconnesson @mehtadata_

More from Data science

✨✨ BIG NEWS: We are hiring!! ✨✨

Amazing Research Software Engineer / Research Data Scientist positions within the @turinghut23 group at the @turinginst, at Standard (permanent) and Junior levels 🤩

👇 Below is a thread on who we are and what we do

We are a highly diverse and interdisciplinary group of around 30 research software engineers and data scientists 😎💻 👉 https://t.co/KcSVMb89yx #RSEng

We value expertise across many domains - members of our group have backgrounds in psychology, mathematics, digital humanities, biology, astrophysics and many other areas 🧬📖🧪📈🗺️⚕️🪐

https://t.co/zjoQDGxKHq

/ @DavidBeavan @LivingwMachines

In our everyday job we turn cutting-edge research into professionally usable software tools. Check out @evelgab's #LambdaDays 👩‍💻 presentation for some examples:

We create software packages to analyse data in a readable, reliable and reproducible fashion and contribute to the #opensource community, as @drsarahlgibson highlights in her contributions to @mybinderteam and @turingway: https://t.co/pRqXtFpYXq #ResearchSoftwareHour

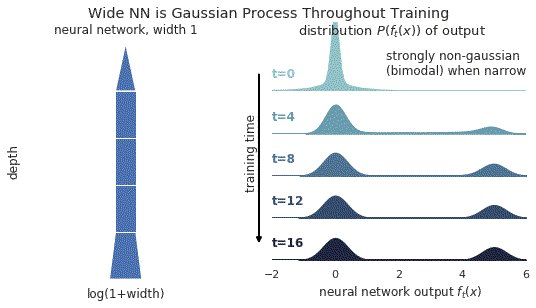

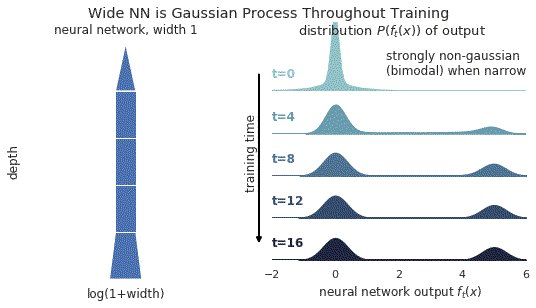

1/ A ∞-wide NN of *any architecture* is a Gaussian process (GP) at init. The NN in fact evolves linearly in function space under SGD, so it is a GP at *any time* during training. https://t.co/v1b6kndqCk With Tensor Programs, we can calculate this time-evolving GP w/o training any NN
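One way to see the "Gaussian at init" part of this claim numerically, for a single input and a single architecture only, is to sample many random networks and measure how Gaussian the output is. This is an illustration, not the Tensor Programs computation. It assumes a one-hidden-layer ReLU network f(x) = v · relu(Wx) / sqrt(width) with i.i.d. N(0, 1) weights and one fixed unit-norm input, and reports the sample excess kurtosis of f(x), which is 0 for a Gaussian. In this setup f(x) is a normalized sum of `width` i.i.d. terms, so its excess kurtosis should shrink roughly like 15 / width. `output_excess_kurtosis` is a hypothetical helper name.

```python
import numpy as np


def output_excess_kurtosis(width, samples=20000, d=3, seed=0):
    """Sample excess kurtosis of f(x) over random inits of f(x) = v . relu(W x) / sqrt(width).

    Assumptions (not from the thread): all weights i.i.d. N(0, 1),
    one fixed unit-norm input x in R^d. A Gaussian has excess kurtosis 0.
    """
    rng = np.random.default_rng(seed)
    x = np.ones(d) / np.sqrt(d)  # fixed input with ||x|| = 1
    outs = np.empty(samples)
    for s in range(samples):
        w = rng.normal(size=(width, d))  # hidden-layer weights
        v = rng.normal(size=width)       # readout weights
        outs[s] = v @ np.maximum(w @ x, 0.0) / np.sqrt(width)
    z = outs - outs.mean()
    # Fourth standardized moment minus 3.
    return np.mean(z**4) / np.mean(z**2) ** 2 - 3.0
```

For example, `output_excess_kurtosis(1)` should land well above 0 (around 15 in expectation), while `output_excess_kurtosis(512)` should be close to 0, consistent with the output distribution approaching a Gaussian as width grows.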

2/ In this gif, narrow relu networks have a high probability of initializing near the 0 function (because of relu) and getting stuck. This causes the function distribution to become multi-modal over time. However, for wide relu networks this is not an issue.
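The initialization half of this claim can be checked with a crude Monte Carlo count. This minimal sketch assumes details the thread does not give: 2-D inputs on the unit circle, 10 hidden layers, Gaussian weights with variance 2 / fan_in, and no biases. It counts how often a random network outputs exactly 0 on the whole input batch, which happens once some layer's ReLUs are all inactive on every input. It says nothing about the training dynamics shown in the gif. `dead_at_init_fraction` is a hypothetical helper name.

```python
import numpy as np


def dead_at_init_fraction(width, depth=10, trials=200, n_inputs=32, seed=0):
    """Fraction of random ReLU MLPs whose output is exactly 0 on every input in a batch.

    Assumptions (not from the thread): 2-D inputs on the unit circle,
    Gaussian weights with variance 2 / fan_in, no biases,
    `depth` hidden layers of size `width`.
    """
    rng = np.random.default_rng(seed)
    angles = np.linspace(0.0, 2 * np.pi, n_inputs, endpoint=False)
    x = np.stack([np.cos(angles), np.sin(angles)], axis=1)  # (n_inputs, 2)
    dead = 0
    for _ in range(trials):
        h = x
        for _ in range(depth):
            fan_in = h.shape[1]
            w = rng.normal(0.0, np.sqrt(2.0 / fan_in), size=(fan_in, width))
            h = np.maximum(h @ w, 0.0)
        out = h @ rng.normal(0.0, np.sqrt(1.0 / width), size=width)
        # Once a whole layer is inactive on every input, all later values are 0.
        dead += int(np.all(out == 0.0))
    return dead / trials
```

Under these assumptions, width 2 should give a substantial fraction of networks that start as the 0 function on the batch, while width 64 should essentially never do so.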

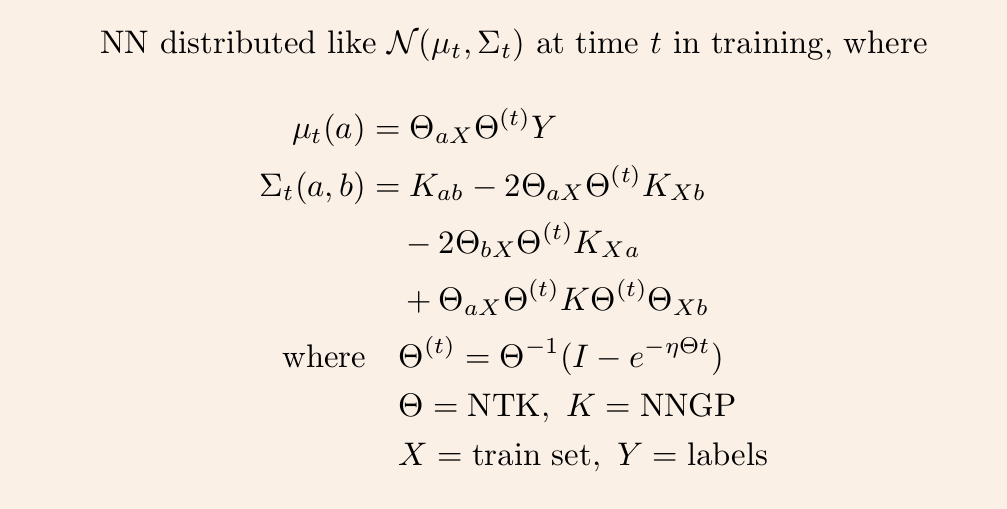

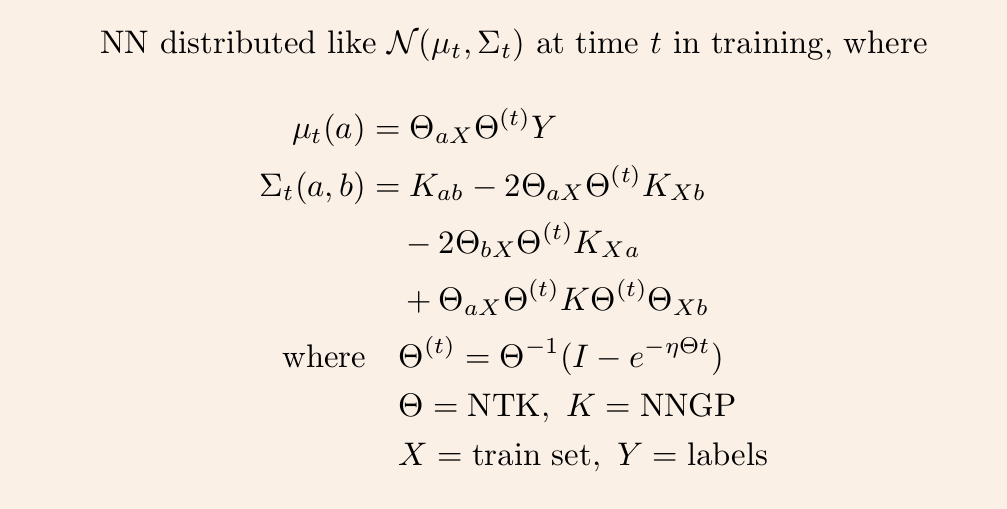

3/ This time-evolving GP depends on two kernels: the kernel describing the GP at init, and the kernel describing the linear evolution of this GP. The former is the NNGP kernel, and the latter is the Neural Tangent Kernel (NTK).

4/ Once we have these two kernels, we can derive the GP mean and covariance at any time t via straightforward linear algebra.
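As a sketch of that linear algebra, the function below uses the standard closed form for gradient flow on mean-squared-error loss (as in Lee et al., 2019). Assumptions: loss 0.5 * ||f(X) - y||^2, learning rate `lr`, a zero-mean GP prior with NNGP kernel K, and an NTK Θ that stays fixed during training. The thread's own normalization of time and learning rate may differ. `ntk_gp_moments` and its argument names are hypothetical.

```python
import numpy as np


def ntk_gp_moments(theta_xx, theta_sx, k_xx, k_sx, k_ss, y, t, lr=1.0):
    """Mean and covariance, at training time t, of the GP over outputs at test points.

    Assumes gradient flow on 0.5 * ||f(X) - y||^2 with learning rate lr,
    a zero-mean NNGP prior with kernel K at init, and a fixed NTK Theta.
      theta_xx: Theta(X, X), shape (n, n)    k_xx: K(X, X), shape (n, n)
      theta_sx: Theta(X*, X), shape (m, n)   k_sx: K(X*, X), shape (m, n)
      k_ss: K(X*, X*), shape (m, m)          y: training targets, shape (n,)
    """
    # Theta(X, X) is symmetric PSD, so diagonalize it: Theta = V diag(lam) V^T.
    lam, v = np.linalg.eigh(theta_xx)
    # Per eigenvalue, (1 - exp(-lr * lam * t)) / lam; its limit is lr * t as lam -> 0.
    decay = -np.expm1(-lr * t * lam)
    nonzero = np.abs(lam) > 1e-12
    coef = np.where(nonzero, decay / np.where(nonzero, lam, 1.0), lr * t)
    # op = Theta^{-1} (I - exp(-lr * Theta * t)), a symmetric (n, n) matrix.
    op = (v * coef) @ v.T
    a = theta_sx @ op  # Theta(X*, X) Theta^{-1} (I - exp(-lr * Theta * t))
    mean = a @ y
    # K(X*, X*) + A K(X, X) A^T - (A K(X, X*) + its transpose)
    cov = k_ss + a @ k_xx @ a.T - (a @ k_sx.T + k_sx @ a.T)
    return mean, cov
```

At t = 0 this returns the prior (zero mean, covariance K(X*, X*)). As t grows, the mean tends to the kernel-regression predictor Θ(X*, X) Θ(X, X)⁻¹ y; evaluated at the training points themselves, the mean approaches y and the covariance approaches 0. Diagonalizing Θ once makes every time t cheap to evaluate.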

5/ So it remains to calculate the NNGP kernel and NT kernel for any given architecture. The first is described in https://t.co/cFWfNC5ALC and in this thread
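The thread computes these kernels for general architectures with Tensor Programs. For the simplest case, a fully connected ReLU MLP, both kernels follow a well-known closed-form layer-by-layer recursion built from the degree-1 and degree-0 arc-cosine kernels. The sketch below implements that recursion, not the Tensor Programs machinery, and assumes NTK parameterization with weight variance `sigma_w2` (default 2) and bias variance `sigma_b2` (default 0). `relu_mlp_kernels` is a hypothetical name.

```python
import numpy as np


def relu_mlp_kernels(x, depth, sigma_w2=2.0, sigma_b2=0.0):
    """NNGP and NTK Gram matrices of an infinitely wide fully connected ReLU MLP.

    x: inputs, shape (n, d), rows assumed nonzero; depth: number of hidden layers.
    Assumes NTK parameterization with weight variance sigma_w2, bias variance sigma_b2.
    Returns (nngp, ntk), each of shape (n, n), for the network output.
    """
    d = x.shape[1]
    # Kernel of the first-layer pre-activations.
    sigma = sigma_w2 * (x @ x.T) / d + sigma_b2
    ntk = sigma.copy()
    for _ in range(depth):
        std = np.sqrt(np.diag(sigma))
        norms = np.outer(std, std)
        cos_t = np.clip(sigma / norms, -1.0, 1.0)
        angle = np.arccos(cos_t)
        # E[relu(u) relu(v)] for (u, v) ~ N(0, sigma): degree-1 arc-cosine kernel.
        e_relu = norms * (np.sin(angle) + (np.pi - angle) * cos_t) / (2 * np.pi)
        # E[relu'(u) relu'(v)]: degree-0 arc-cosine kernel.
        e_step = (np.pi - angle) / (2 * np.pi)
        sigma = sigma_w2 * e_relu + sigma_b2
        # Theta^{l+1} = Sigma^{l+1} + Theta^{l} * dotSigma^{l+1} (elementwise).
        ntk = sigma + ntk * (sigma_w2 * e_step)
    return sigma, ntk
```

With the defaults (sigma_w2 = 2, sigma_b2 = 0), the NNGP diagonal stays at 2‖x‖²/d through every layer, and the NTK diagonal grows to (depth + 1) times that value, which is a quick sanity check on the recursion.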

You May Also Like

Stan Lee’s fictional superheroes lived in the real New York. Here’s where they lived, and why. https://t.co/oV1IGGN8R6

Stan Lee, who died Monday at 95, was born in Manhattan and graduated from DeWitt Clinton High School in the Bronx. His pulp-fiction heroes have come to define much of popular culture in the early 21st century.

Tying Marvel’s stable of pulp-fiction heroes to a real place, New York, served as a counterbalance to the sometimes gravity-challenged action and the improbability of the stories. That was just what Stan Lee wanted. https://t.co/rDosqzpP8i

The New York universe hooked readers. And the artists drew what they were familiar with, which made the Marvel universe authentic-looking, down to the water towers atop many of the buildings. https://t.co/rDosqzpP8i

The Avengers Mansion was a Beaux-Arts palace. Fans know it as 890 Fifth Avenue. The Frick Collection, which now occupies the place, uses the address of the front door: 1 East 70th Street.
