I was reading something that suggested that trauma "tries" to spread itself, i.e., that the reason intergenerational trauma is a thing is that the traumatized part in a parent will take action to recreate that trauma in the child.

(More like, "here's a story for how this could work.")

Why on earth would trauma be agenty, in that way? It sounds like too much to swallow.

If some traumas try to replicate themselves in other minds but most don't, pretty soon the world will be awash in the replicator type.

It's unclear how high the fidelity of transmission is.

Thinking through this has given me a new appreciation of what @DavidDeutschOxf calls "anti-rational memes". I think he might be onto something in saying that they are more or less at the core of all our problems on earth.


I started by simply stating that I thought that the arguments that I had heard so far don't hold up, and seeing if anyone was interested in going into it in depth with

CritRats!

— Eli Tyre (@EpistemicHope) December 26, 2020

I think AI risk is a real existential concern, and I claim that the CritRat counterarguments that I've heard so far (keywords: universality, person, moral knowledge, education, etc.) don't hold up.

Anyone want to hash this out with me? https://t.co/Sdm4SSfQZv

So far, a few people have engaged pretty extensively with me, for instance by scheduling video calls to talk about some of this stuff, or through long private chats.

(Links to some of the public ones are at the bottom of the thread.)

But in addition to that, there has been a much more sprawling conversation happening on twitter, involving a much larger number of people.

Having talked to a number of people, I then offered a paraphrase of the basic counterargument that I was hearing from people of the CritRat persuasion.

ELI'S PARAPHRASE OF THE CRIT RAT STORY ABOUT AGI AND AI RISK

— Eli Tyre (@EpistemicHope) January 5, 2021

There are two things that you might call "AI".

The first is non-general AI, which is a program that follows some pre-set algorithm to solve a pre-set problem. This includes modern ML.


In general, I am super up for short (1 to 10 hour) adversarial collaborations.

— Eli Tyre (@EpistemicHope) December 23, 2020

If you think I'm wrong about something, and want to dig into the topic with me to find out what's up / prove me wrong, DM me.

For instance, while I heartily agree with lots of what is said in this video, I don't think its conclusion about how to prevent (the bad kind of) human extinction from AGI follows.

There are a number of reasons to think that AGI will be more dangerous than most people are, despite both people and AGIs being qualitatively the same sort of thing (explanatory knowledge-creating entities).

And I maintain that, because of practical/quantitative (not fundamental/qualitative) differences, the development of AGI / TAI is very likely to destroy the world by default.

(I'm not clear on exactly how much disagreement there is. In the video above, Deutsch says "Building an AGI with perverse emotions that lead it to immoral actions would be a crime."


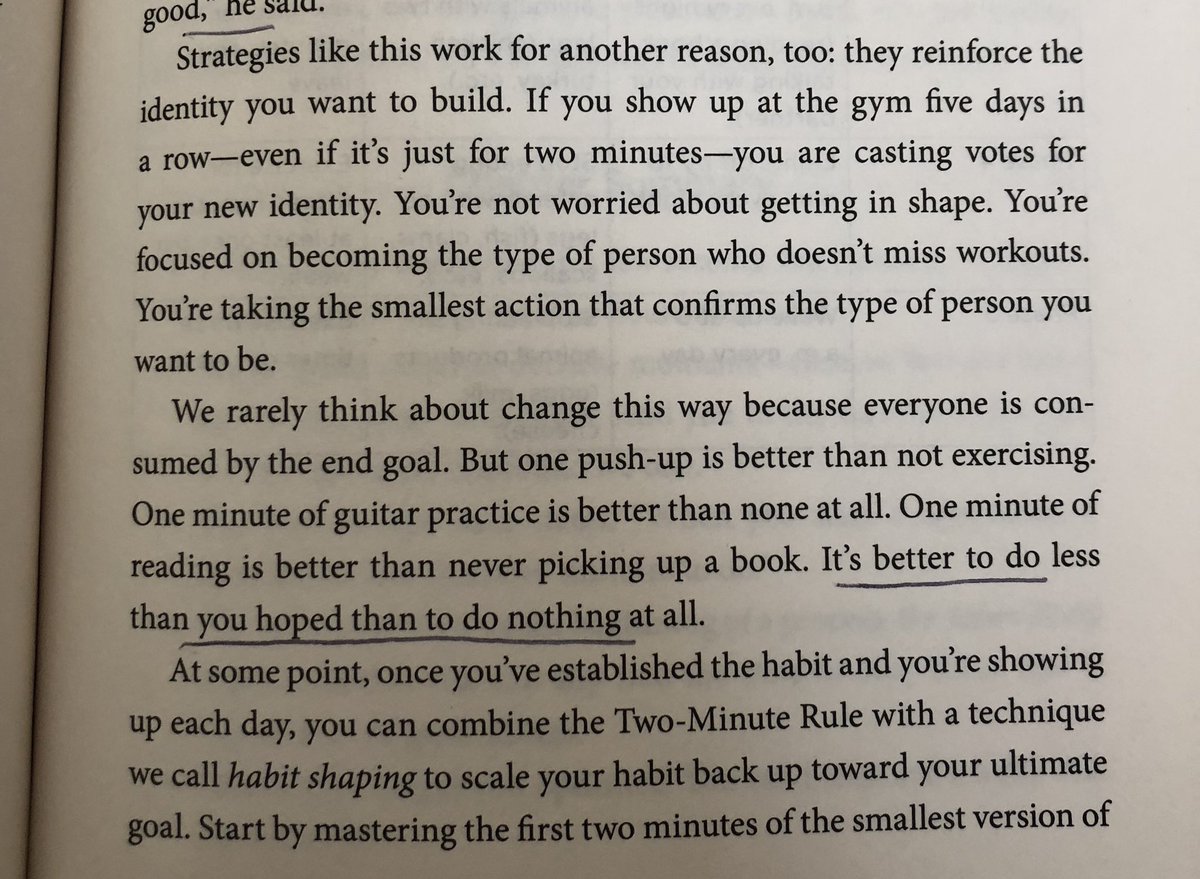

1. Atomic Habits by @JamesClear

“If you show up at the gym 5 days in a row—even for 2 minutes—you're casting votes for your new identity. You’re not worried about getting in shape. You’re focused on becoming the type of person who doesn’t miss workouts”

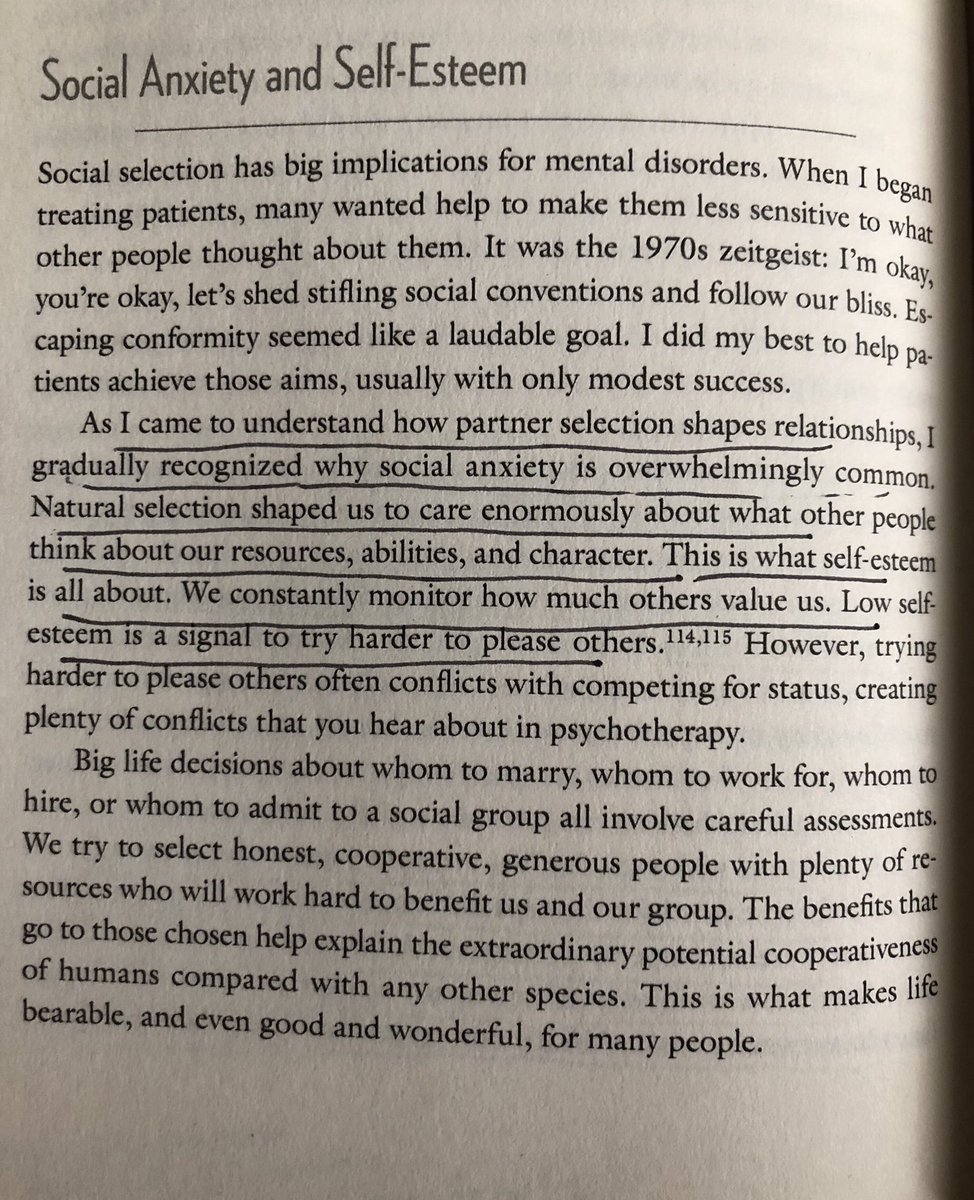

2. Good Reasons for Bad Feelings

https://t.co/KZDqte19nG

“social anxiety is overwhelmingly common. Natural selection shaped us to care enormously what other people think...We constantly monitor how much others value us...Low self-esteem is a signal to try harder to please others”

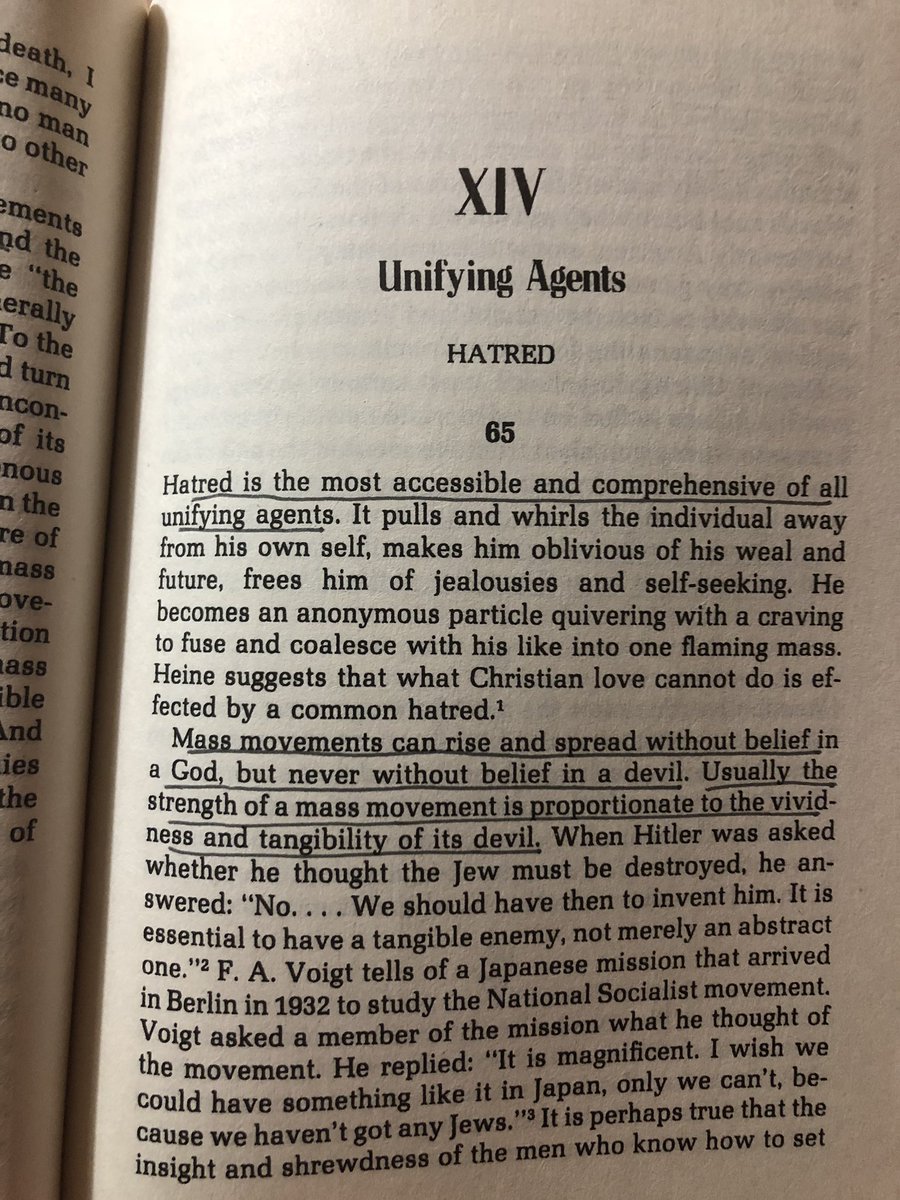

The True Believer by Eric Hoffer

https://t.co/uZT4kdhzvZ

“Hatred is the most accessible and comprehensive of all unifying agents...Mass movements can rise and spread without belief in a God, but never without belief in a devil.”

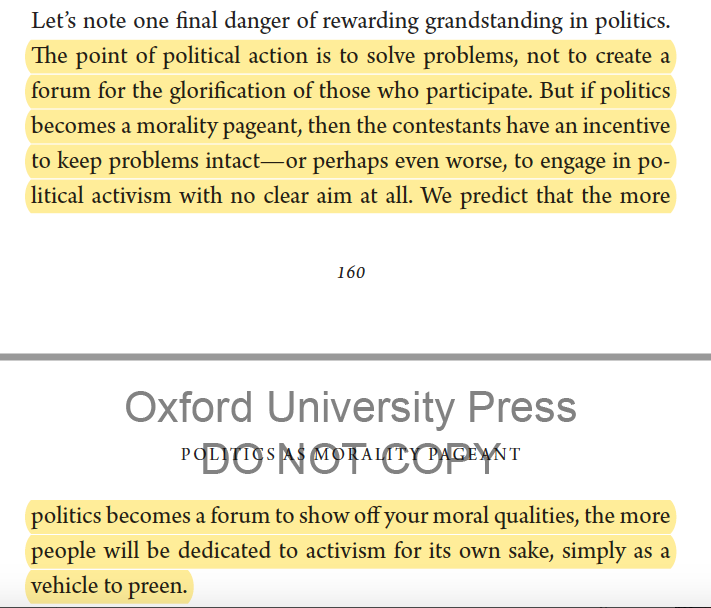

Grandstanding

https://t.co/4Of58AZUj8

"if politics becomes a morality pageant, then the contestants have an incentive to keep problems intact...politics becomes a forum to show off moral qualities...people will be dedicated to activism for its own sake, as a vehicle to preen"

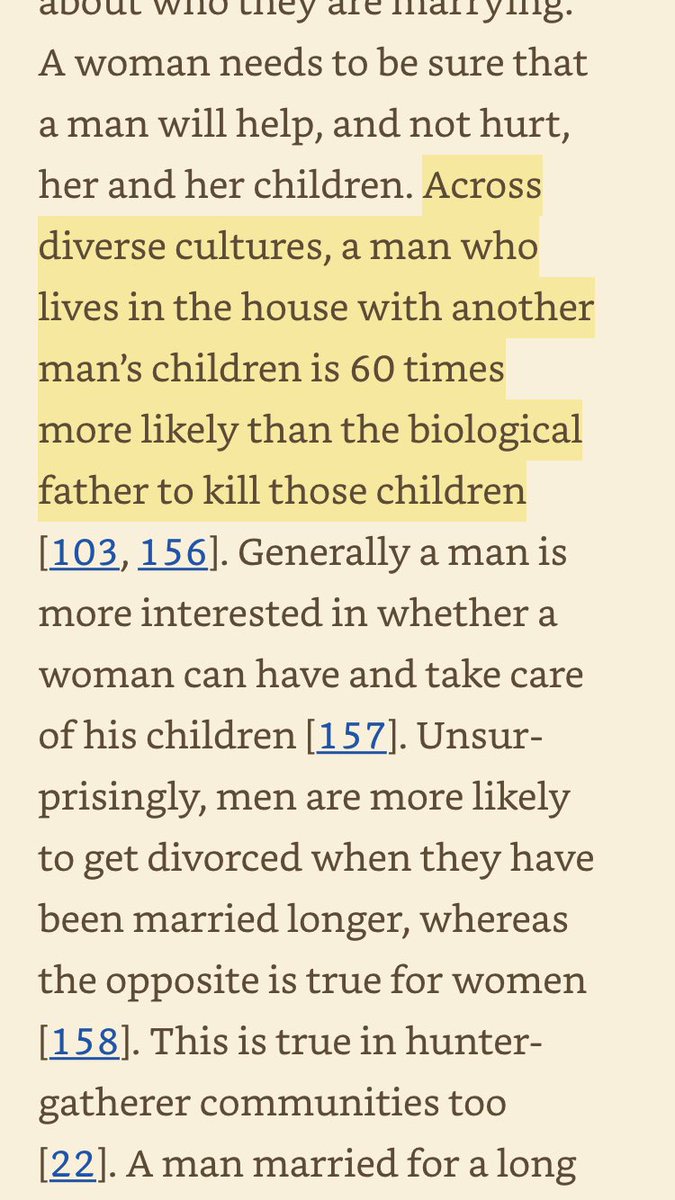

Warriors and Worriers by Joyce Benenson

https://t.co/yLC4eGHEd4

“Across diverse cultures, a man who lives in the house with another man’s children is about 60 times more likely than the biological father to kill those children.”

The level of vitriol in the replies is a new experience for me on here. I love Twitter, but this is the dark side of it.

Thread...

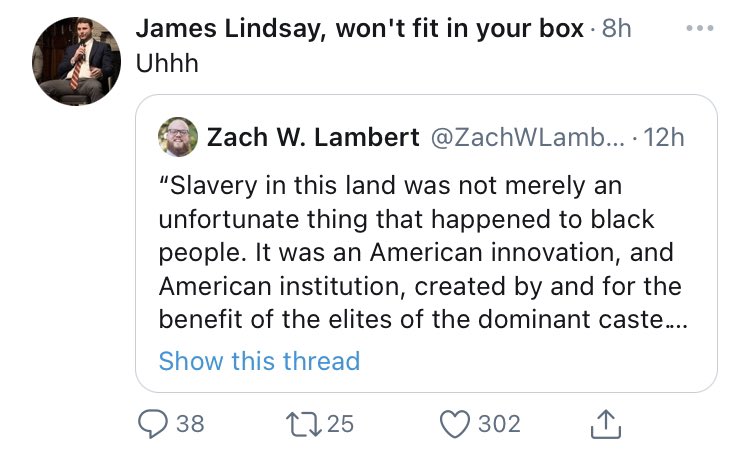

“Slavery in this land was not merely an unfortunate thing that happened to black people. It was an American innovation, an American institution, created by and for the benefit of the elites of the dominant caste.” @Isabelwilkerson

— Zach W. Lambert (@ZachWLambert) February 11, 2021

First, this quote is from a book which examines castes and slavery throughout history. Obviously Wilkerson isn’t claiming slavery was invented by America.

She says, “Slavery IN THIS LAND...” wasn’t happenstance. American chattel slavery was purposefully crafted and carried out.

That’s not a “hot take” or a fringe opinion. It’s a fact with which any reputable historian or scholar agrees.

Second, this is a perfect example of how nefarious folks operate here on Twitter...

J*mes Linds*y, P*ter Bogh*ssian and others like them purposefully misrepresent something (or just outright ignore what it actually says, as they do in this case) and then feed it to their large, angry following so they will attack.

The attacks are rarely about ideas or beliefs, because purposefully misrepresenting someone’s argument prevents that from happening. Instead, the attacks are directed at the person.