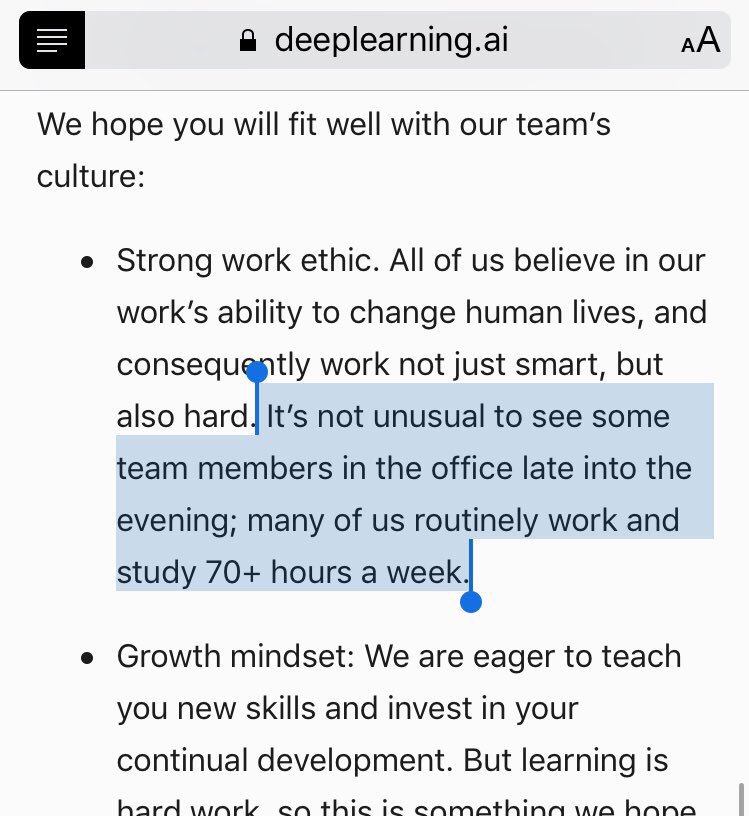

For the record: that's not a "strong work ethic."

That's exploiting young unattached engineers and spinning it as "team culture."

Toxic 💀💀

If your company is asking for sustained 70+ hrs they're hurting you & they know it.

CEO/C-team & the short-gain-at-long-expense tactic they chose.

They want to burn you up for their gain.

More from All

How can we use language supervision to learn better visual representations for robotics?

Introducing Voltron: Language-Driven Representation Learning for Robotics!

Paper: https://t.co/gIsRPtSjKz

Models: https://t.co/NOB3cpATYG

Evaluation: https://t.co/aOzQu95J8z

🧵👇(1 / 12)

Videos of humans performing everyday tasks (Something-Something-v2, Ego4D) offer a rich and diverse resource for learning representations for robotic manipulation.

Yet an underused part of these datasets is the rich natural-language annotations accompanying each video. (2/12)

The Voltron framework offers a simple way to use language supervision to shape representation learning, building off of prior work in representations for robotics like MVP (https://t.co/Pb0mk9hb4i) and R3M (https://t.co/o2Fkc3fP0e).

The secret is *balance* (3/12)

Starting with a masked autoencoder over frames from these video clips, make a choice:

1) Condition on language and improve our ability to reconstruct the scene.

2) Generate language given the visual representation and improve our ability to describe what's happening. (4/12)

By trading off *conditioning* and *generation*, we show that we can 1) learn better representations than prior methods, and 2) explicitly shape the balance of low- and high-level features captured.

Why is the ability to shape this balance important? (5/12)
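
One way to picture the trade-off between *conditioning* and *generation* is as a single mixing weight between two training losses. The snippet below is only an illustrative sketch, not the objective from the Voltron paper: the function name `blended_loss`, the parameter `alpha`, and the idea of a simple weighted sum are all assumptions made here for illustration.

```python
def blended_loss(reconstruction_loss: float, generation_loss: float, alpha: float) -> float:
    """Blend two losses with a hypothetical mixing weight `alpha`.

    alpha = 1.0 -> only the language-conditioned reconstruction loss counts.
    alpha = 0.0 -> only the language generation loss counts.
    Values in between trade one off against the other.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    return alpha * reconstruction_loss + (1.0 - alpha) * generation_loss


# Example values are made up; they only show how the weight shifts the balance.
for alpha in (1.0, 0.5, 0.0):
    print(alpha, blended_loss(2.0, 4.0, alpha))
```

Under this assumption, moving `alpha` toward 1 favors reconstructing the scene, and moving it toward 0 favors describing what is happening.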

You May Also Like

The YouTube algorithm that I helped build in 2011 still recommends the flat earth theory by the *hundreds of millions*. This investigation by @RawStory shows some of the real-life consequences of this badly designed AI.

This spring at SxSW, @SusanWojcicki promised "Wikipedia snippets" on debated videos. But they didn't put them on flat earth videos, and instead @YouTube is promoting merchandising such as "NASA lies - Never Trust a Snake". 2/

A few examples of flat earth videos that were promoted by YouTube #today:

https://t.co/TumQiX2tlj 3/

https://t.co/uAORIJ5BYX 4/

https://t.co/yOGZ0pLfHG 5/

Flat Earth conference attendees explain how they have been brainwashed by YouTube and Infowars https://t.co/gqZwGXPOoc

— Raw Story (@RawStory) November 18, 2018

These 10 threads will teach you more than reading 100 books

Five billionaires share their top lessons on startups, life and entrepreneurship (1/10)

10 competitive advantages that will trump talent (2/10)

Some harsh truths you probably don’t want to hear (3/10)

10 significant lies you’re told about the world (4/10)

Five billionaires share their top lessons on startups, life and entrepreneurship (1/10)

I interviewed 5 billionaires this week

— GREG ISENBERG (@gregisenberg) January 23, 2021

I asked them to share their lessons learned on startups, life and entrepreneurship:

Here's what they told me:

10 competitive advantages that will trump talent (2/10)

To outperform, you need serious competitive advantages.

— Sahil Bloom (@SahilBloom) March 20, 2021

But contrary to what you have been told, most of them don't require talent.

10 competitive advantages that you can start developing today:

Some harsh truths you probably don’t want to hear (3/10)

I’ve gotten a lot of bad advice in my career and I see even more of it here on Twitter.

— Nick Huber (@sweatystartup) January 3, 2021

Time for a stiff drink and some truth you probably don’t want to hear.

👇👇

10 significant lies you’re told about the world (4/10)

THREAD: 10 significant lies you're told about the world.

— Julian Shapiro (@Julian) January 9, 2021

On startups, writing, and your career: