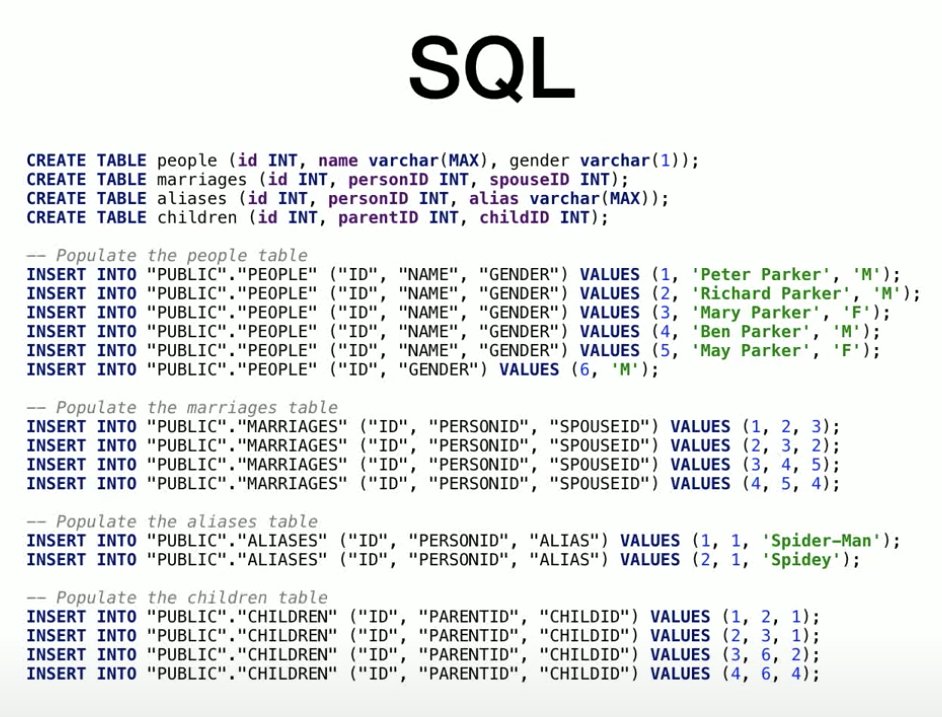

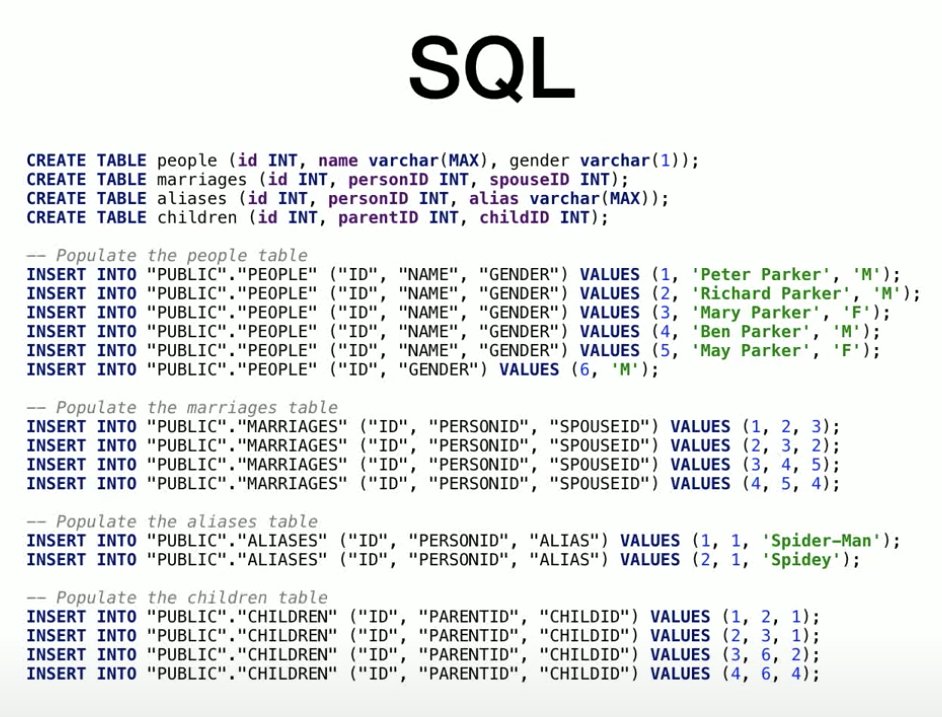

For people curious about the Roam API and confused by the syntax, or interested in why Conor went with Datomic/Datascript and not a traditional database, this older talk by Roam developer @mark_bastian is a great overview.

You should be able to model the entire Spiderman story in Roam.

Page title: Peter Parker

Child of:: [[Richard Parker]] [[Mary Parker]]

Aliases:: [[Spidey]]

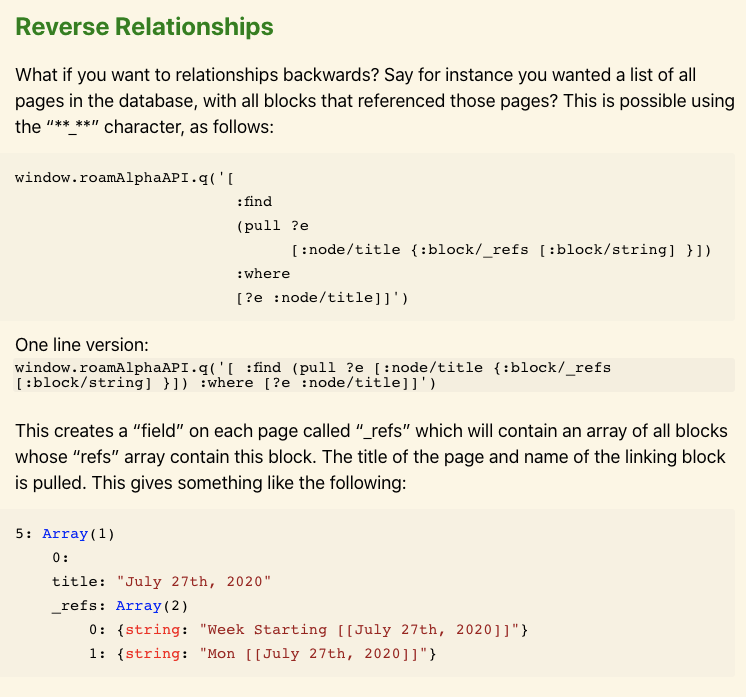

etc., and run these kinds of queries:

"Show me companies in Boise, Idaho, founded by women, whose valuation is lower than 10X ARR"

"Show me a graph of my sleep quality versus days in which I ate foods that had gluten in them or not" (where [[bread]] has a page with ingredients::).
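To make the first query concrete, here is a rough sketch of the idea behind Datomic/Datascript-style querying: store everything as (entity, attribute, value) facts and match patterns with shared variables across them. This is plain Python, not Roam's API and not Datascript itself; the attribute names (`child-of`, `located-in`, `founded-by`, etc.), the companies, and the numbers are all made up for illustration.

```python
# Hypothetical facts as (entity, attribute, value) triples, in the spirit of
# Datomic/Datascript datoms. None of this is Roam's actual schema or API.
FACTS = [
    ("Peter Parker", "child-of", "Richard Parker"),
    ("Peter Parker", "child-of", "Mary Parker"),
    ("Peter Parker", "alias", "Spidey"),
    ("Acme", "located-in", "Boise, Idaho"),
    ("Acme", "founded-by", "Alice"),
    ("Acme", "valuation", 40_000_000),
    ("Acme", "arr", 5_000_000),
    ("Globex", "located-in", "Boise, Idaho"),
    ("Globex", "founded-by", "Bob"),
    ("Globex", "valuation", 10_000_000),
    ("Globex", "arr", 4_000_000),
    ("Initech", "located-in", "Boise, Idaho"),
    ("Initech", "founded-by", "Carol"),
    ("Initech", "valuation", 90_000_000),
    ("Initech", "arr", 6_000_000),
    ("Alice", "gender", "female"),
    ("Bob", "gender", "male"),
    ("Carol", "gender", "female"),
]


def is_var(term):
    """Variables are strings starting with '?', as in Datalog queries."""
    return isinstance(term, str) and term.startswith("?")


def match(pattern, fact, bindings):
    """Unify one pattern with one fact; return extended bindings or None."""
    new = dict(bindings)
    for term, value in zip(pattern, fact):
        if is_var(term):
            if term in new and new[term] != value:
                return None  # variable already bound to something else
            new[term] = value
        elif term != value:
            return None  # constant does not match
    return new


def query(patterns, facts=FACTS):
    """Join the patterns left to right, keeping every consistent binding."""
    results = [{}]
    for pattern in patterns:
        next_results = []
        for bindings in results:
            for fact in facts:
                extended = match(pattern, fact, bindings)
                if extended is not None:
                    next_results.append(extended)
        results = next_results
    return results


def boise_companies_founded_by_women_below(multiple, facts=FACTS):
    """Companies in Boise, founded by women, valued below multiple * ARR."""
    rows = query(
        [
            ("?company", "located-in", "Boise, Idaho"),
            ("?company", "founded-by", "?founder"),
            ("?founder", "gender", "female"),
            ("?company", "valuation", "?valuation"),
            ("?company", "arr", "?arr"),
        ],
        facts,
    )
    return sorted(
        {r["?company"] for r in rows if r["?valuation"] < multiple * r["?arr"]}
    )


parents = [r["?p"] for r in query([("Peter Parker", "child-of", "?p")])]
cheap = boise_companies_founded_by_women_below(10)
```

The point is that `Child of::` and `Aliases::` lines are just facts about a page, so a question that hops from company to founder to founder's gender becomes a handful of patterns sharing variables, rather than a set of hand-written table joins.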

More from Tech

Thought I'd put a thread together of some resources & people I consider really valuable & insightful for anyone considering or just starting out on their @SorareHQ journey. It's by no means comprehensive, this community is super helpful so no offence to anyone I've missed off...

1) Get yourself on the official Sorare Discord group https://t.co/1CWeyglJhu, the forum is always full of interesting debate. Got a question? Put it on the relevant thread & it's usually answered in minutes. This is also a great place to engage directly with the @SorareHQ team.

2) Bury your head in @HGLeitch's @SorareData & get to grips with all the collated information you have to hand FOR FREE! IMO it's vital for price-checking, scouting & SO5 team building, plus they host the forward-thinking SO11 and SorareData Cups 🏆

3) Get on YouTube 📺, subscribe to @Qu_Tang_Clan's channel https://t.co/1ZxMsQR1kq & engross yourself in hours of Sorare tutorials & videos. There's a good crowd that logs in to the live Gameweek shows, where you get to see Quinny scratching his head/beard over team selection.

4) Make sure to follow & give a listen to the @Sorare_Podcast on the streaming service of your choice 🔊, weekly shows are always insightful with great guests. Worth listening to the old episodes too as there's loads of information you'll take from them.
