To understand artificial intelligence, machine learning, and deep learning, we need to start at the very basics.

🧵🧵🧵🧵 👇

Artificial Intelligence = 🖥️ + 🧠

When can you expect your fridge & mobile phone to revolt against you? (Spoiler Alert: Not in the foreseeable future).

Please do retweet the thread & help me spread the knowledge to more people.

Person 1: Let's go watch The Dark Knight. It's our marriage anniversary!

Person 2: I don't like going out at night. It's too scary & depressing.

Person 1: Oh, what I meant was watching the movie "The Dark Knight".

Person 1: Thanks honey, you're the best :D.

Person 1: As serious as Harvey Dent. As serious as Gautam Gambhir's surname.

Person 2: Okay, I don't know who they are, and obviously I was being sarcastic.

But it's more than that. In the language in which computers talk, every string has a unique, precise way to be understood as a 'sentence' (also known as a computer program). No ambiguity. No ambiguity.

This is the stereotypical first program that anyone new to Computer Science writes, here in one of the computer programming languages: Python.

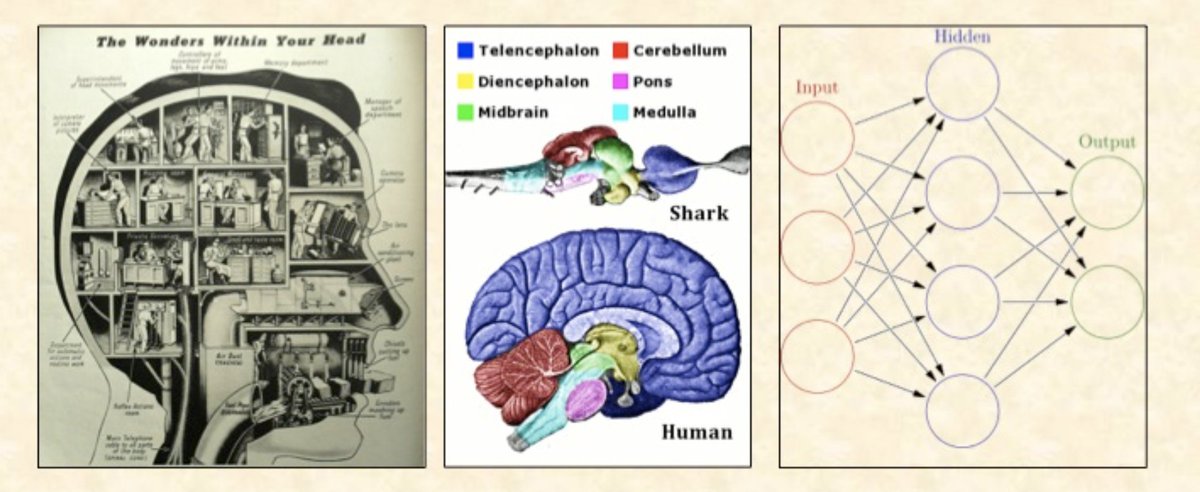
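The screenshot is not reproduced as text here. Assuming it shows the conventional "Hello, World!" program (the exact wording in the image is an assumption), a minimal version looks like this:

```python
# The conventional first program: put a greeting on the screen.
# Assumption: the exact text in the original screenshot is not known.
message = "Hello, World!"
print(message)
```

There is exactly one way for the computer to read these two lines: store a string, then display it. That is the "no ambiguity" property in action.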

As old as our tryst with computers is our yearning to see them do 'intelligent' things. This was the first time that humans turned God, and we wanted to build our progeny in our own image: to give it the gift of intelligence.

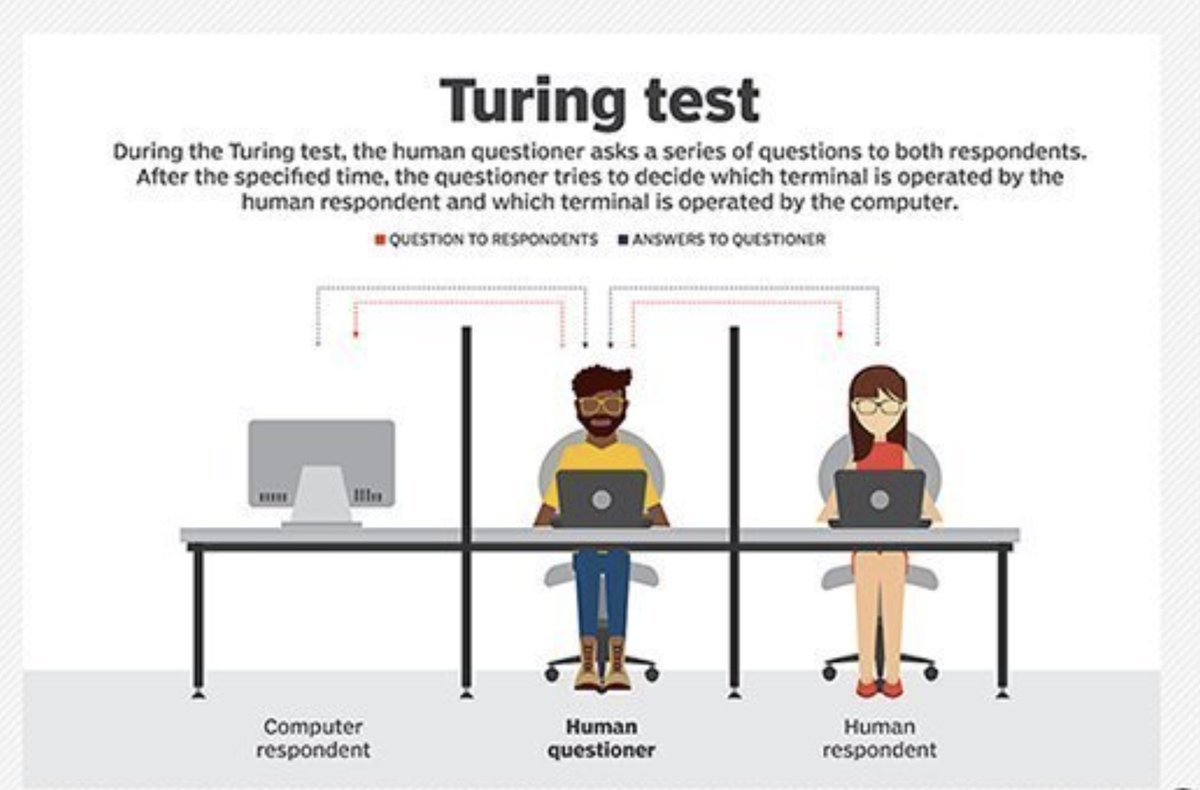

Artificial Intelligence: Any style of programming which enables computers to mimic humans.

So, through a limited set of atomic (or basic) programs we can represent an exponentially large set of outcomes.

Good resource: https://t.co/Yn7pV45yip

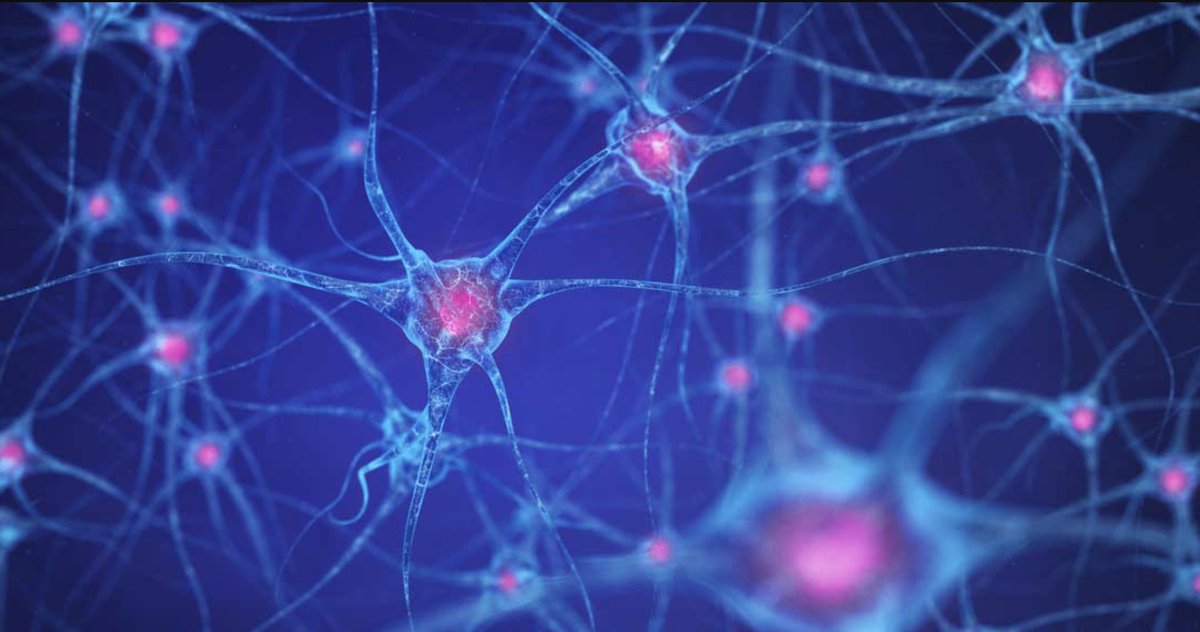

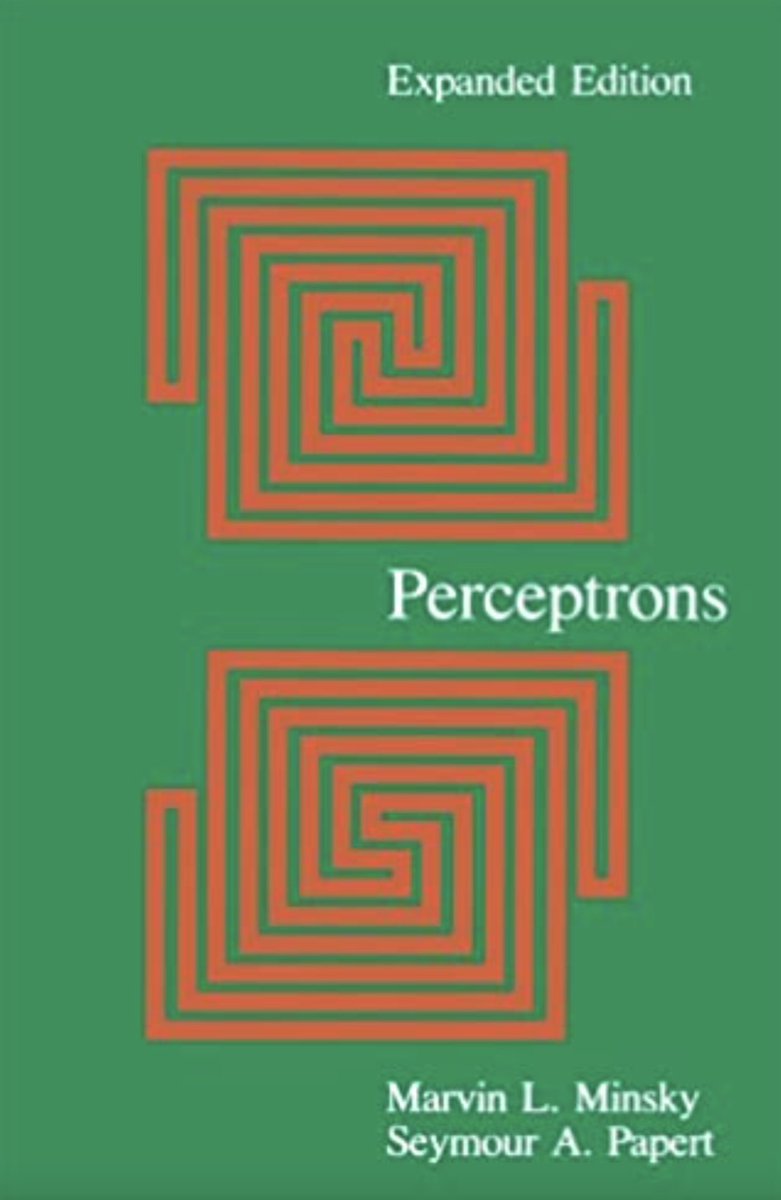

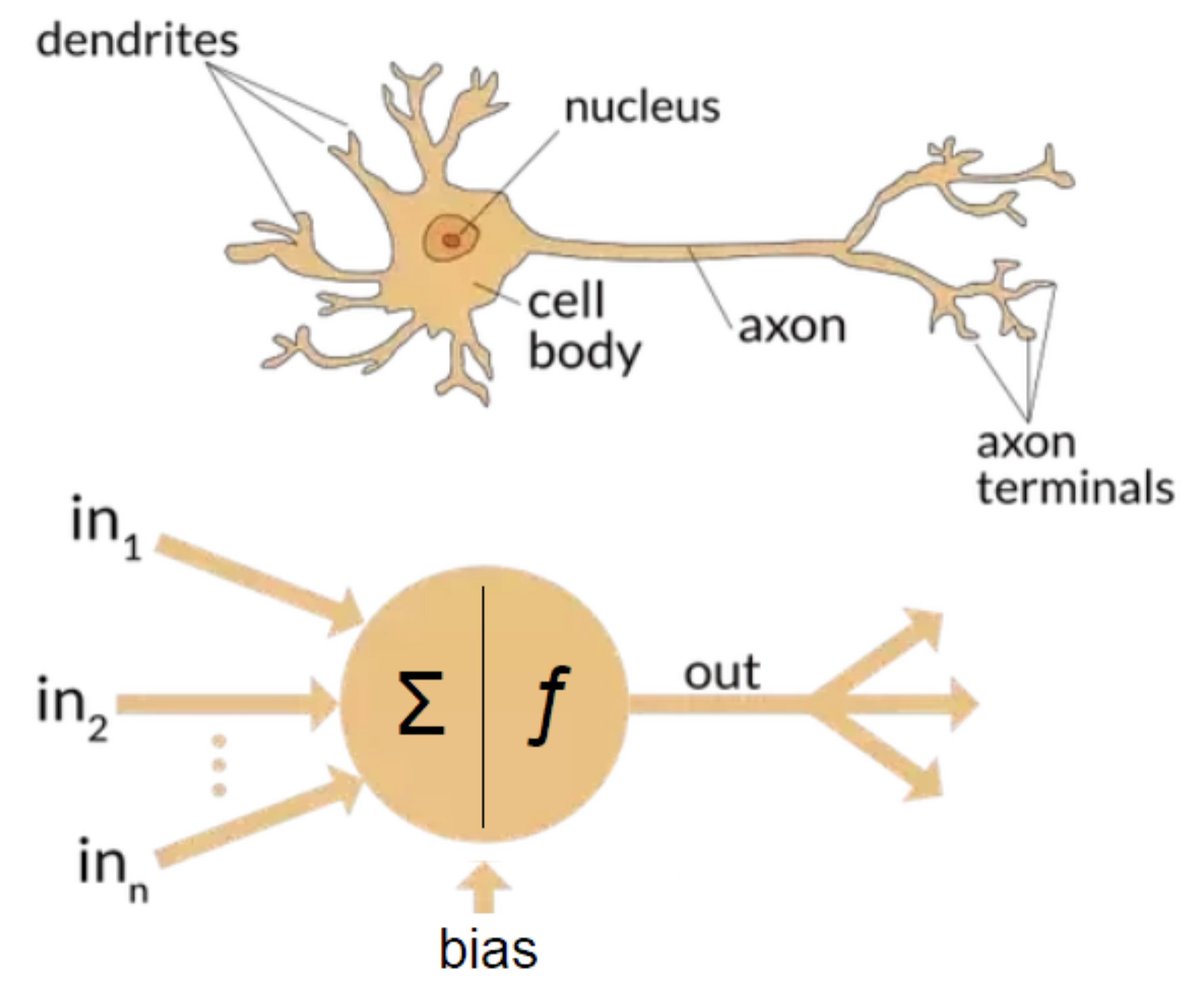

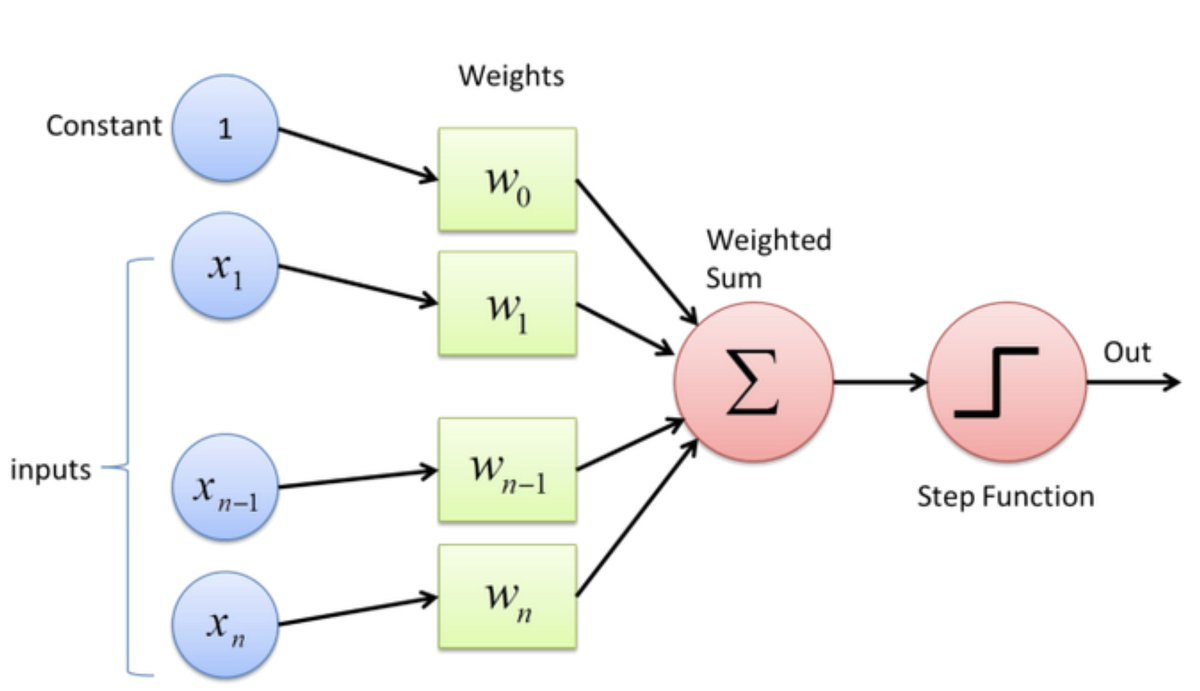

Now that we can simulate a neuron, what is the next step? Of course, to simulate an interconnected web of neurons! These are what are known as artificial neural networks.
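To make this concrete, here is a minimal sketch of one artificial neuron and a tiny network built from a few of them. The sigmoid activation, the helper names (`neuron`, `layer`, `tiny_network`), and all the weights are illustrative assumptions, not values taken from the thread or its images.

```python
import math


def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs, weights, bias):
    """One artificial neuron: weighted sum of inputs, plus bias, then activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)


def layer(inputs, weight_rows, biases):
    """A layer is just several neurons looking at the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]


def tiny_network(inputs):
    """Two inputs -> two hidden neurons -> one output neuron.

    The weights below are made up for illustration only.
    """
    hidden = layer(inputs, [[0.5, -0.6], [0.1, 0.8]], [0.0, -0.2])
    return neuron(hidden, [1.0, -1.0], 0.1)


print(tiny_network([1.0, 2.0]))
```

The "interconnected web" is simply the output of one set of neurons being fed as input to the next.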

It turns out that single-hidden-layer feedforward neural networks can approximate ANY* function to an arbitrary degree of precision.

*terms & conditions applied.

https://t.co/WFRooX53Sx

While the theorem proves that such networks exist for each function and degree of precision, it says NOTHING about how to find them.
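For readers who want the "terms & conditions" spelled out, one common formulation of the universal approximation theorem is sketched below. The symbols ($f$, $K$, $\sigma$, $N$, $\alpha_i$, $w_i$, $b_i$) are notation introduced here, not taken from the thread, and other versions of the theorem use slightly different conditions.

```latex
% Assumptions: K \subset \mathbb{R}^n is compact, f : K \to \mathbb{R} is continuous,
% and \sigma is a continuous, non-polynomial activation function (e.g. the sigmoid).
\forall \varepsilon > 0 \;\; \exists\, N \in \mathbb{N},\;
\alpha_i, b_i \in \mathbb{R},\; w_i \in \mathbb{R}^n \;\; \text{such that} \quad
\sup_{x \in K} \left| \, f(x) - \sum_{i=1}^{N} \alpha_i \, \sigma\!\left( w_i^{\top} x + b_i \right) \right| < \varepsilon
```

Note that the statement only says suitable $N$, $\alpha_i$, $w_i$, $b_i$ exist. It gives no recipe for computing them, and $N$ may need to be very large.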

Let me describe this algorithm in a few easy steps. I will try to keep it as non-mathematical as possible, since many readers might have a non-mathematical background. Some math is unavoidable, though.

https://t.co/AfxlveCec6

https://t.co/yTZ9W55ncy

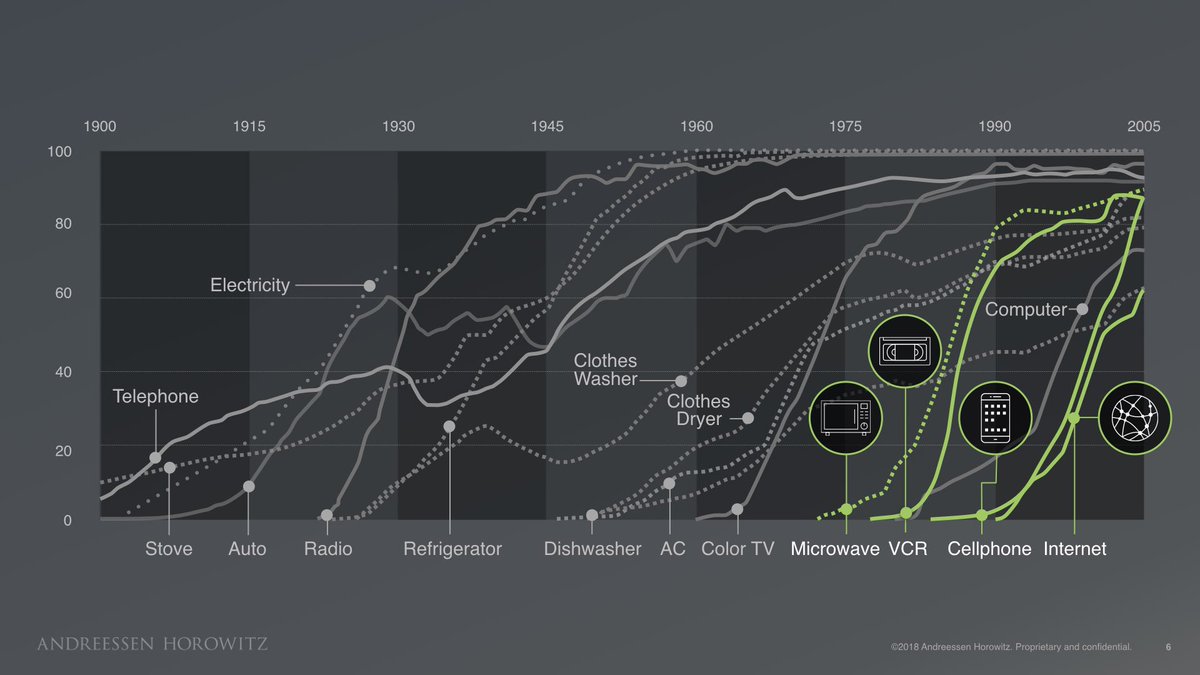

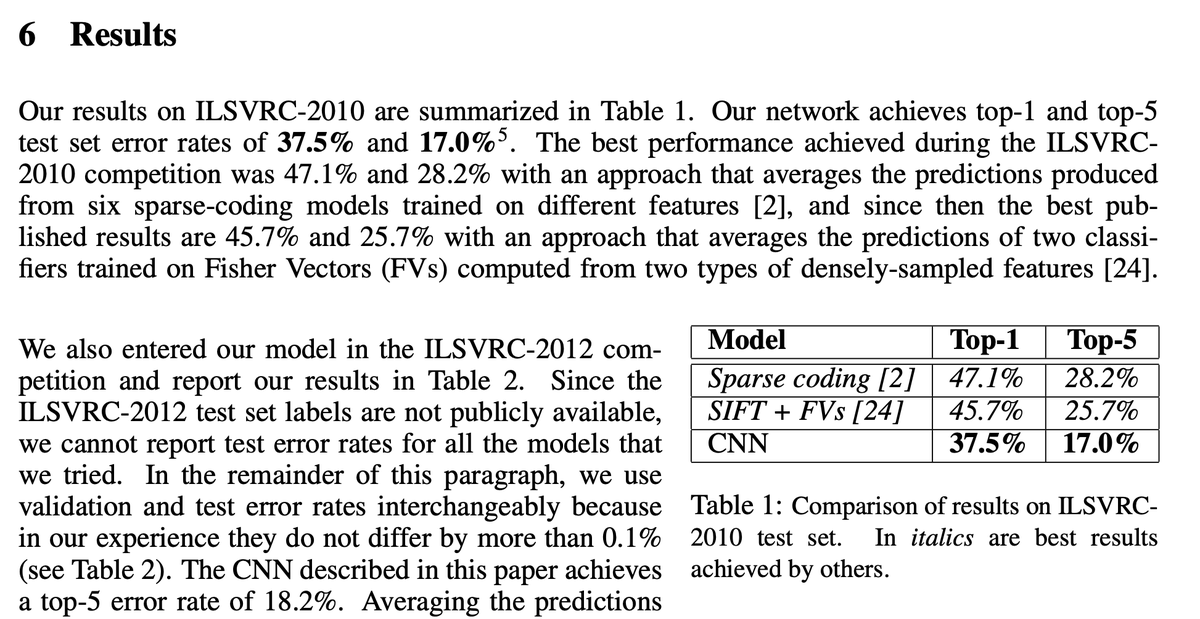

We have known about ANNs for many decades. Why then did we have to wait until the 2010s before seeing wide-ranging applications?

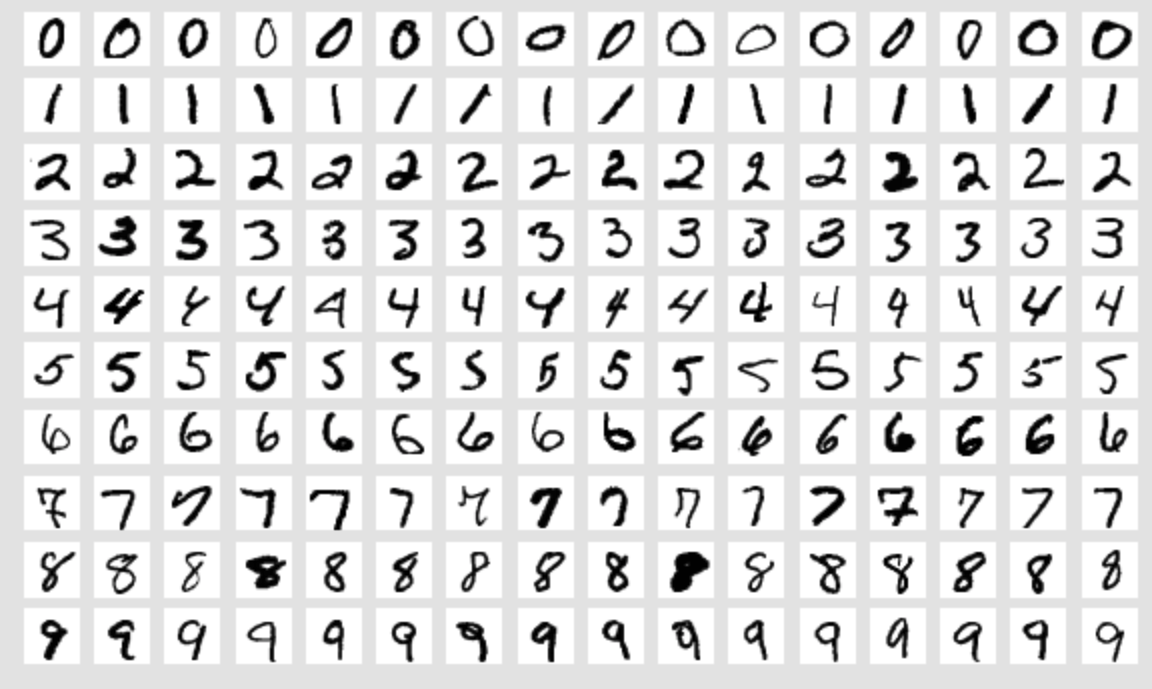

1. Lots of data. If we're learning from data (this is the core of machine learning), we need lots of it to capture all the variations in the data. https://t.co/9x4qnTAWB4

https://t.co/NZDi4fYpU6

https://t.co/tudLnUAhHh

I've been untruthful when I talked about 2 schools of thought in AI. There are several. Traditional ML methods predate modern ML and developed alongside the other schools. Everybody loves talking about the winners.

https://t.co/ylraMZQips

We did parts of this in college.

It wouldn't be an overstatement to say, everything.

Google, Facebook, Instagram, YouTube, Google Photos, Twitter: everything is powered by Deep Learning. Deep Learning is eating software, quite literally.

https://t.co/SXBoEXQuma

You can give this program an English description of what a program should do, and it codes that program. The day might not be far off when standard programming is 'deprecated' by Deep Learning models.

https://t.co/7yz7ydUL0a

https://t.co/AprUFaYKJE

https://t.co/SQOQAPUvBL

There are so many great places. But in my opinion, the best one is courses. There are many of them, all of them great. My Master's guide, Ravindran sir, has free NPTEL courses.

https://t.co/aIjSK13v0F

https://t.co/LwE94cwzoe

For those who prefer the gentler, simpler, easier version.

This is something which has worried many people. The short answer is, not in the foreseeable future. Computers are still quite dumb. They do what they're told. Remember objective functions? That is how you tell a DL model what to do.
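To make "objective function" concrete, here is a minimal sketch. It assumes mean squared error as the objective and plain gradient descent as the way to minimise it. The function names, the toy data, and the learning rate are all illustrative assumptions rather than anything specified in the thread.

```python
def objective(w, b, data):
    """Mean squared error of the line y = w*x + b over the data.

    This number is the only thing the model is 'told' to care about.
    """
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def gradient(w, b, data):
    """Partial derivatives of the objective with respect to w and b."""
    n = len(data)
    dw = sum(2 * (w * x + b - y) * x for x, y in data) / n
    db = sum(2 * (w * x + b - y) for x, y in data) / n
    return dw, db


def train(data, learning_rate=0.05, steps=2000):
    """Repeatedly nudge w and b in the direction that lowers the objective."""
    w, b = 0.0, 0.0
    for _ in range(steps):
        dw, db = gradient(w, b, data)
        w -= learning_rate * dw
        b -= learning_rate * db
    return w, b


# Toy data generated from y = 2x + 1 (made up for illustration).
data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
w, b = train(data)
print(w, b, objective(w, b, data))
```

The model never decides what it wants. It only pushes its numbers toward whatever makes the objective smaller, which is why it does exactly what it is told and nothing more.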

More from Sahil Sharma

This thread is to create awareness on how to use

https://t.co/3jeqlXO0QH

Please note that I am not officially associated with the website. I am only an active contributor & hope to benefit from the network effects of interested investors actively contributing on the platform.

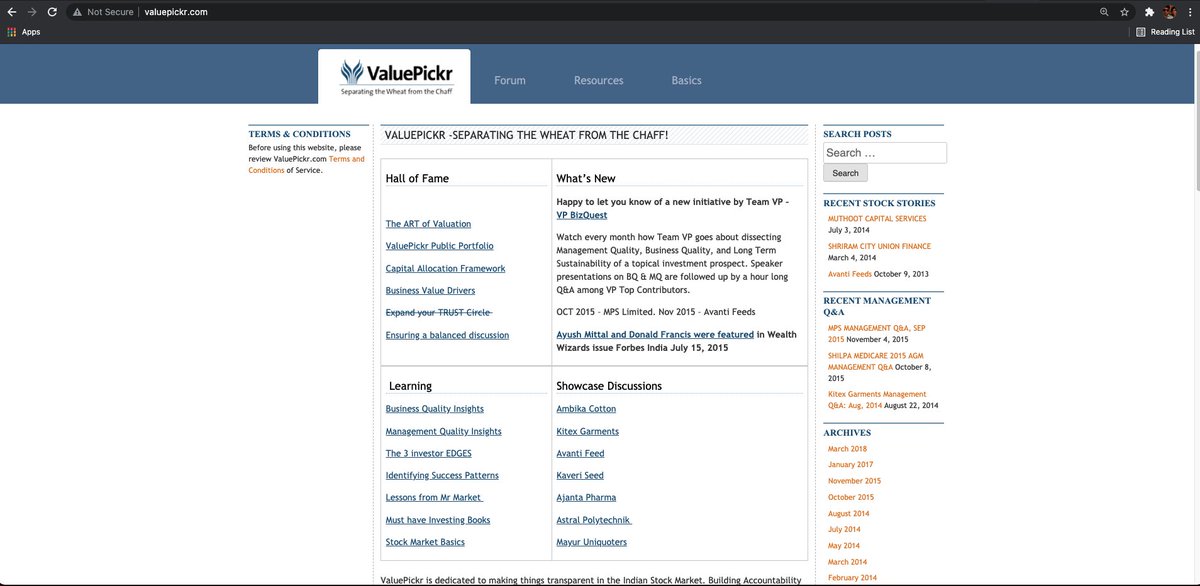

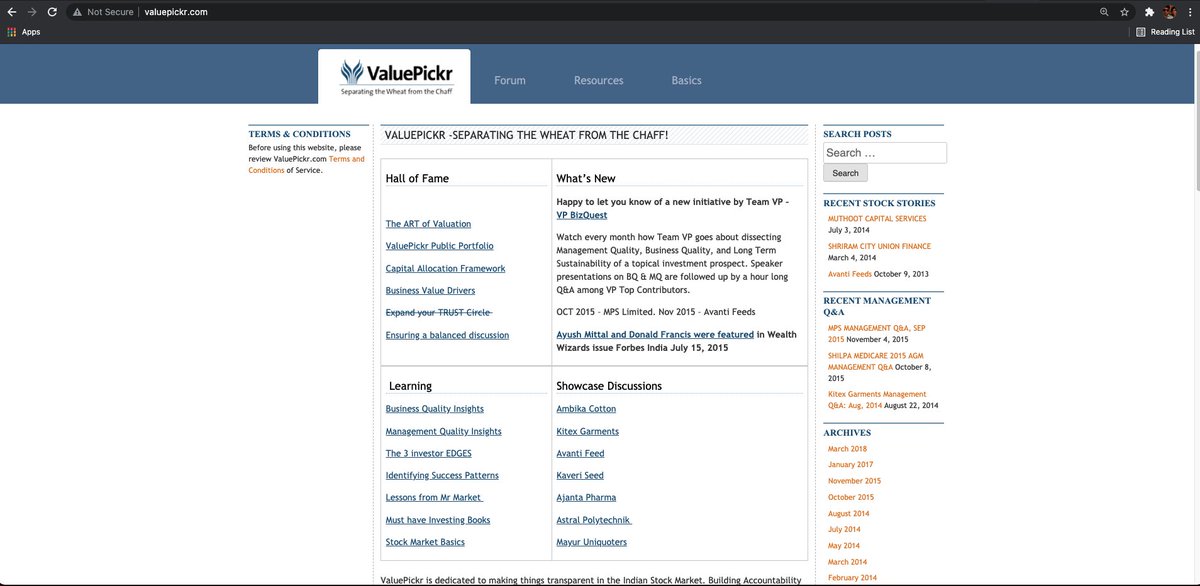

https://t.co/64yVScplqi is above all else a community of like-minded investors who want to actively engage in understanding at a fundamental level & separate the wheat from the chaff.

Develop an understanding of the biz, industry, competitive intensity, management, valuation.

The website was created more than 10 years ago. Early users are seasoned investors & I personally look to learn a great deal by following their footprints across the website.

There are broadly speaking 4-5 types of "threads" or discussion places across the website.

1. Featured discussions (hall of fame, showcase discussions, learning).

These enable an investor to level up the most. These discussions are a showcase for the website itself.

These are also a great starting point. Since VP is not a course, feel free to traverse them in any order.

Such opportunities only come once in a few years.

Step-by-step: how to use (the free) @screener_in to generate investment ideas.

Do retweet if you find it useful to benefit max investors. 🙏🙏

Ready or not, 🧵🧵⤵️

I will use the free screener version so that everyone can follow along.

Outline

1. Stepwise Guide

2. Practical Example: CoffeeCan Companies

3. Practical Example: Smallcap Consistent compounders

4. Practical Example: Smallcap turnaround

5. Key Takeaway

1. Stepwise Guide

Step1

Go to https://t.co/jtOL2Bpoys

Step2

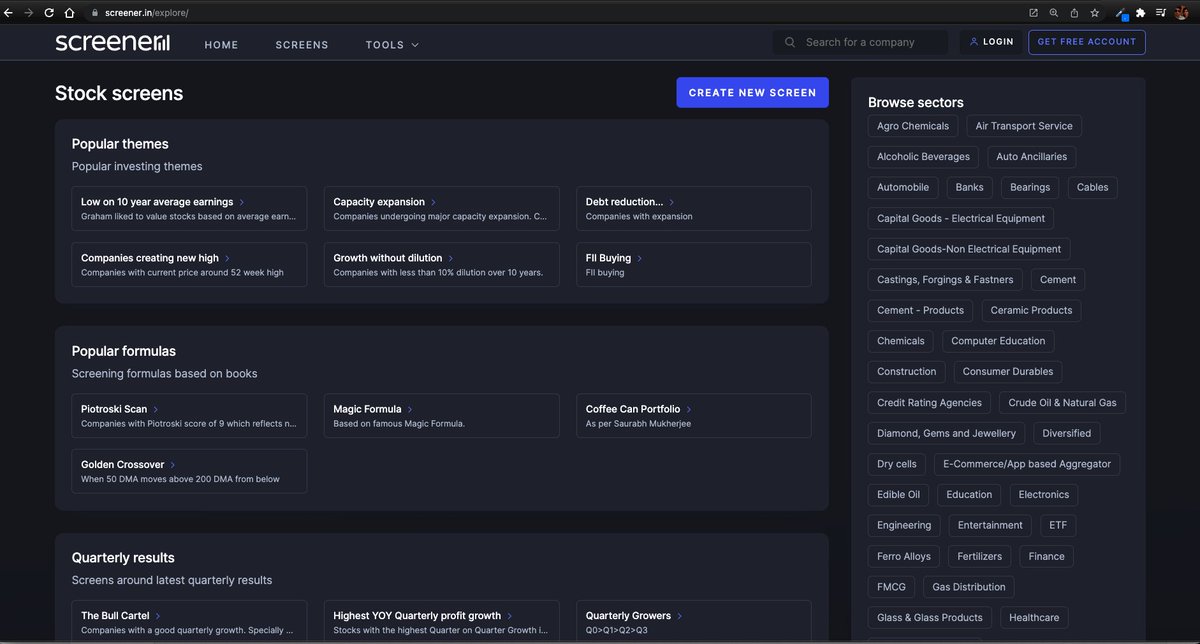

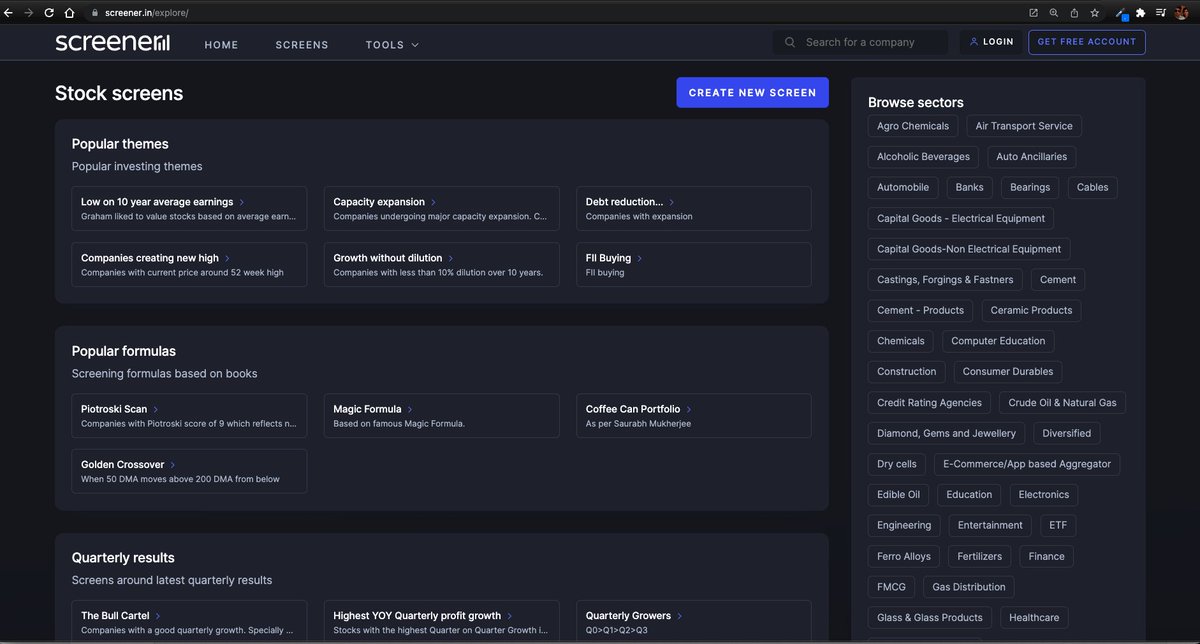

Go to "SCREENS" tab

Step3

Go to "CREATE NEW SCREEN"

At this point you need to register. There are no charges. I did that with my brother's email ID. This is what you see after that.

More from Genericlearnings

You May Also Like

Joe Rogan's podcast is now listened to 1.5+ billion times per year at around $50-100M/year revenue.

Independent and 100% owned by Joe, no networks, no middle men and a 100M+ people audience.

👏

https://t.co/RywAiBxA3s

Joe is the #1 / #2 podcast (depending on the week) of all podcasts

120 million plays per month source https://t.co/k7L1LfDdcM

https://t.co/aGcYnVDpMu

So it's now October 10, 2018 and....Rod Rosenstein is STILL not fired.

He's STILL in charge of the Mueller investigation.

He's STILL refusing to hand over the McCabe memos.

He's STILL holding up the declassification of the #SpyGate documents & their release to the public.

I love a good cover story.......

The guy had a face-to-face with El Grande Trumpo himself on Air Force One just 2 days ago. Inside just about the most secure SCIF in the world.

And Trump came out of AF1 and gave ol' Rod a big thumbs up!

And so we're right back to 'that dirty rat Rosenstein!' 2 days later.

At this point it's clear some members of Congress are either in on this and helping the cover story or they haven't got a clue and are out in the cold.

Note the conflicting stories about 'Rosenstein cancelled meeting with Congress on Oct 11!'

First, rumors surfaced of a scheduled meeting on Oct. 11 between Rosenstein & members of Congress, and Rosenstein just cancelled it.

Rep. Andy Biggs and Rep. Matt Gaetz say DAG Rod Rosenstein cancelled an Oct. 11 appearance before the judiciary and oversight committees. They are now calling for a subpoena. pic.twitter.com/TknVHKjXtd

— Ivan Pentchoukov 🇺🇸 (@IvanPentchoukov) October 10, 2018

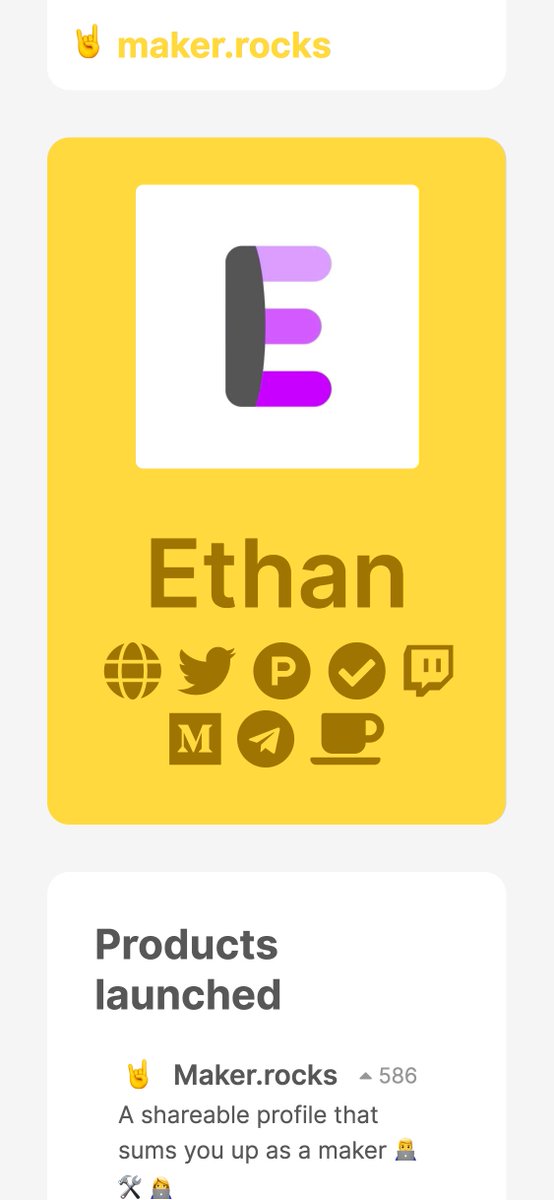

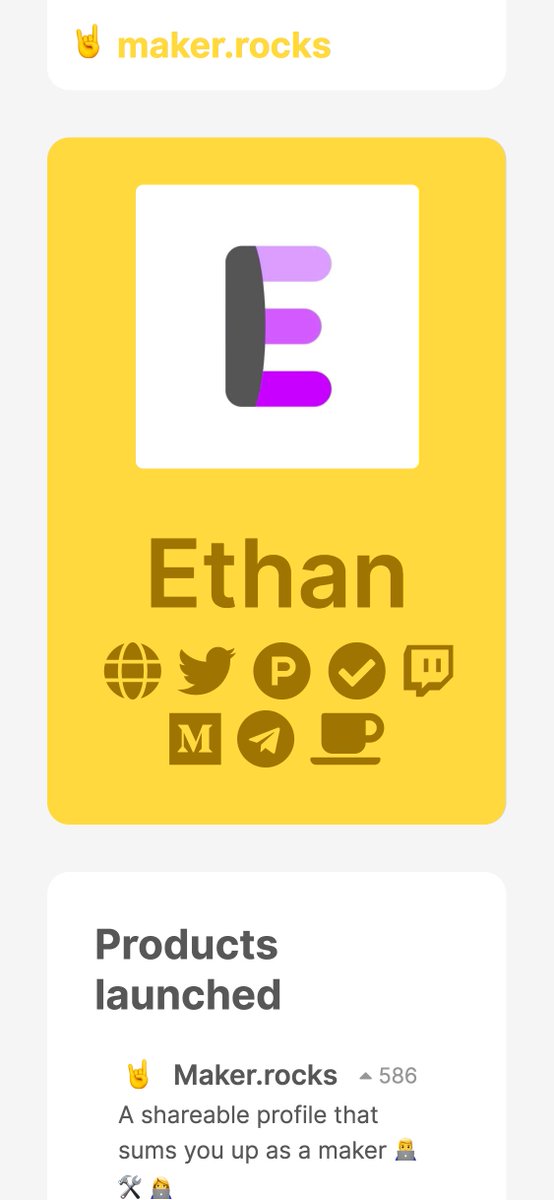

First update to https://t.co/lDdqjtKTZL since the challenge ended – Medium links!! Go add your Medium profile now 👀📝 (thanks @diannamallen for the suggestion 😁)

Just added Telegram links to https://t.co/lDdqjtKTZL too! Now you can provide a nice easy way for people to message you :)

Less than 1 hour since I started adding stuff to https://t.co/lDdqjtKTZL again, and profile pages are now responsive!!! 🥳 Check it out -> https://t.co/fVkEL4fu0L

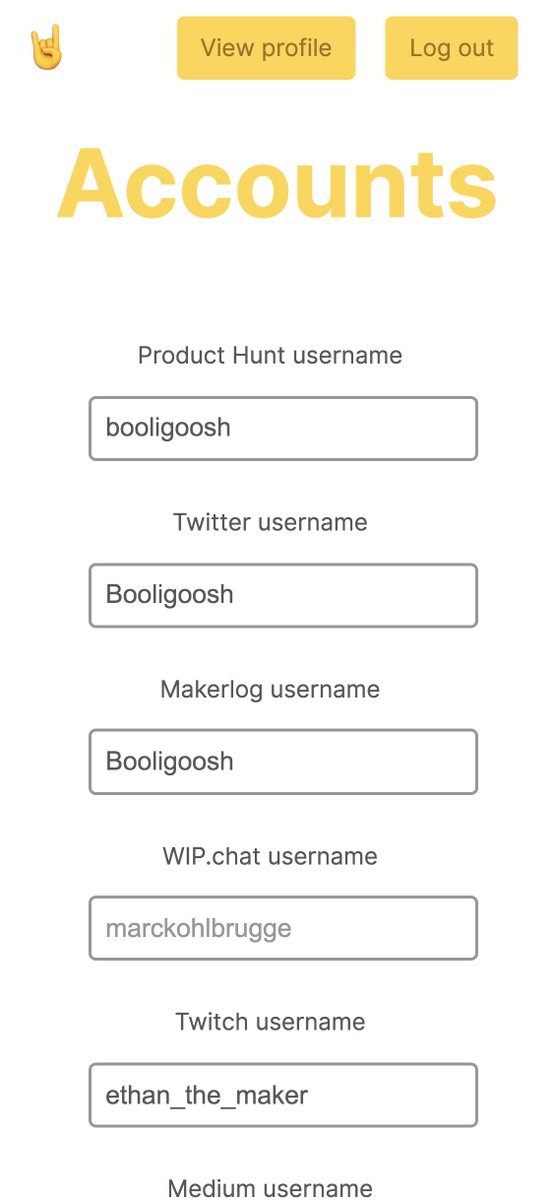

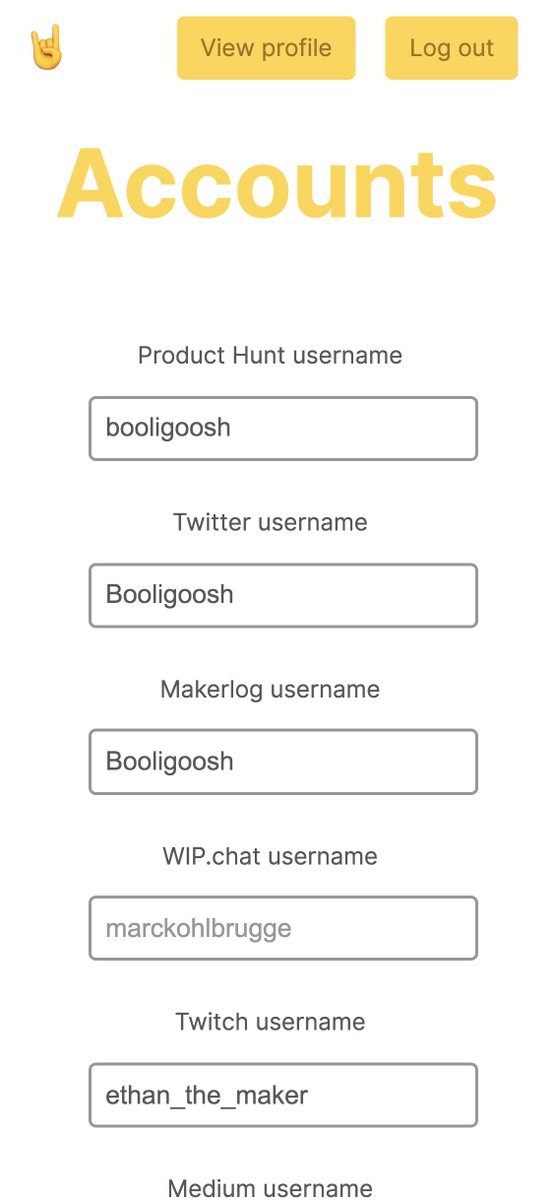

Accounts page is now also responsive!! 📱✨

💪 I managed to make the whole site responsive in about an hour. On my roadmap I had it down as 4-5 hours!!! 🤘🤠🤘
