From @jack:

Starting now, we are changing the way we do things at Twitter. Even though we have been following policies we created, that we thought were good and necessary, it just hasn’t had the desired effect of promoting good discourse. Effective immediately, we are changing.

— @jack

More from For later read

A name has caught the radar of agencies investigating #GretaToolkit- FRIEDRICH PIETER. Delhi Police expressed shock over the appearance of Pieter's name as "Who to Follow" in g-doc as he is under cops' scanner since 2006 for his Anti-India activities. Some shocking details..

1/9

Pieter is close associate (read hired by) Bhajan Singh Bhindar, founder of OFMI (Org for Minorities of India) that considers itself an anti-Gandhi 'crusader' & is Pro-Khalistan. They also campaigned to free Bhullar (convicted Khalistani terr0r!st) & lobby against Modi in US.

2/9

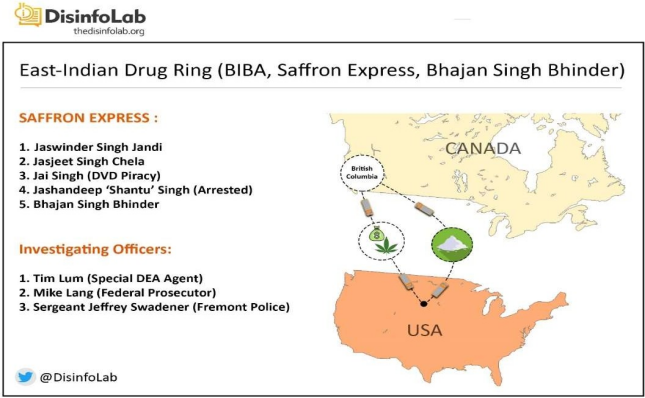

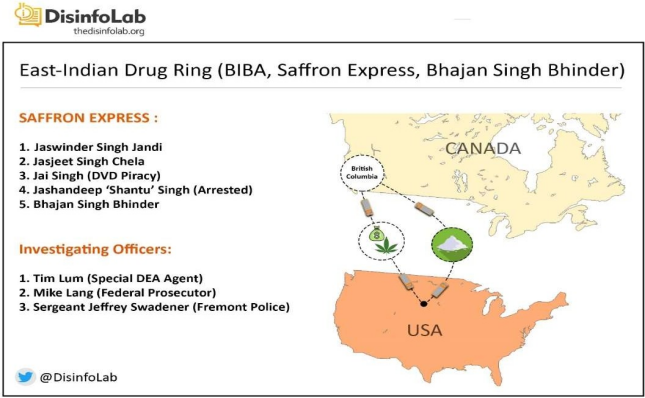

Bhinder has alleged connection with ISI & had records of owning inter-state drugs cartel & DVD piracy for terr0r funding. They also took control Fremont Gurudwara, US back in 2003 for millions of donation. Details of this 'Info-War' by @DisinfoLab

https://t.co/oIDFSoaDX2

3/9

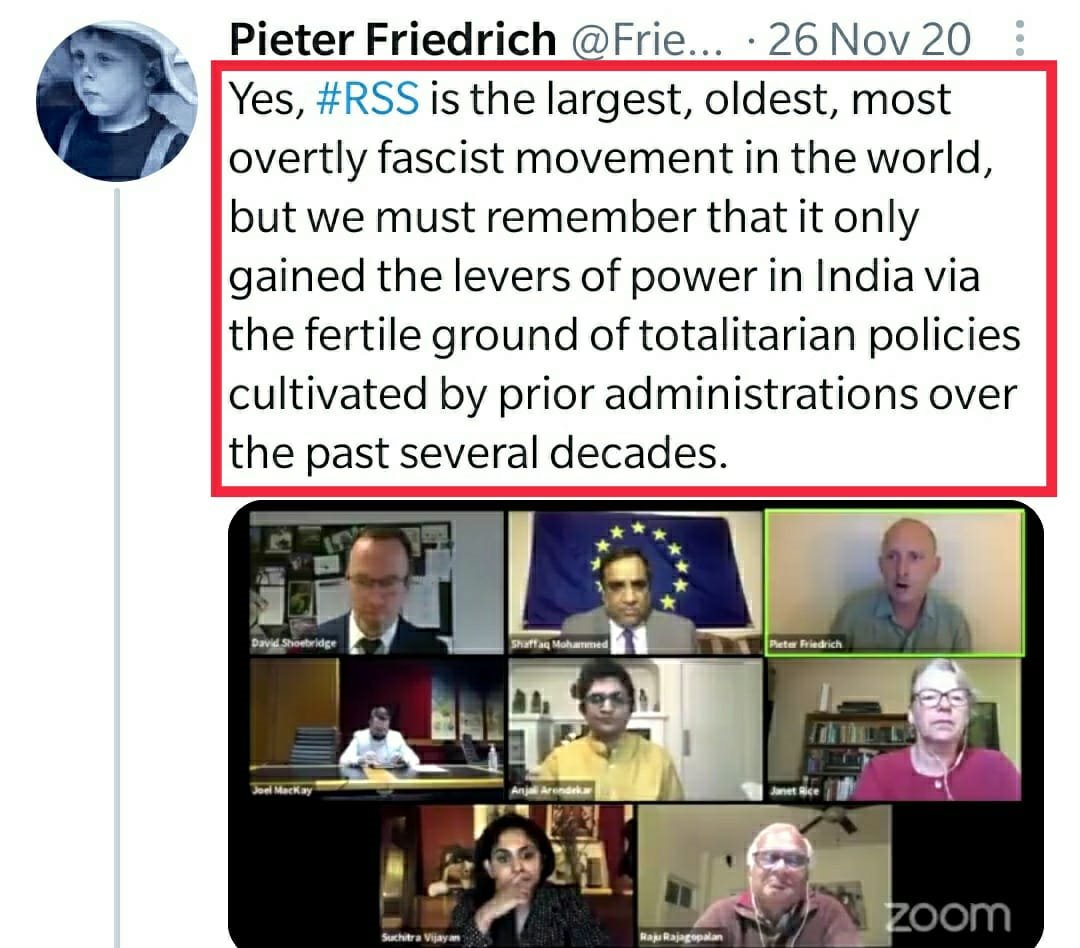

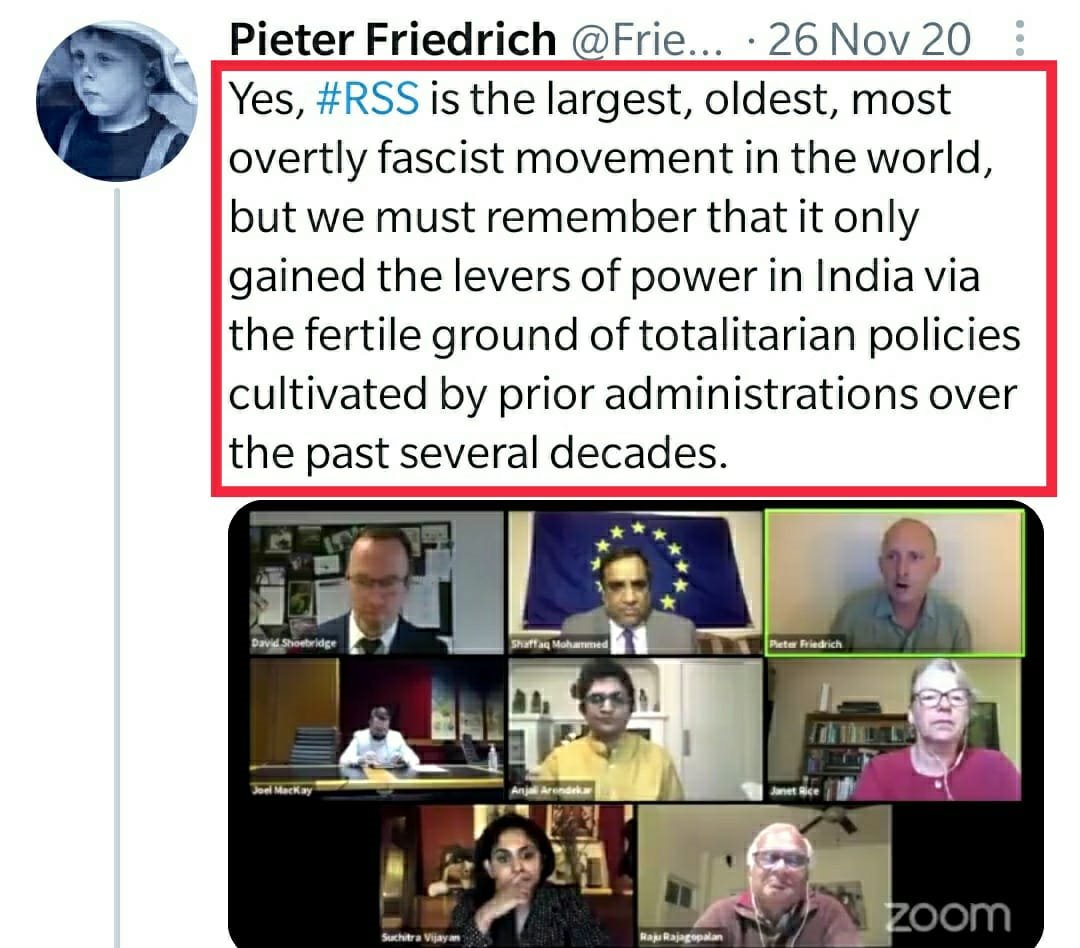

Back to Pieter. Take a glance at his work. His TL is filled with anti-BJP/RSS/Modi propaganda. From his speeches to articles, everything have few keywords in common- RSS/Fascism/gen0c!de/k!ll!ing/Kashmir/Hindutva, as if running a non-stop unrest in India is his bread & butter

4/9

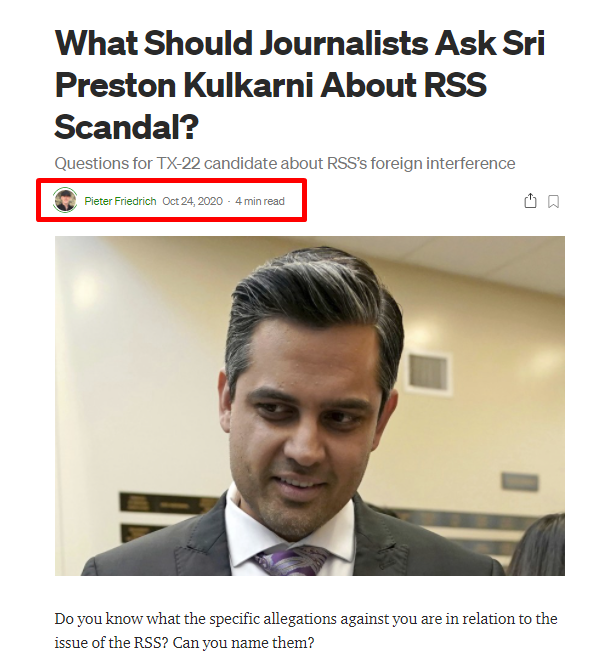

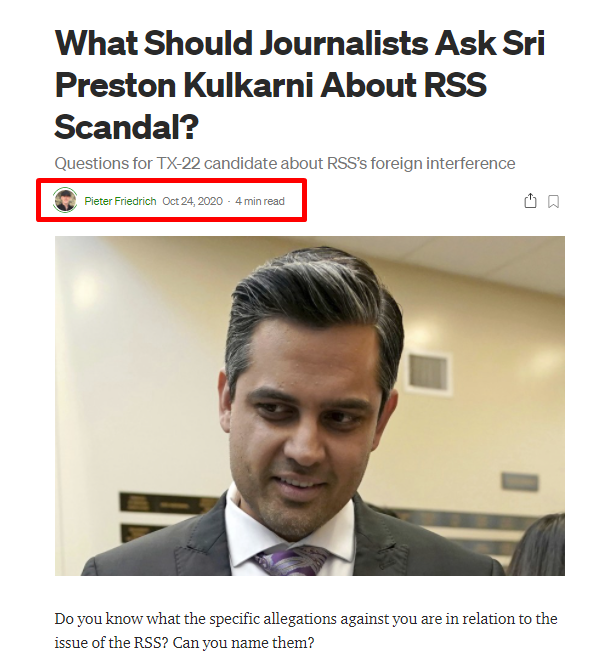

He picks every topic with an extreme narrative that potentially hurts integrity of nation, be it Kashmir,CAA,1984 & with his recent projects- Farmer protest & campaigning heavily against Sri Kulkarni. Despite all, Kulkarni appointed as Chief of Ext Affairs at Biden admin.

5/9

Part of what is going on here is that large sectors of evangelicalism are poorly equipped to help people deal with basic struggles, let alone the ubiquitous pornography addictions that most of their men have been enslaved to for years.

On the one hand, there's a high standard of holiness. On the other hand, there's a model of growth that is basically "Try Harder to Mean it More." Identify the relevant scriptural truth & believe it with all of your sincerity so that you may access the Holy Spirit's help to obey.

Helping sincere believers believe and obey the Bible facts is pretty much all the Holy Spirit does these days, other than convict us of our sins in light of the Bible facts.

If you know you are sincere and hate your sin and believe the right Bible facts as hard as you can but continue to be enslaved to your pornography addiction, what else is left for you to do? Just Really, Just Really, Just Really Trust God and Give it to Him?

To suggest that there are other strategies available sounds to those formed in this model of growth like one is also suggesting that the Bible is insufficient, but it also suggests something just as threatening- that there are aspects of reality that are not immediately apparent.

There’s this crazy horseshoe where having a strong emphasis on human sinfulness just turns into a power washer that blasts all harm and wrong-doing down to the same level of things people (re: men) inevitably do given half a chance. https://t.co/BLOWzpf1RA

— Laura Robinson (@LauraRbnsn) February 13, 2021
