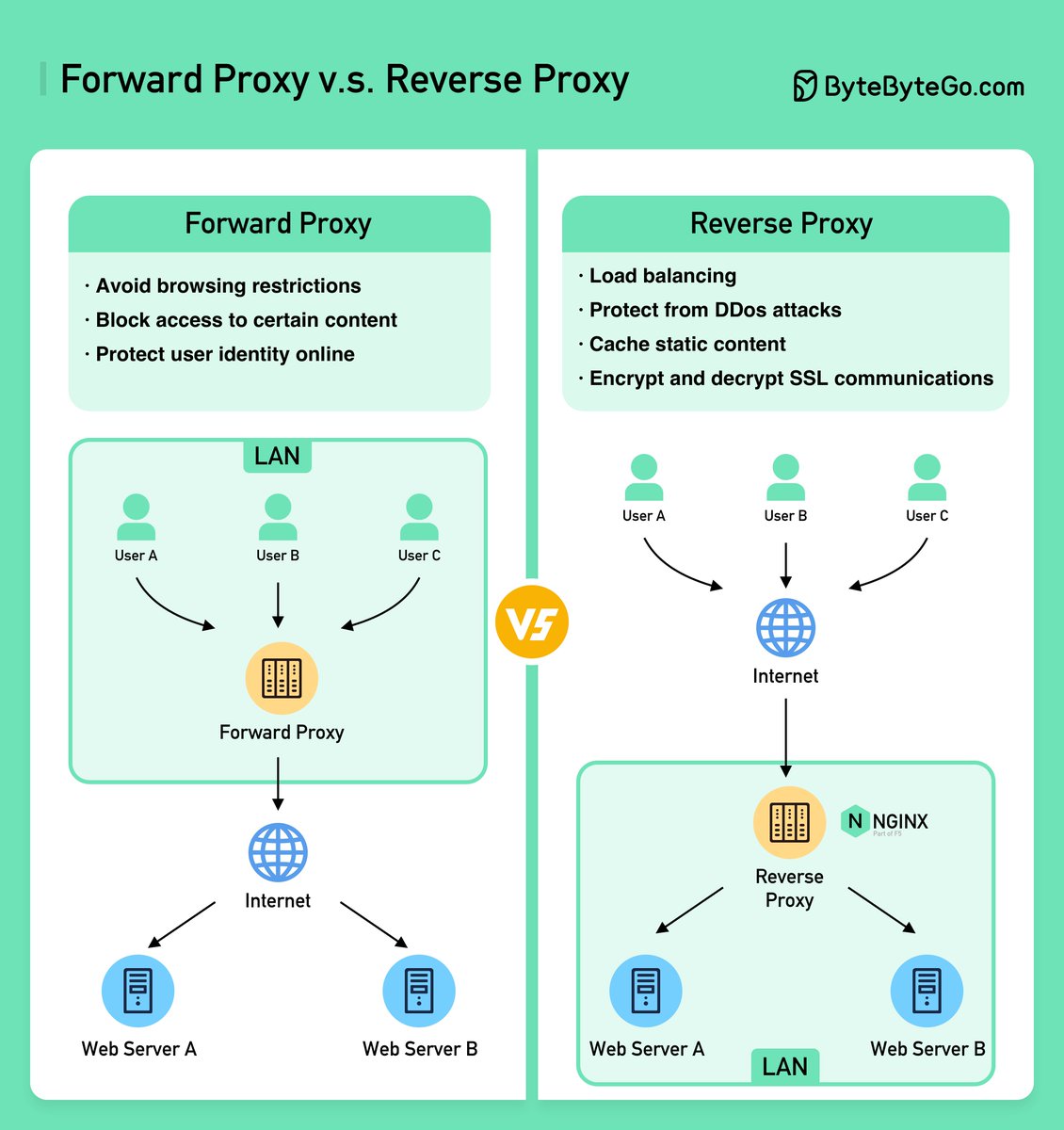

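A forward proxy sits between client devices and the internet and sends requests to servers on the clients' behalf. It is commonly used to:

1️⃣ Protect clients (servers see the proxy's IP address instead of the client's)

2️⃣ Bypass browsing restrictions

3️⃣ Block access to certain content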
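The sketch below is a toy model of the three uses listed above. It is not any real proxy's implementation. It shows how a forward proxy sees the full target URL of a plain-HTTP request, which lets it refuse blocked hosts or forward the request under its own address. The blocklist, the host names, and the proxy address `proxy.internal.example:3128` are hypothetical. `urllib.request.ProxyHandler` is the standard-library way to point a Python client at a proxy.

```python
import urllib.request
from urllib.parse import urlsplit

# Hypothetical blocklist, for illustration only (use 3: block certain content).
BLOCKED_HOSTS = {"blocked.example", "ads.example"}


def is_blocked(host: str) -> bool:
    """True if host is on the blocklist or is a subdomain of a blocked host."""
    return host in BLOCKED_HOSTS or any(host.endswith("." + b) for b in BLOCKED_HOSTS)


def handle_proxy_request(request_line: str) -> tuple[int, str]:
    """Decide what a toy forward proxy does with one HTTP/1.1 request line.

    For plain-HTTP requests, a client that is configured to use a proxy sends
    the full URL (absolute-form), e.g. "GET http://site.example/ HTTP/1.1",
    so the proxy can see which origin server the client wants to reach.
    """
    method, target, _version = request_line.split(" ", 2)
    host = urlsplit(target).hostname or ""
    if is_blocked(host):
        return 403, f"blocked {host}"
    # A real proxy would now open its own connection to `host` and relay the
    # response; the origin server sees the proxy's address, not the client's.
    return 200, f"forward {method} to {host}"


# Client side: send plain-HTTP traffic through a hypothetical proxy address.
# Building the opener does not contact the network.
opener = urllib.request.build_opener(
    urllib.request.ProxyHandler({"http": "http://proxy.internal.example:3128"})
)
```

For example, `handle_proxy_request("GET http://cdn.ads.example/x.js HTTP/1.1")` returns `(403, 'blocked cdn.ads.example')` because subdomains of a blocked host are refused too.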

/1 Why is Nginx called a “**reverse**” proxy?

— Alex Xu (@alexxubyte) September 29, 2022

The diagram below shows the differences between a **forward proxy** and a **reverse proxy**. pic.twitter.com/k8xQwBVgW2
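A forward proxy acts for clients, so servers do not see who the client is. A reverse proxy such as Nginx works the other way around: it sits in front of the servers and acts for them, so clients only ever talk to the proxy and do not know which backend handled their request. In Nginx this is typically configured with `proxy_pass` pointing at an `upstream` group, whose default balancing method is round-robin. The Python below is a toy model of that idea only, not how Nginx is implemented. The backend addresses are hypothetical.

```python
import itertools


class ReverseProxy:
    """Toy reverse proxy: clients only know the proxy's address.

    The proxy hides the backend servers and picks one per request using
    round-robin, the default balancing method for an Nginx `upstream` group.
    """

    def __init__(self, backends: list[str]) -> None:
        if not backends:
            raise ValueError("need at least one backend")
        self._pool = itertools.cycle(backends)

    def route(self, path: str) -> str:
        """Return the internal URL this request would be forwarded to."""
        backend = next(self._pool)
        # A real proxy would send the request to `backend` and relay the
        # response back, so the client never learns which server handled it.
        return backend + path


# Hypothetical internal backend addresses, for illustration only.
proxy = ReverseProxy(["http://10.0.0.1:8080", "http://10.0.0.2:8080"])
```

Calling `proxy.route("/a")` and then `proxy.route("/b")` sends the two requests to `10.0.0.1` and `10.0.0.2` in turn, while the client addresses only the proxy.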

Meet Yang Ruifu, CCP's biological weapons expert https://t.co/JjB9TLEO95 via @Gnews202064

— Billy Bostickson 🏴👁&👁 🆓 (@BillyBostickson) October 11, 2020

Interesting expose of China's top bioweapons expert who oversaw fake pangolin research

Paper 1: https://t.co/TrXESKLYmJ

Paper 2: https://t.co/9LSJTNCn3l

Pangolin: https://t.co/2FUAzWyOcv pic.twitter.com/I2QMXgnkBJ