Why: Better negotiation is the key to getting what you want.

https://t.co/fQf9wrFmdF

7 no BS tactics to become a world-class negotiator: 🧵

— Barrett O'Neill (@barrettjoneill) April 6, 2022

Authentic leaders create an organizational climate of:

— Coach Clint (@IAmCoachClint) April 5, 2022

• Productivity
• Commitment
• Job satisfaction
• Knowledge-sharing
• Higher performance
• Greater work engagement

Here are 9 ways for you to be an Authentic Leader:

There are endless ways to increase cash flow in your business, and

— Kurtis Hanni (@KurtisHanni) April 5, 2022

as a Chief Financial Officer, here are my 9 favorites:

15 Marketing Tactics Every Startup Should Know (and what you need to know about them):

— Brian Bourque 🚀 (@bbourque) April 3, 2022

Startups are losing top talent everyday to the Great Resignation.

— Greg Moran 🌎 Evergreen Mountain Equity Partners (@EvergreenMEP) April 8, 2022

Don't be a victim.

Stay Interviews can help you retain your talent. Here are the questions to ask:

Startups are all about learning fast. Founders are busy.

— Greg Moran 🌎 Evergreen Mountain Equity Partners (@EvergreenMEP) April 9, 2022

My weekly roundup of the week's top 5 impactful threads to help accelerate personal and business growth ...

Here we go ...

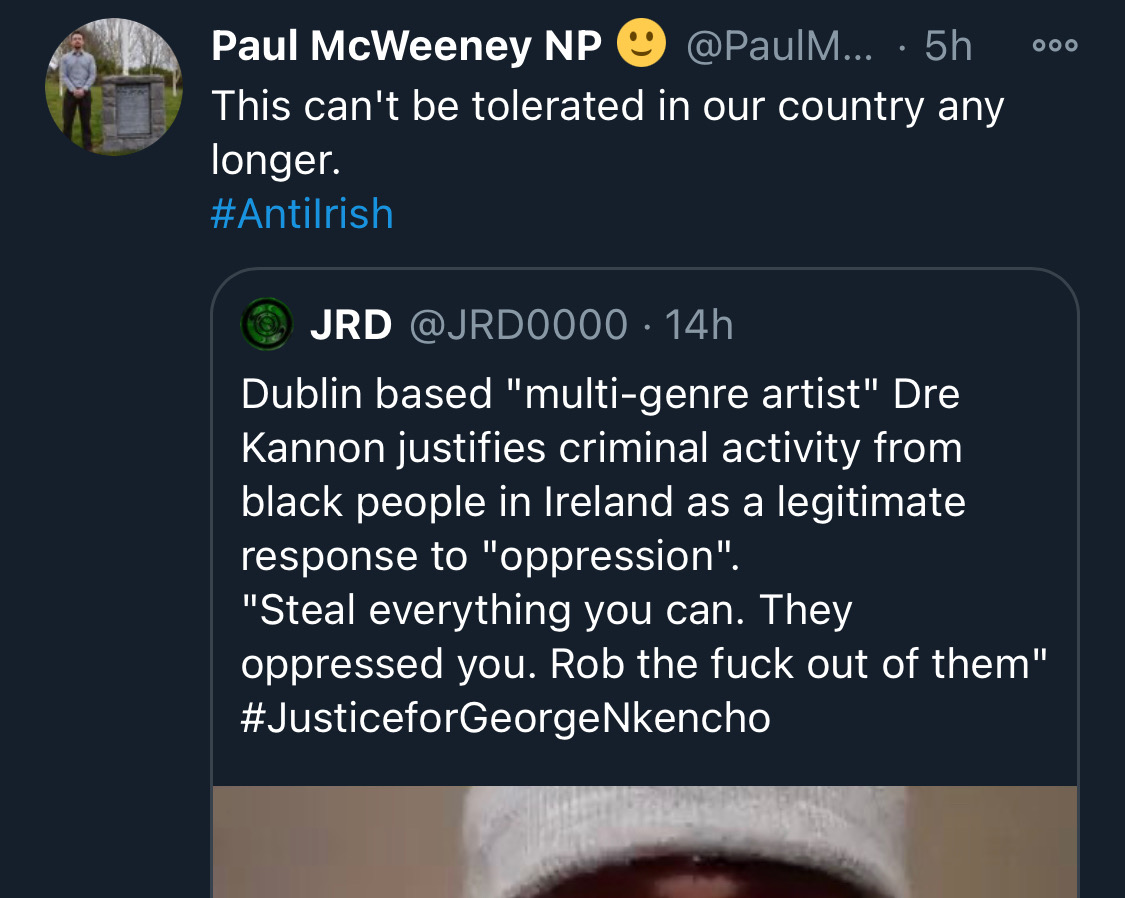

Be aware, the images the #farright are sharing in the hopes of starting a race war, are not of the SPAR employee that was punched. They’re older photos of a Everton fan. Be aware of the information you’re sharing and that it may be false. Always #factcheck #GeorgeNkencho pic.twitter.com/4c9w4CMk5h

— antifa.drone (@antifa_drone) December 31, 2020

There is a concerted effort in far-right Telegram groups to try and incite violence on street by targetting people for racist online abuse following the killing of George Nkencho

— Mark Malone (@soundmigration) January 1, 2021

This follows on and is part of a misinformation campaign to polarise communities at this time.

The stock exploded & went up as much as 63% from my price.

— Manas Arora (@iManasArora) June 22, 2020

Closed my position entirely today! #BroTip pic.twitter.com/CRbQh3kvMM

What an extended (away from averages) move looks like!!

— Manas Arora (@iManasArora) June 24, 2020

If you don't learn to sell into strength, be ready to give away the majority of your gains. #GLENMARK pic.twitter.com/5DsRTUaGO2

#HIKAL

— Manas Arora (@iManasArora) July 2, 2021

Closed remaining at 560

Reason: It is 40+% from 10wma. Super extended

Total revenue: 11R * 0.25 (size) = 2.75% on portfolio

Trade closed pic.twitter.com/YDDvhz8swT

When you see 15 green weeks in a row, that's the end of the move. *Extended*

— Manas Arora (@iManasArora) August 26, 2019

Simple price action analysis. #Seamecltd https://t.co/gR9xzgeb9K