A list of cool websites you might not know about

A thread 🧵

https://t.co/k3sGW1m1d6

This website is made by me 🙈

https://t.co/inO0Uig6NQ

https://t.co/Bf1Ph0xxYv

Made by me and @PinglrHQ

https://t.co/kEP8EnvKqO

Made by @Prajwxl and @krishnalohiaaa

Timeless articles from the belly of the internet. Served 5 at a time

Creator: @louispereira

One of my fav websites

https://t.co/5ZR0tvr26b

Thanks @RK_382922 for suggesting!

https://t.co/Pi9Dpjo8A9

One of my fav websites

https://t.co/gM20ZVT4lS

A list of cool websites you might not know about

A thread 🧵

— Sahil (@sahilypatel) August 14, 2021

This is cool, let me add some more

A continuation thread 🧵... https://t.co/vwmNZmslaY

— Aditya Bansal (@itsadityabansal) August 14, 2021

You May Also Like

1/x Fort Detrick History

Mr. Patrick, one of the chief scientists at the Army Biological Warfare Laboratories at Fort Detrick in Frederick, Md., held five classified US patents for the process of weaponizing anthrax.

2/x

Under Mr. Patrick’s direction, scientists at Fort Detrick developed a tularemia agent that, if disseminated by airplane, could cause casualties & sickness over thousands of mi². Within a 10,000 mi² range, it had a 90% casualty rate & a 50% fatality rate

3/x His team explored Q fever, plague, & Venezuelan equine encephalitis, testing more than 20 anthrax strains to discern the most lethal variety. Fort Detrick scientists used aerosol spray systems inside fountain pens, walking sticks, light bulbs, & even in the exhaust pipes of a 1953 Mercury

4/x After retiring in 1986, Mr. Patrick remained one of the world’s foremost specialists on biological warfare & was a consultant to the CIA, FBI, & US military. He debriefed Soviet defector Ken Alibek, the deputy chief of the Soviet biowarfare program

https://t.co/sHqSaTSqtB

5/x Back in Time

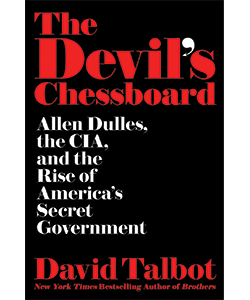

In 1949 the Army created a small team of chemists at "Camp Detrick" called the Special Operations Division. Its assignment was to find military uses for toxic bacteria. The coercive use of toxins was a new field, which fascinated Allen Dulles, later head of the CIA
