I could create an entire Twitter feed of things Facebook has tried to cover up since 2015. Where do you want to start, Mark and Sheryl? https://t.co/1trgupQEH9

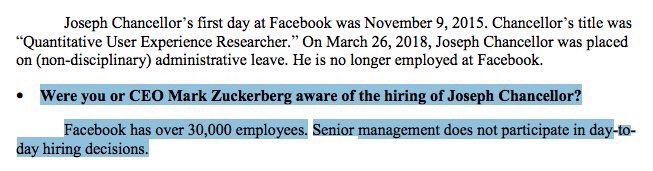

Answer: "Facebook has over 30,000 employees. Senior management does not participate in day-to-day hiring decisions."

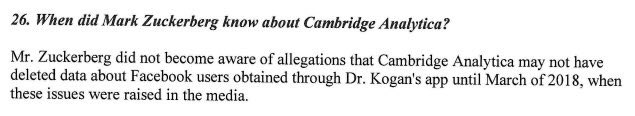

Answer: "He did not become aware of allegations CA may not have deleted data about FB users obtained through Dr. Kogan's app until March of 2018, when these issues were raised in the media."

A company as powerful as @facebook should be subject to proper scrutiny. Mike Schroepfer, its CTO, told us that the buck stops with Mark Zuckerberg on the Cambridge Analytica scandal, which is why he should come and answer our questions @DamianCollins @IanCLucas pic.twitter.com/0H4VMhtIFu

— Digital, Culture, Media and Sport Committee (@CommonsCMS) May 23, 2018


It's all in French, but if you're up for it you can read:

• Their blog post (lacks the most interesting details): https://t.co/PHkDcOT1hy

• Their high-level legal decision: https://t.co/hwpiEvjodt

• The full notification: https://t.co/QQB7rfynha

I've read it so you needn't!

Vectaury was collecting geolocation data to build profiles (e.g., people who often go to this or that type of shop) for ad targeting. They operate through embedded SDKs and ad bidding, which makes them invisible to users.
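The thread doesn't describe Vectaury's actual pipeline. As a rough illustration of what a profile like "people who often go to this or that type of shop" can mean, here is a minimal Python sketch. It assumes geolocation pings have already been matched to a shop category; `build_profiles`, `min_visits`, and the sample data are all hypothetical.

```python
from collections import Counter, defaultdict


def build_profiles(visits, min_visits=3):
    """Tag each device with every shop category it visited at least `min_visits` times.

    `visits` is a hypothetical list of (device_id, shop_category) pairs, i.e.
    location pings already matched to a nearby point of interest.
    """
    counts = defaultdict(Counter)
    for device_id, category in visits:
        counts[device_id][category] += 1
    # Keep only the categories a device visits often enough to count as a "habit".
    return {
        device_id: sorted(cat for cat, n in cats.items() if n >= min_visits)
        for device_id, cats in counts.items()
    }


visits = [
    ("device-a", "sporting goods"),
    ("device-a", "sporting goods"),
    ("device-a", "sporting goods"),
    ("device-a", "pharmacy"),
    ("device-b", "bakery"),
]
print(build_profiles(visits))  # {'device-a': ['sporting goods'], 'device-b': []}
```

Even this toy version shows why the CNIL treats such profiling as risky: a simple frequency count over location data is enough to infer habits.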

The @CNIL notes that profiling based on geolocation presents particular risks because it reveals people's movements and habits. Because it is risky, the processing requires consent; this will be the heart of their assessment.

Interesting point: they justify the decision in part by how many people COULD be targeted in this way (rather than how many have been, though they note that too). Because it runs on phones, and many people have phones, it is considered large-scale processing no matter what.

I put it together a long time ago, and it was very helpful! I sliced it apart a thousand times until things started to make sense.

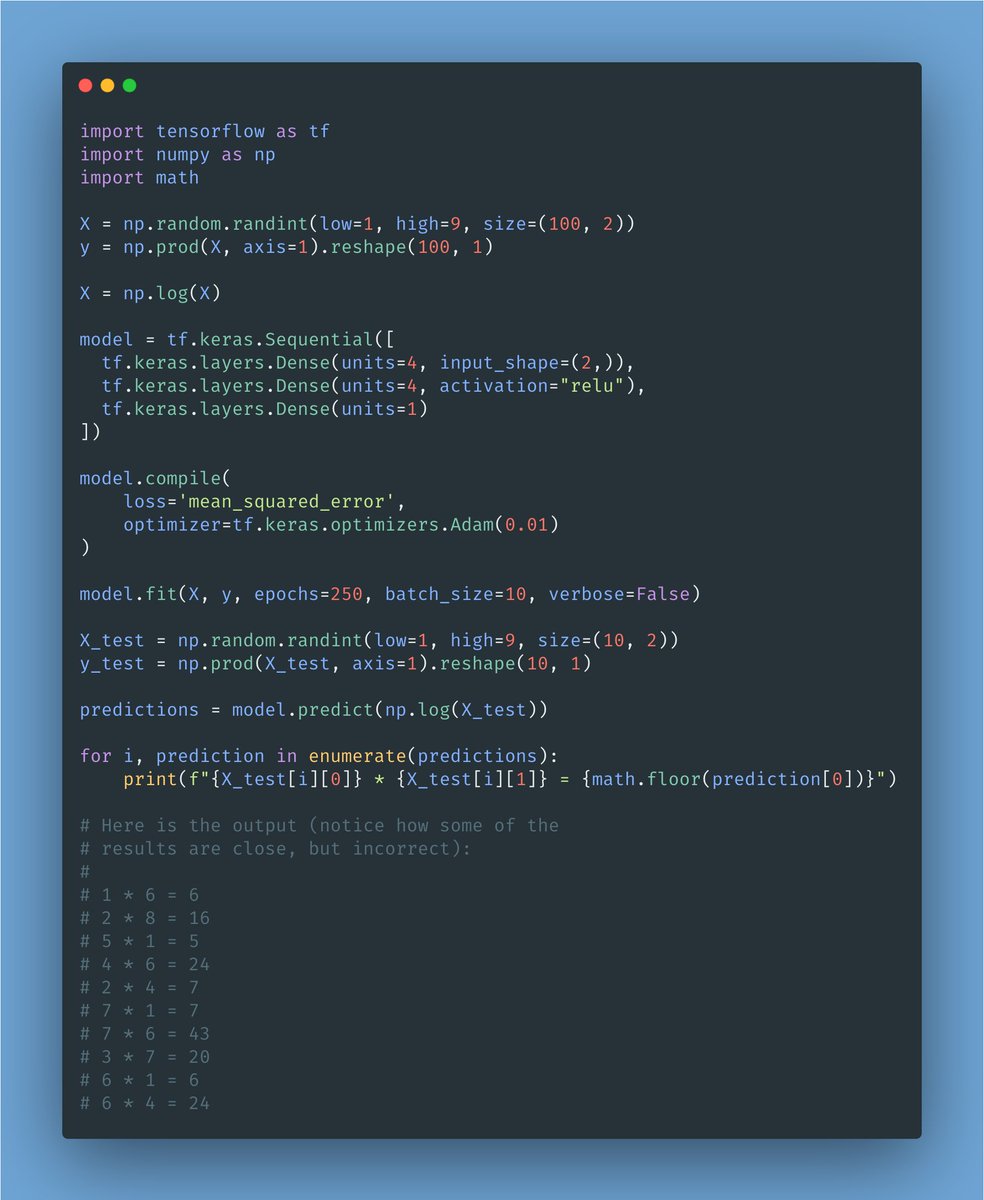

It's TensorFlow and Keras.

If you are starting out, this may be a good puzzle to solve.

The goal of this model is to learn to multiply one-digit numbers.

It is a good coding example. What is the model?

— Freddy Rojas Cama (@freddyrojascama) February 1, 2021
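The original TensorFlow/Keras code is only in the screenshot, so the sketch below does not reproduce it. It builds the kind of training data such a puzzle implies, under two assumptions of ours: each digit is one-hot encoded (20 input features in total), and the product is treated as one of 82 classes (0 to 81). `one_hot` and `make_dataset` are hypothetical helpers, written in plain Python so they run without TensorFlow installed.

```python
from itertools import product


def one_hot(digit, size=10):
    """Encode a digit 0-9 as a one-hot list of length `size`."""
    vec = [0] * size
    vec[digit] = 1
    return vec


def make_dataset():
    """All 100 pairs (a, b) of one-digit numbers, with a * b as the target."""
    inputs, targets = [], []
    for a, b in product(range(10), repeat=2):
        inputs.append(one_hot(a) + one_hot(b))  # 20 input features
        targets.append(a * b)                   # class label in 0..81
    return inputs, targets


X, y = make_dataset()
print(len(X), len(X[0]), max(y))  # 100 20 81
```

In Keras, lists like these could be converted to arrays and passed to `model.fit`. A classifier over 82 outputs is only one possible framing; a regression head predicting `a * b` directly would be another.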


Covering one of the most unusual setups: extended moves & reversal plays

Time for a 🧵 to learn the above from @iManasArora

What qualifies for an extended move?

A 30-40% move in just 5-6 days is one example of an extended move.
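The rule of thumb above can be written as a simple check on daily closes. This is a minimal sketch: the 30% threshold and the 6-session window come from the tweet, but measuring the gain from the close `days` sessions ago is our assumption, and `is_extended_move` is a hypothetical name.

```python
def is_extended_move(closes, days=6, min_gain=0.30):
    """Return True if price rose at least `min_gain` over the last `days` sessions.

    `closes` is a list of daily closing prices, oldest first.
    """
    if len(closes) <= days:
        return False  # not enough history to look back `days` sessions
    start, end = closes[-days - 1], closes[-1]
    return (end - start) / start >= min_gain


print(is_extended_move([100, 104, 110, 118, 125, 130, 135]))  # True  (+35% in 6 sessions)
print(is_extended_move([100, 102, 101, 103, 104, 105, 106]))  # False (+6%)
```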

How Manas used this info to book

The stock exploded & went up as much as 63% from my price.

— Manas Arora (@iManasArora) June 22, 2020

Closed my position entirely today! #BroTip pic.twitter.com/CRbQh3kvMM

Post that the plight of the

What an extended (away from averages) move looks like!!

— Manas Arora (@iManasArora) June 24, 2020

If you don't learn to sell into strength, be ready to give away the majority of your gains. #GLENMARK pic.twitter.com/5DsRTUaGO2

Example 2: Booking profits when the stock is extended from 10WMA

10WMA = 10-week moving average

#HIKAL

— Manas Arora (@iManasArora) July 2, 2021
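How far a stock is "extended from 10WMA" can be measured as the percentage gap between the latest weekly close and the 10-week moving average. This is a minimal sketch that assumes a simple (not weighted or exponential) average of weekly closes. The function names and sample prices are hypothetical, not the HIKAL data.

```python
def moving_average(weekly_closes, period=10):
    """Simple moving average of the last `period` weekly closes."""
    if len(weekly_closes) < period:
        raise ValueError("need at least `period` weekly closes")
    return sum(weekly_closes[-period:]) / period


def pct_from_ma(weekly_closes, period=10):
    """Distance of the latest close from its moving average (0.40 means 40% above)."""
    ma = moving_average(weekly_closes, period)
    return (weekly_closes[-1] - ma) / ma


closes = [100] * 9 + [150]  # nine flat weeks, then a sharp jump
print(round(pct_from_ma(closes), 3))  # 0.429, i.e. about 43% above the 10WMA
```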

Closed remaining at 560

Reason: It is 40+% from the 10WMA. Super extended.

Total return: 11R * 0.25 (size) = 2.75% on portfolio

Trade closed pic.twitter.com/YDDvhz8swT
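The arithmetic "11R * 0.25 (size) = 2.75% on portfolio" works out only if 1R on a full-size position equals 1% of the portfolio. The tweet doesn't state this; it is our assumption, exposed as a parameter in the hypothetical helper below.

```python
def portfolio_return_pct(r_multiple, position_size, risk_per_full_position_pct=1.0):
    """Convert a trade's R-multiple into a percent return on the whole portfolio.

    r_multiple: gain measured in units of initial risk (R).
    position_size: fraction of a full position that was traded (0.25 = quarter size).
    risk_per_full_position_pct: ASSUMED % of portfolio risked on a full-size position.
    """
    return r_multiple * position_size * risk_per_full_position_pct


print(portfolio_return_pct(11, 0.25))  # 2.75
```

With a different risk budget per full position, for example 0.5%, the same 11R trade at quarter size would add only 1.375% to the portfolio.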

Another hack to identify an extended move in a stock:

Too many green days!

Read

When you see 15 green weeks in a row, that's the end of the move. *Extended*

— Manas Arora (@iManasArora) August 26, 2019
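The "too many green days" idea comes down to counting the current run of up candles. This is a minimal sketch: the 15-week threshold comes from the tweet, while defining "green" as close > open for the week is our assumption (some traders compare against the prior week's close instead). `green_streak` and `looks_extended` are hypothetical names.

```python
def green_streak(candles):
    """Count consecutive green candles (close > open) ending at the latest one.

    `candles` is a list of (open, close) tuples, oldest first.
    """
    streak = 0
    for open_, close in reversed(candles):
        if close > open_:
            streak += 1
        else:
            break  # the run ends at the first non-green candle
    return streak


def looks_extended(weekly_candles, max_green=15):
    """Flag a move as extended after `max_green` green weeks in a row."""
    return green_streak(weekly_candles) >= max_green


weeks = [(10, 9)] + [(i, i + 1) for i in range(15)]  # one red week, then 15 green
print(green_streak(weeks), looks_extended(weeks))  # 15 True
```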

Simple price action analysis. #Seamecltd https://t.co/gR9xzgeb9K