Neural Volume Rendering for Dynamic Scenes

NeRF has shown incredible view synthesis results, but it requires multi-view captures of STATIC scenes.

How can we achieve view synthesis for DYNAMIC scenes from a single video? Here is what I learned from several recent efforts.

Okay, here we go.

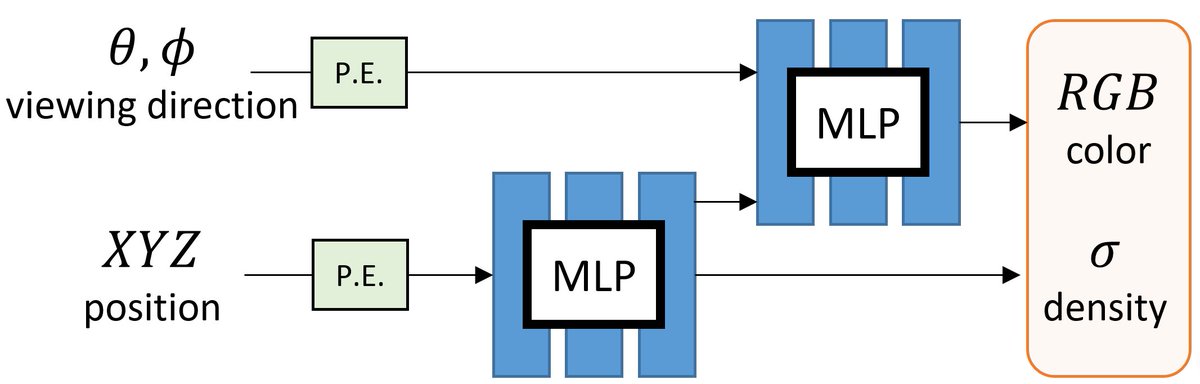

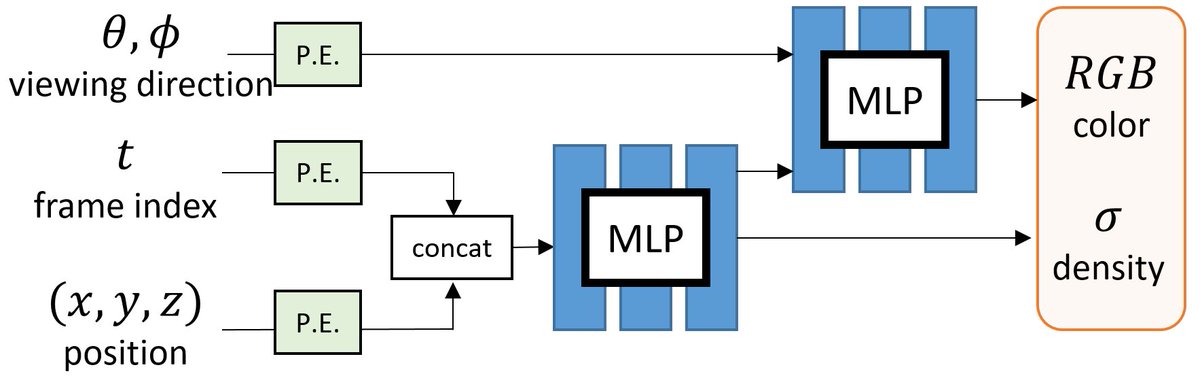

NeRF represents a scene as a continuous 5D function that maps a 3D position (x, y, z) and a 2D viewing direction (θ, φ) to color and volume density. It then renders an image by accumulating these colors and densities along each camera ray with volume rendering.
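Here is a minimal NumPy sketch of NeRF's discrete volume-rendering quadrature.

- Each sample i along a ray gets an opacity α_i = 1 - exp(-σ_i δ_i), where δ_i is the distance to the next sample.
- Its weight is α_i times the transmittance T_i, the fraction of light not yet absorbed by earlier samples.
- The pixel color is the weighted sum of the sample colors.

The function name `render_ray` and the 1e10 padding for the last interval are illustrative choices. This thread does not specify them.

```python
import numpy as np

def render_ray(sigmas, colors, t_vals):
    """Composite samples along one ray with NeRF-style quadrature.

    sigmas: (N,) volume densities at the samples
    colors: (N, 3) RGB colors at the samples
    t_vals: (N,) increasing sample distances along the ray
    """
    # Distance between adjacent samples; the last interval is padded with a
    # huge value so the final sample can absorb any remaining light.
    deltas = np.append(np.diff(t_vals), 1e10)
    # Opacity of each segment: alpha_i = 1 - exp(-sigma_i * delta_i)
    alphas = 1.0 - np.exp(-sigmas * deltas)
    # Transmittance T_i = prod_{j<i} (1 - alpha_j): light surviving to sample i
    trans = np.cumprod(np.append(1.0, 1.0 - alphas[:-1]))
    weights = trans * alphas
    # Expected color along the ray
    rgb = (weights[:, None] * colors).sum(axis=0)
    return rgb, weights

# Toy ray: empty space, then an opaque red sample, then a green one behind it.
rgb, weights = render_ray(
    sigmas=np.array([0.0, 1e3, 1e3]),
    colors=np.array([[0.0, 0.0, 1.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]),
    t_vals=np.array([1.0, 2.0, 3.0]),
)
```

In this toy ray, the first sample is empty and the second is effectively opaque. All of the weight therefore lands on the red sample, giving `rgb` = [1, 0, 0], and the green sample behind it is fully occluded.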

Volumetric + Implicit -> Awesome!

Building on NeRF, there are two main types of approaches for handling dynamic scenes.

A) 4D (or 6D with views) function.

One direct approach is to include TIME as an additional input to learn a DYNAMIC radiance field.

e.g., Video-NeRF, NSFF, NeRFlow
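Below is a minimal sketch of approach A, assuming a small random-weight MLP in NumPy in place of a trained network.

- The names `dynamic_field`, `trunk`, and `head` are hypothetical.
- Positional encoding and training are omitted.
- Time is simply concatenated with the 3D position.
- As in NeRF, density depends only on the point (here, position and time), while color also sees the view direction.
- The view direction is passed as a 3D unit vector, a common practical choice, rather than two angles.

```python
import numpy as np

rng = np.random.default_rng(0)

def init_mlp(sizes):
    # Random weights stand in for a trained network (illustration only).
    return [(rng.normal(0.0, 1.0 / np.sqrt(m), (m, n)), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]

def mlp(params, h):
    # Fully connected layers with ReLU on every layer except the last.
    for i, (W, b) in enumerate(params):
        h = h @ W + b
        if i < len(params) - 1:
            h = np.maximum(h, 0.0)
    return h

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# Hypothetical dynamic radiance field with TIME as an extra input.
trunk = init_mlp([3 + 1, 64, 64, 1 + 64])  # (x, y, z, t) -> density + features
head = init_mlp([64 + 3, 32, 3])           # features + view direction -> RGB

def dynamic_field(x, t, d):
    """x: (N, 3) positions, t: scalar time, d: (N, 3) unit view directions."""
    t_col = np.full((x.shape[0], 1), float(t))
    h = mlp(trunk, np.concatenate([x, t_col], axis=1))
    sigma = np.maximum(h[:, 0], 0.0)  # density depends on (x, t) only
    rgb = sigmoid(mlp(head, np.concatenate([h[:, 1:], d], axis=1)))
    return rgb, sigma
```

Querying the same points at two different times generally returns different colors and densities. This is how a single function can represent motion.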

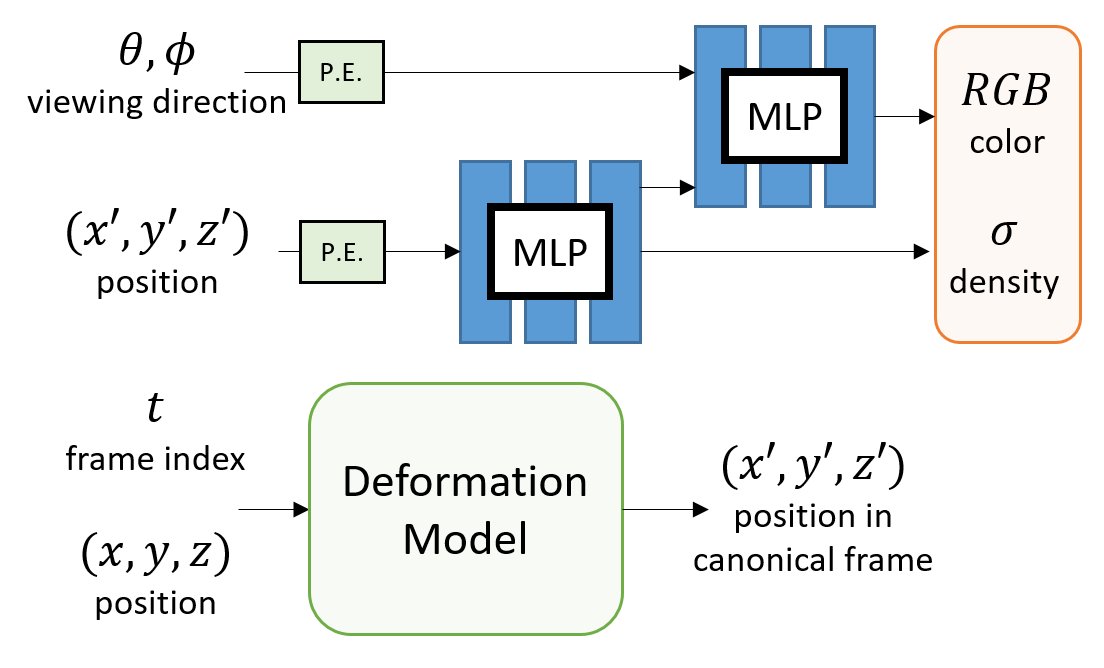

B) 3D template with deformation.

Inspired by non-rigid reconstruction methods, this type of approach learns a radiance field in a canonical frame (template) and predicts a deformation for each frame to account for dynamics over time.

e.g., Nerfies, NR-NeRF, D-NeRF
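The template-plus-deformation idea can be sketched with two hand-written stand-ins, both hypothetical.

- The canonical template is a solid sphere at the origin.
- The deformation is a constant-velocity translation.

In the methods above, both would be learned MLPs. The point of the sketch is the structure: every query at time t is first warped back into the canonical frame, and only then is the shared template evaluated.

```python
import numpy as np

def canonical_density(x_c, radius=0.5):
    # Hypothetical canonical template: a solid sphere of density 10 at the
    # origin. In the papers above this is a learned NeRF-style MLP.
    return np.where(np.linalg.norm(x_c, axis=-1) < radius, 10.0, 0.0)

def deformation(x, t, velocity=(1.0, 0.0, 0.0)):
    # Hypothetical deformation: the object translates with constant velocity,
    # so a point observed at time t maps back to the canonical frame by
    # subtracting t * velocity. In the papers this warp is predicted by an MLP.
    return x - t * np.asarray(velocity)

def density_at_time(x, t):
    # Warp observed points into the template, then query the shared field.
    return canonical_density(deformation(x, t))
```

For example, the point (2, 0, 0) lies inside the object at t = 2 because it warps to the origin. At t = 0 it lies outside.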

All of these methods use an MLP to encode the deformation field. So how do they differ?

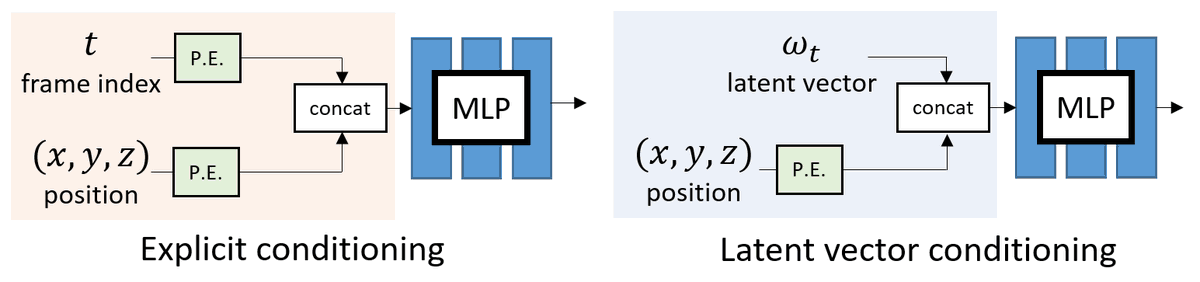

A) INPUT: How to encode the additional time dimension as input?

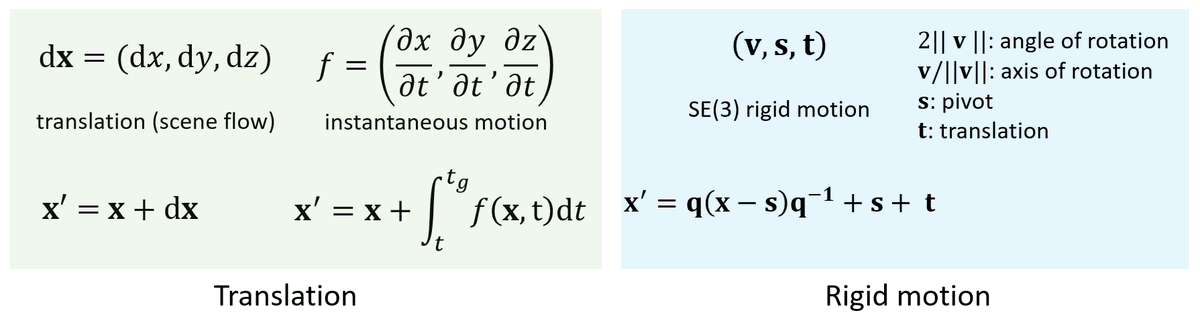

B) OUTPUT: How to parametrize the deformation field?

One can choose to use EXPLICIT conditioning by treating the frame index t as input.

Alternatively, one can use a learnable LATENT vector for each frame.

We can use the MLP to predict either:

- dense 3D translation vectors (aka scene flow), or

- a dense rigid motion field (a rotation plus a translation at each point)
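The input and output choices can be sketched together, assuming NumPy and a tiny random-weight MLP in place of a trained deformation network.

- The names (`encode_explicit`, `encode_latent`, `apply_translation`, `apply_rigid`, `rodrigues`, `warp`) and the sizes `NUM_FRAMES` and `LATENT_DIM` are hypothetical.
- The rigid branch uses one simple parametrization: an axis-angle rotation converted with Rodrigues' formula, plus a translation.
- The methods above do not necessarily parametrize rigid motion this way.

```python
import numpy as np

rng = np.random.default_rng(0)
NUM_FRAMES, LATENT_DIM = 10, 8

def init_mlp(sizes):
    # Random weights stand in for a trained network (illustration only).
    return [(rng.normal(0.0, 1.0 / np.sqrt(m), (m, n)), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]

def mlp(params, h):
    for i, (W, b) in enumerate(params):
        h = h @ W + b
        if i < len(params) - 1:
            h = np.maximum(h, 0.0)  # ReLU on hidden layers
    return h

# --- INPUT: how the frame enters the deformation MLP ---
# One learnable code per frame (random here; optimized during training).
latent_table = rng.normal(0.0, 0.01, (NUM_FRAMES, LATENT_DIM))

def encode_explicit(frame_idx, n):
    # EXPLICIT conditioning: the normalized frame index as a scalar input.
    return np.full((n, 1), frame_idx / (NUM_FRAMES - 1))

def encode_latent(frame_idx, n):
    # LATENT conditioning: look up this frame's learnable code.
    return np.tile(latent_table[frame_idx], (n, 1))

# --- OUTPUT: how the deformation is parametrized ---
def apply_translation(x, out):
    # Scene flow: a 3D offset per point.
    return x + out[:, :3]

def rodrigues(r):
    # Axis-angle vectors (N, 3) -> rotation matrices (N, 3, 3).
    theta = np.linalg.norm(r, axis=1, keepdims=True)
    k = r / np.maximum(theta, 1e-8)
    K = np.zeros((r.shape[0], 3, 3))  # cross-product matrices [k]_x
    K[:, 0, 1], K[:, 0, 2] = -k[:, 2], k[:, 1]
    K[:, 1, 0], K[:, 1, 2] = k[:, 2], -k[:, 0]
    K[:, 2, 0], K[:, 2, 1] = -k[:, 1], k[:, 0]
    th = theta[:, :, None]
    return np.eye(3) + np.sin(th) * K + (1.0 - np.cos(th)) * (K @ K)

def apply_rigid(x, out):
    # Rigid motion: per-point rotation (first 3 outputs) + translation (last 3).
    R = rodrigues(out[:, :3])
    return np.einsum("nij,nj->ni", R, x) + out[:, 3:6]

# One small MLP per (input, output) combination.
nets = {
    (cond, motion): init_mlp([3 + (LATENT_DIM if cond == "latent" else 1), 64,
                              6 if motion == "rigid" else 3])
    for cond in ("explicit", "latent") for motion in ("translation", "rigid")
}

def warp(x, frame_idx, conditioning="latent", motion="rigid"):
    # Map observed points at frame_idx into the canonical frame.
    n = x.shape[0]
    encode = encode_latent if conditioning == "latent" else encode_explicit
    out = mlp(nets[(conditioning, motion)],
              np.concatenate([x, encode(frame_idx, n)], axis=1))
    return apply_rigid(x, out) if motion == "rigid" else apply_translation(x, out)
```

For example, `warp(points, 3, conditioning="explicit", motion="translation")` corresponds to scene-flow offsets driven by the raw frame index. The defaults instead pair per-frame latent codes with a rigid motion field. In a real system, the latent table and MLP weights would typically be optimized jointly with the canonical radiance field.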
