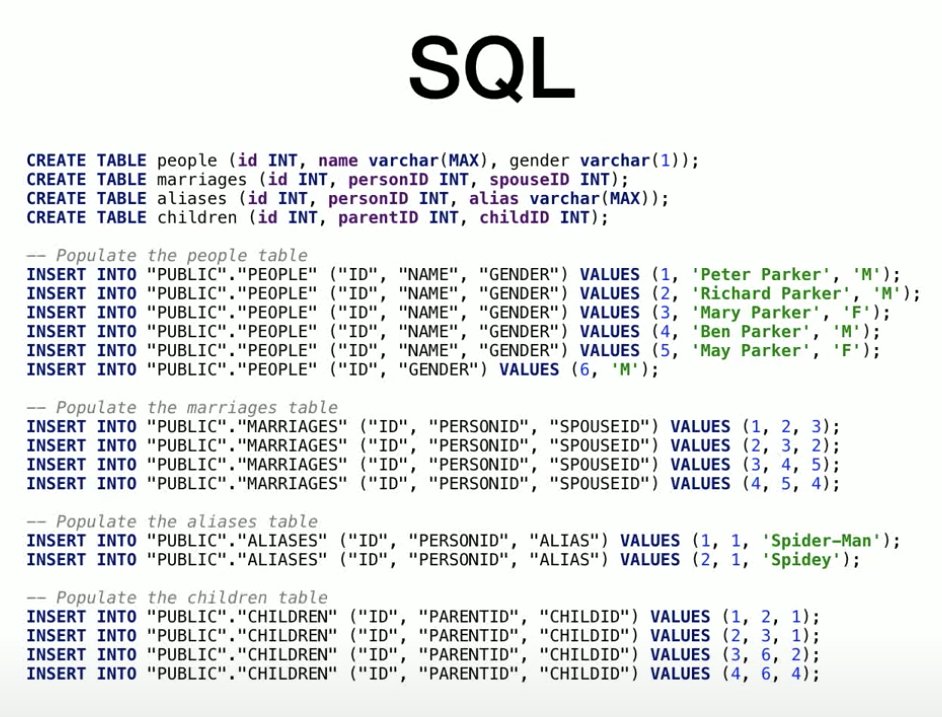

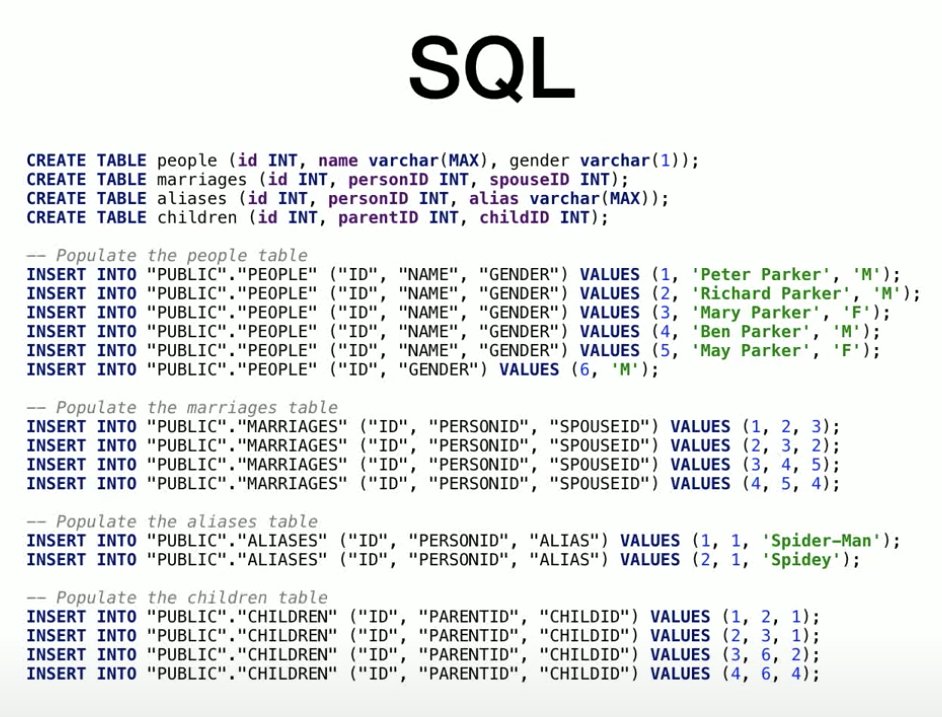

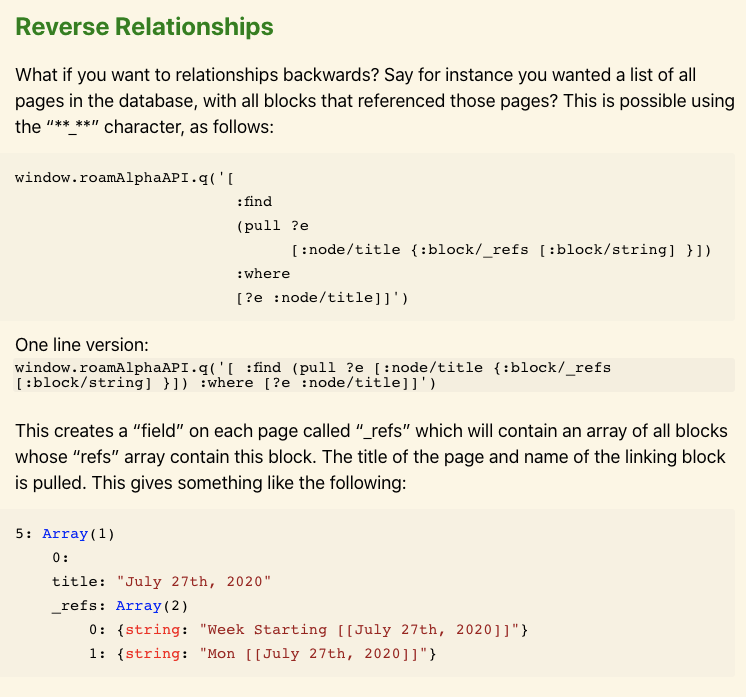

For people curious about the Roam API and confused by the syntax, or interested in why Conor went with Datomic/Datascript and not a traditional database, this older talk by Roam developer @mark_bastian is a great overview.

You should be able to model the entire Spiderman story in Roam.

Page title: Peter Parker

Child of:: [[Richard Parker]] [[Mary Parker]]

Aliases:: [[Spidey]]

etc., and run queries like these:

"Show me companies in Boise, Idaho, founded by women, whose valuation is lower than 10X ARR"

"Show me a graph of my sleep quality versus days in which I ate foods that had gluten in them or not" (where [[bread]] has a page with ingredients::).
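Roughly why this works: Datomic/DataScript-style stores keep facts as entity-attribute-value triples, and a query is a pattern match over them. Here is a minimal Python sketch of that idea. It is not Roam's actual API; the `facts` list and the `values` / `entities_with` helpers are made up for illustration.

```python
# Hypothetical facts, written as (entity, attribute, value) triples.
facts = [
    ("Peter Parker", "Child of", "Richard Parker"),
    ("Peter Parker", "Child of", "Mary Parker"),
    ("Peter Parker", "Aliases", "Spidey"),
]


def values(entity, attribute):
    """Return every value recorded for this entity/attribute pair."""
    return [v for (e, a, v) in facts if e == entity and a == attribute]


def entities_with(attribute, value):
    """Return every entity that has this attribute set to this value."""
    return [e for (e, a, v) in facts if a == attribute and v == value]


# "Who are Peter Parker's parents?"
parents = values("Peter Parker", "Child of")

# The same facts answer the reverse question: "Who is a child of Mary Parker?"
children_of_mary = entities_with("Child of", "Mary Parker")
```

Because every fact has the same shape, the same data can be queried from either direction without planning for it up front.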

More from Tech

The entire discussion around Facebook’s disclosures of what happened in 2016 is very frustrating. No exec stopped any investigations, but there were a lot of heated discussions about what to publish and when.

In the spring and summer of 2016, as reported by the Times, activity we traced to GRU was reported to the FBI. This was the standard model of interaction companies used for nation-state attacks against likely US targets.

In the Spring of 2017, after a deep dive into the Fake News phenomena, the security team wanted to publish an update that covered what we had learned. At this point, we didn’t have any advertising content or the big IRA cluster, but we did know about the GRU model.

This report went through dozens of edits as different equities were represented. I did not have any meetings with Sheryl on the paper, but I can’t speak to whether she was in the loop with my higher-ups.

In the end, the difficult question of attribution was settled by us pointing to the DNI report instead of saying Russia or GRU directly. In my pre-briefs with members of Congress, I made it clear that we believed this action was GRU.

The story doesn’t say you were told not to... it says you did so without approval and they tried to obfuscate what you found. Is that true?

— Sarah Frier (@sarahfrier) November 15, 2018

Machine translation can be a wonderful translation tool, but its uses are widely misunderstood.

Let's talk about Google Translate, its current state in the professional translation industry, and why robots are terrible at interpreting culture and context.

Straight to the point: machine translation (MT) is an incredibly helpful tool for translation! But just like any tool, there are specific times and places for it.

You wouldn't use a jackhammer to nail a painting to the wall.

Two factors are at play when determining how useful MT is: language pair and context.

Certain language pairs are better suited for MT. Typically, the more similar the grammar structure, the better the MT will be. Think Spanish <> Portuguese vs. Spanish <> Japanese.

No two MT engines are the same, though! Check out how human professionals ranked their choice of MT engine in a Phrase survey:

https://t.co/yiVPmHnjKv

When it comes to context, the first thing to look at is the type of text you want to translate. Typically, the more technical and straightforward the text, the better a machine will be at working on it.

THREAD: How is it possible to train a well-performing, advanced Computer Vision model 𝗼𝗻 𝘁𝗵𝗲 𝗖𝗣𝗨? 🤔

At the heart of this lies the most important technique in modern deep learning - transfer learning.

Let's analyze how it

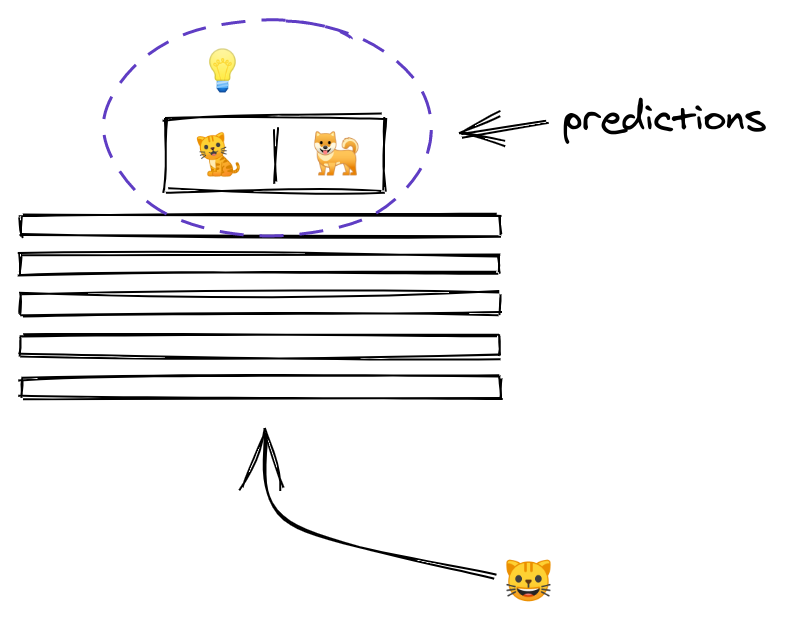

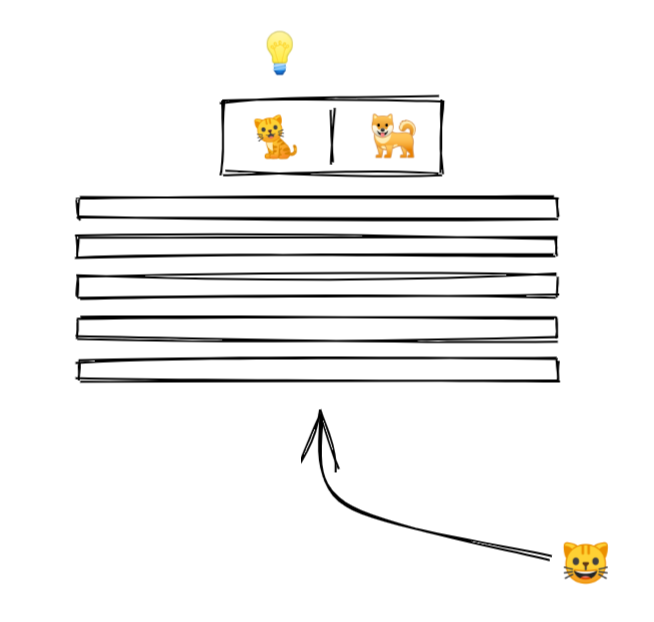

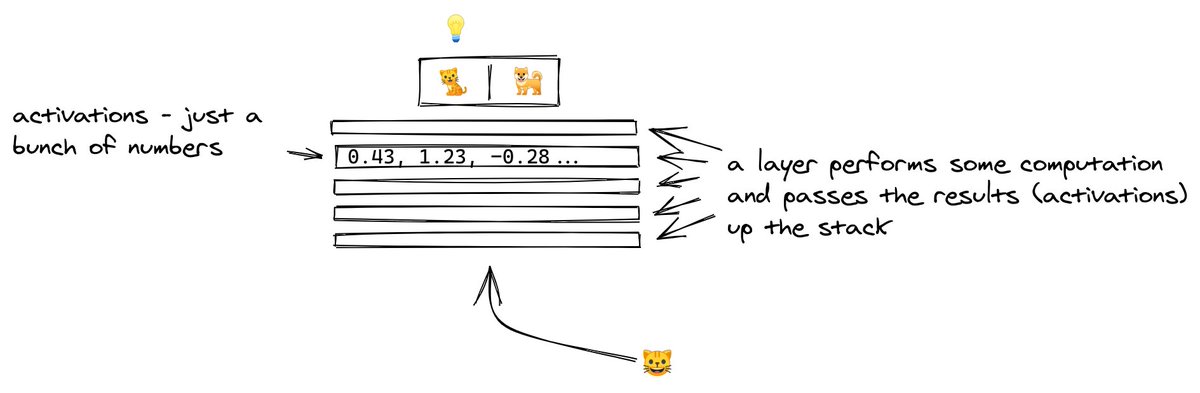

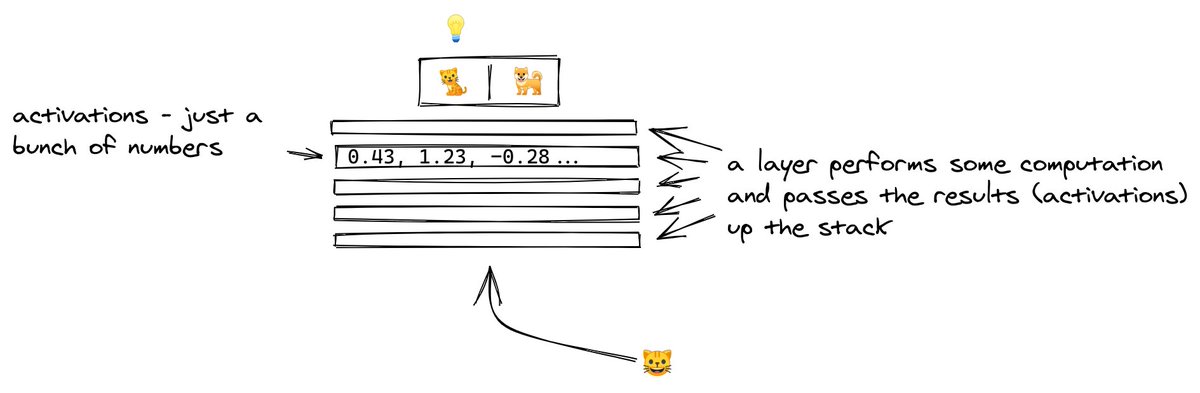

2/ For starters, let's look at what a neural network (NN for short) does.

An NN is like a stack of pancakes, with computation flowing up when we make predictions.

How does it all work?

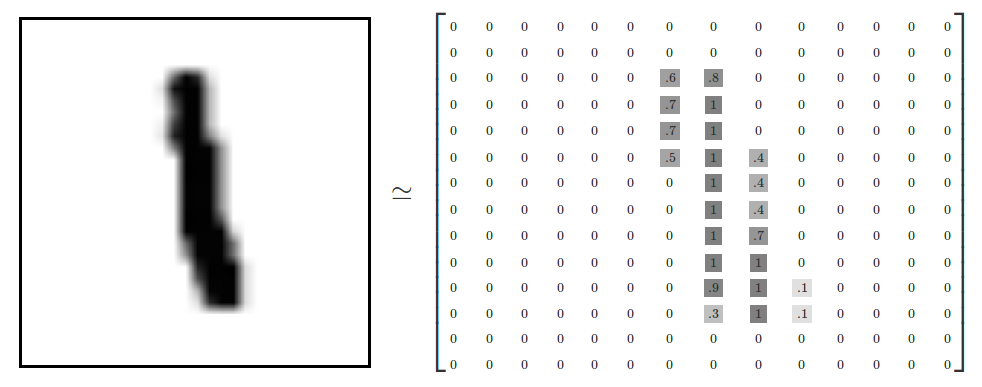

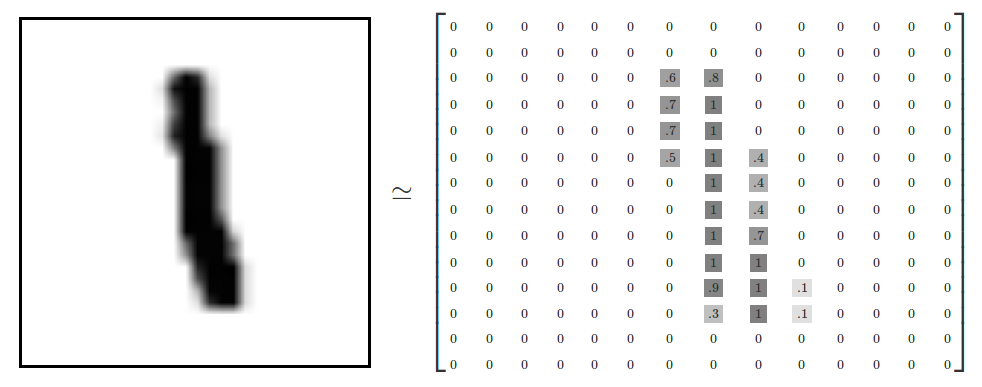

3/ We show an image to our model.

An image is a collection of pixels. Each pixel is just a bunch of numbers describing its color.

Here is what it might look like for a black and white image
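As a rough sketch in Python (the pixel values and the `render` helper are made up for illustration), a tiny black and white image is just a grid of brightness numbers:

```python
# A made-up 5x5 black and white image: 0 is black, 255 is white.
image = [
    [0,   0, 255,   0,   0],
    [0, 255, 255, 255,   0],
    [255, 255, 255, 255, 255],
    [0, 255, 255, 255,   0],
    [0,   0, 255,   0,   0],
]

height = len(image)
width = len(image[0])


def render(img):
    """Draw bright pixels as '#' and dark pixels as '.' so the grid is readable."""
    return "\n".join(
        "".join("#" if pixel > 127 else "." for pixel in row) for row in img
    )


print(render(image))
```

A color image works the same way, except each pixel holds several numbers (for example one each for red, green, and blue) instead of one.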

4/ The picture goes into the layer at the bottom.

Each layer performs computation on the image, transforming it and passing it upwards.

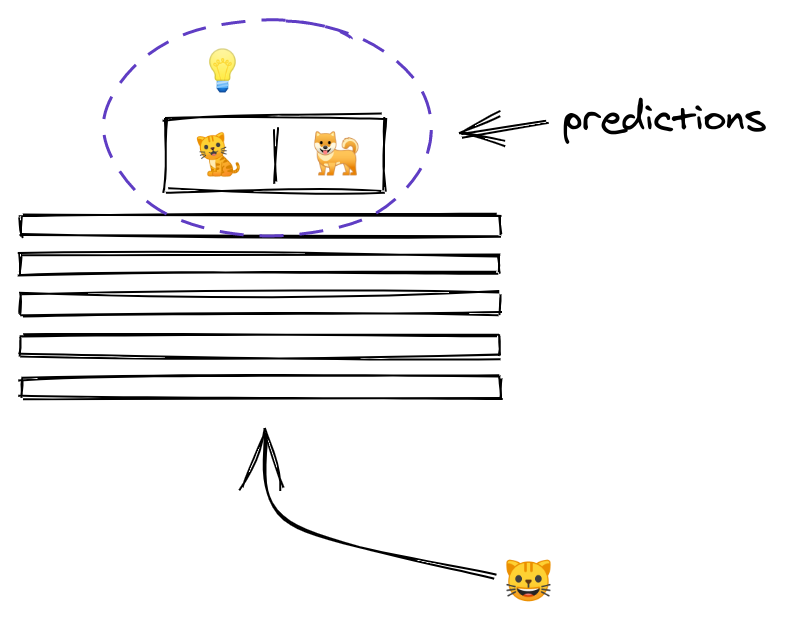

5/ By the time the image reaches the uppermost layer, it has been transformed to the point that it now consists of two numbers only.

The outputs of a layer are called activations, and the outputs of the last layer have a special meaning... they are the predictions!
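To make the pancake stack concrete, here is a minimal Python sketch of a forward pass. It assumes two simple fully connected layers with made-up weights; the model in this thread is far larger and its layer types are not described here, so treat the names and numbers below as illustration only.

```python
def dense(inputs, weights, biases):
    """One layer: each output is a weighted sum of the inputs plus a bias."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def relu(values):
    """Keep positive activations and replace negative ones with zero."""
    return [max(0.0, v) for v in values]


# A made-up 2x2 image, flattened into four pixel values.
pixels = [0.0, 1.0, 1.0, 0.0]

# Bottom layer: 4 inputs -> 3 activations (weights are invented).
layer1_w = [
    [1.0, 0.0, 0.0, 1.0],
    [0.0, 1.0, 1.0, 0.0],
    [0.5, 0.5, 0.5, 0.5],
]
layer1_b = [0.0, 0.0, -1.0]

# Top layer: 3 activations -> 2 numbers, one per class.
layer2_w = [
    [1.0, -1.0, 0.0],
    [-1.0, 1.0, 0.0],
]
layer2_b = [0.0, 0.0]

# Computation flows upwards: pixels -> activations -> predictions.
hidden = relu(dense(pixels, layer1_w, layer1_b))
predictions = dense(hidden, layer2_w, layer2_b)

print(hidden)
print(predictions)
```

The larger of the two final numbers is the class the model leans towards. Training is the process of finding weights that make those numbers useful.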

THREAD: Can you start learning cutting-edge deep learning without specialized hardware? 🤖

— Radek Osmulski (@radekosmulski) February 11, 2021

In this thread, we will train an advanced Computer Vision model on a challenging dataset. 🐕🐈 Training completes in 25 minutes on my 3yrs old Ryzen 5 CPU.

Let me show you how...
