by Marc Peter Deisenroth, A. Aldo Faisal, and Cheng Soon Ong

https://t.co/zSpp67kJSg

Note: this is probably the best place to start. Go slowly and work through some examples. Pay close attention to the notation and get comfortable with it.

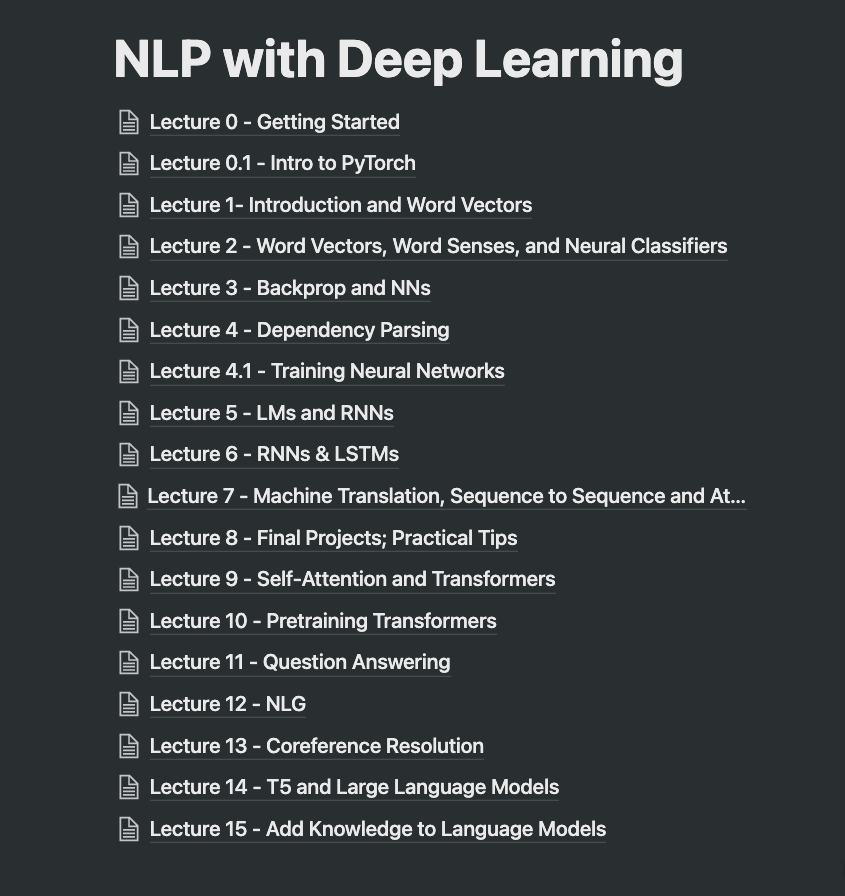

I've been writing notes for the latest Deep Learning for NLP course by Stanford.

For fun, I also started to add my own code snippets into the notes. I think this is a more efficient way to study: theory + code.

Plan to share these notes soon. Stay tuned! pic.twitter.com/hWzZDORbl6

— elvis (@omarsar0) January 14, 2022

When are you doing pie charts?

— #BlackLivesMatter (@surt_lab) October 13, 2020

If everyone was holding bitcoin on the old x86 in their parents' basement, we would be finding a price bottom. The problem is the risk is all pooled at a few brokerages and a network of rotten exchanges with counterparty risk that makes AIG circa 2008 look like a good credit.

— Greg Wester (@gwestr) November 25, 2018