This is SUCH a good essay.

This is an essay about designing AI with purpose, but really it's an essay about helping people clearly communicate their intentions. https://t.co/5L3FcrNJp6

— Josh Lovejoy (@jdlovejoy) January 20, 2021

The thing that fascinates me most about ML is that we want AI to be an angel, essentially--inhuman in its perfection but human in its compassion. Like us enough to care about us but without any of our flaws.

authoritarianism vs. partnership

"As parents and caregivers, we recognize that it’s our responsibility to advise and not simply admonish."

but often it's not

But as the essay notes, ML reverses the logic of traditional programming, in that it starts with outcomes and works toward rules rather than vice versa.

More from Culture

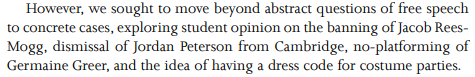

One of the authors of the Policy Exchange report on academic free speech thinks it is "ridiculous" to expect him to accurately portray an incident at Cardiff University in his study, both in the reporting and in a question put to a student sample.

Here is the incident Kaufmann incorporated into his study, as told by a Cardiff professor who was there. As you can see, the incident involved the university intervening to *uphold* free speech principles:

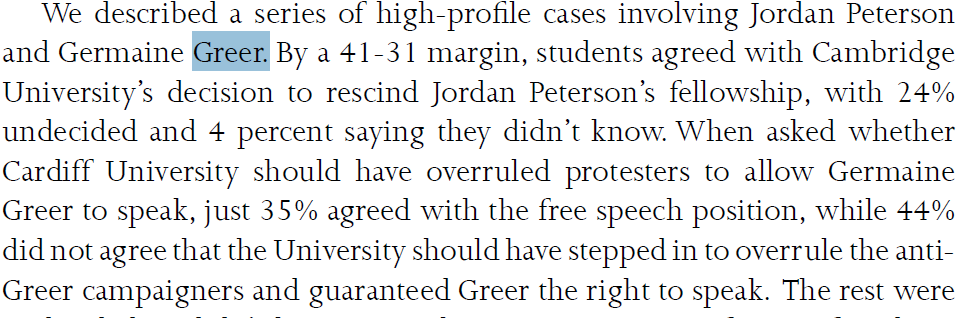

Here is the first mention of the Greer at Cardiff incident in Kaufmann's report. It refers to the "concrete case" of the "no-platforming of Germaine Greer". Any reasonable reader would assume that refers to an incident of no-platforming instead of its opposite.

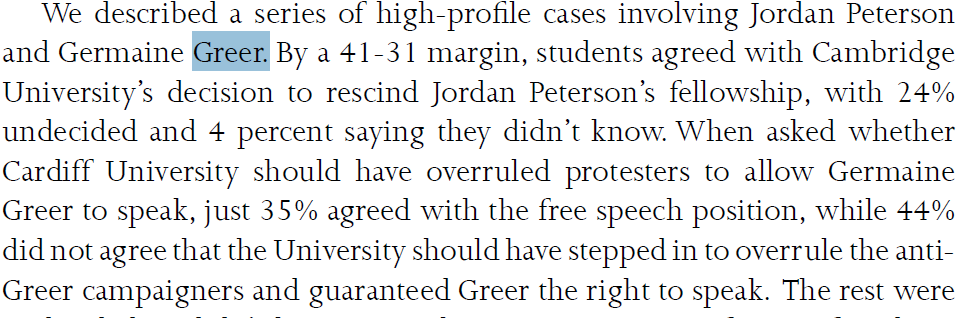

Here is the next mention of Greer in the report. The text asks whether the University "should have overruled protestors" and "stepped in...and guaranteed Greer the right to speak". Again the strong implication is that this did not happen and Greer was "no platformed".

The authors could easily have added a footnote at this point explaining what actually happened in Cardiff. They did not.

This is ridiculous. Students were asked for their views on this example and several others. The study findings and conclusions were about student responses not the substance of each case. Could’ve used hypotheticals. The responses not the cases were the basis of the conclusions.

— Eric Kaufmann (@epkaufm) February 17, 2021

Here is the incident Kaufmann incorporated into his study, as told by a Cardiff professor who was there. As you can see, the incident involved the university intervening to *uphold* free speech principles:

The UK govt’s paper on free speech in Unis (with implications for Wales) is getting a lot of attention.

Worth noting then that an important part of the evidence-base on which it rests relates to (demonstrably false) claims about my own institution

1/https://t.co/buoGE7ocG7

— Richard Wyn Jones (@RWynJones) February 16, 2021
