Once you move above that mark, it becomes exponentially, far and away beyond anything I dreamed, more difficult.

I think about this a lot, both in IT and civil infrastructure. It looks so trivial to “fix” from the outside. In fact, it is incredibly draining to do the entirely crushing work of real policy changes internally. It’s harder than drafting a blank page of how the world should be.

The tragedy of revolutionaries is they design a utopia by a river but discover the impure city they razed was on stilts for a reason.

— SwiftOnSecurity (@SwiftOnSecurity) June 19, 2016

God I wish I lived in that world of triviality. In moments, I find myself regretting leaving that place of self-directed autonomy.

More from Tech

1. One of the best changes in recent years is the GOP abandoning libertarianism. Here's GOP Rep. Greg Steube: “I do think there is an appetite amongst Republicans, if the Dems wanted to try to break up Big Tech, I think there is support for that.”

2. And @RepKenBuck, who offered a thoughtful Third Way report on antitrust law in 2020, weighed in quite reasonably on Biden antitrust frameworks.

3. I believe this change is sincere because it's so pervasive and beginning to result in real policy changes. Example: The North Dakota GOP is taking on Apple's app store.

Republican North Dakota legislators have introduced #SB2333, a bill that prohibits large tech companies from locking their users into a single app store or payment processor. https://t.co/PgyhgOhFAl

— Cory Doctorow #BLM (@doctorow) February 11, 2021

1/ pic.twitter.com/KZ8BMFQoPO

4. And yet there's a problem. The GOP establishment is still pro-big tech. Trump, despite some of his instincts, appointed pro-monopoly antitrust enforcers. Antitrust chief Makan Delrahim helped big tech, and the antitrust case happened bc he was recused.

5. At the other sleepy antitrust agency, the Federal Trade Commission, Trump-appointed commissioners @FTCPhillips and @CSWilsonFTC are both pro-monopoly. Both voted *against* the antitrust case on FB. That case was 3-2, with a GOP Chair and 2 Dems teaming up against 2 Rs.

You May Also Like

So friends here is the thread on the recommended pathway for new entrants in the stock market.

Here I will share what I believe are the essentials for anybody interested in stock markets, along with the resources to learn them. It's from my experience and by no means exhaustive.

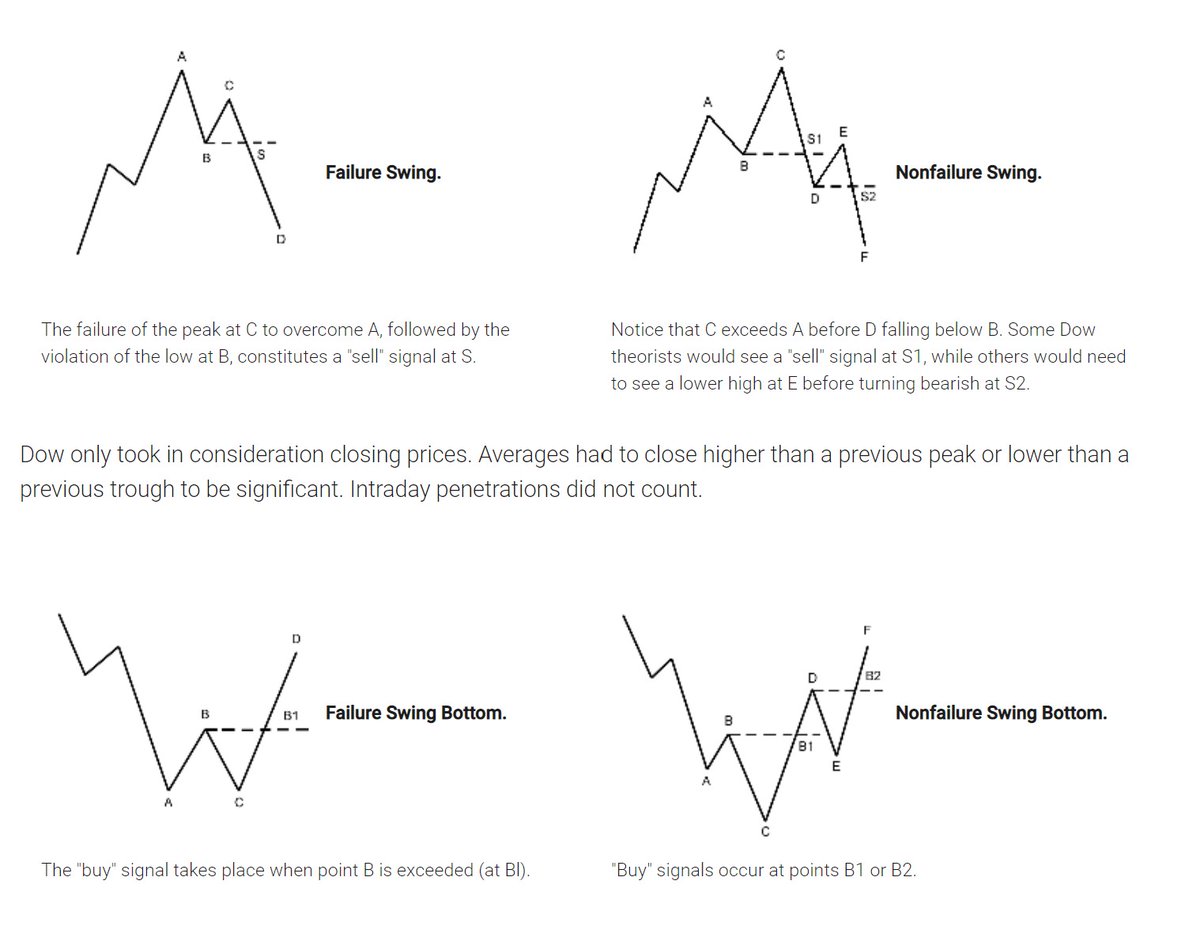

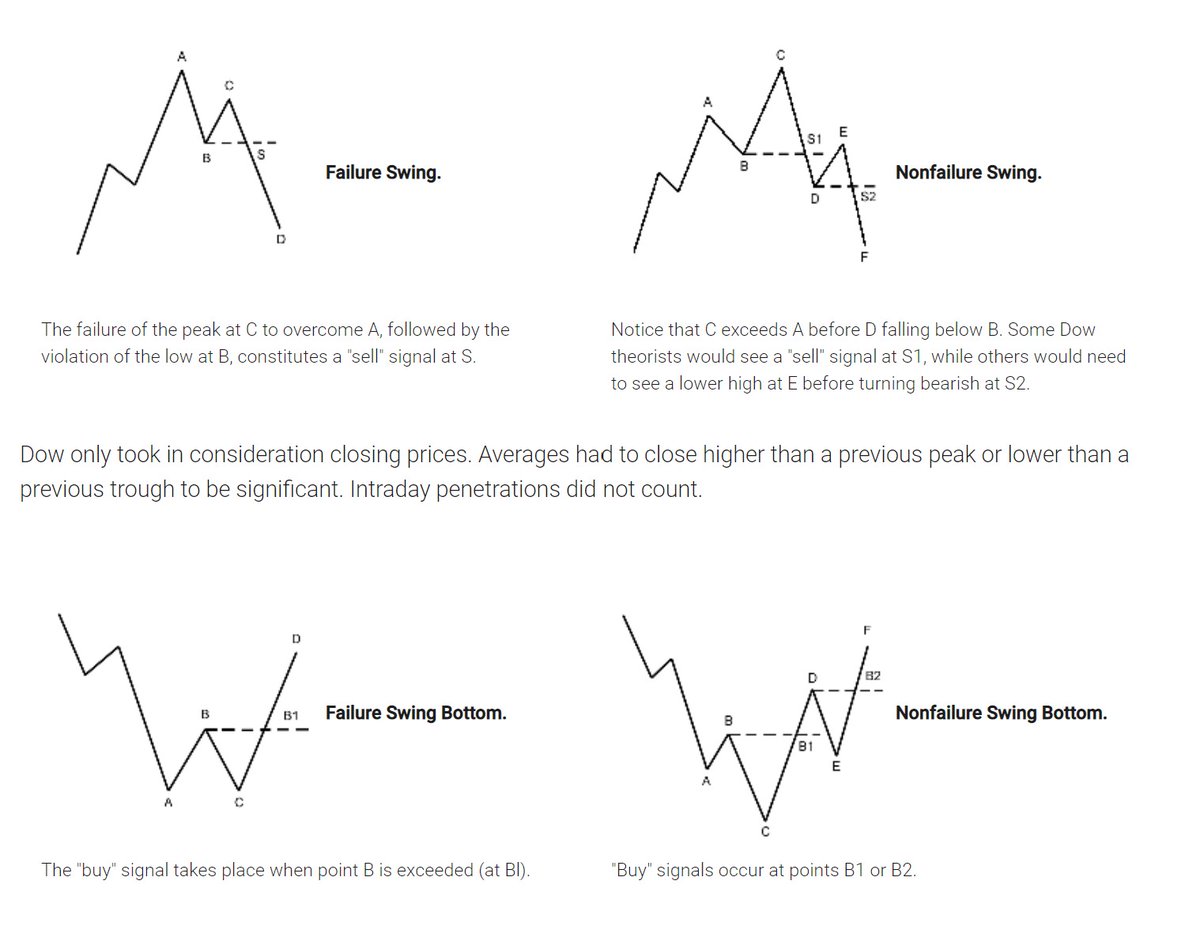

First, the very basics: Dow theory. Everybody must have a basic understanding of it and must learn to observe Higher Highs, Higher Lows, Lower Highs and Lower Lows on charts and their

Even those who are more inclined towards the fundamental side can benefit from Dow theory, as it can hint at the start and end of bull/bear runs, thereby indicating entries and exits.
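For anyone who wants to see the Higher High / Higher Low idea on raw numbers before looking at charts, here is a minimal Python sketch. It is not from the thread: the pivot rule (a bar counts as a swing if it is above or below both of its immediate neighbours) is a simplifying assumption, the function names `find_swings` and `label_swings` are made up for illustration, and the prices are invented.

```python
from typing import Dict, List, Tuple


def find_swings(prices: List[float]) -> List[Tuple[int, str, float]]:
    """Return (index, kind, price) for simple local peaks ("high") and troughs ("low").

    Assumption: a swing is any bar strictly above or below both neighbours.
    """
    swings = []
    for i in range(1, len(prices) - 1):
        prev, cur, nxt = prices[i - 1], prices[i], prices[i + 1]
        if cur > prev and cur > nxt:
            swings.append((i, "high", cur))
        elif cur < prev and cur < nxt:
            swings.append((i, "low", cur))
    return swings


def label_swings(prices: List[float]) -> List[Tuple[int, str]]:
    """Label each swing HH, LH, HL or LL against the previous swing of the same kind."""
    last: Dict[str, float] = {}  # most recent swing price for "high" and for "low"
    labels = []
    for idx, kind, price in find_swings(prices):
        # The first swing of each kind has nothing to compare against, so it gets no label.
        if kind in last and price != last[kind]:
            if kind == "high":
                tag = "HH" if price > last[kind] else "LH"
            else:
                tag = "HL" if price > last[kind] else "LL"
            labels.append((idx, tag))
        last[kind] = price
    return labels


# Hypothetical closing prices, invented for illustration only.
closes = [10, 12, 11, 14, 13, 16, 12, 15, 11]
print(label_swings(closes))
# [(3, 'HH'), (4, 'HL'), (5, 'HH'), (6, 'LL'), (7, 'LH')]
```

In this toy series the run of HH and HL labels is followed by an LL and then an LH, which is the kind of shift a Dow theory reader would watch for as a possible end of an up-move. Real charts are noisier, so a wider pivot window would likely be needed in practice.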

The next basic is Wyckoff's theory. It tells how accumulation and distribution happen with regularity and how the market actually

Dow theory is old but

Old is Gold....

— Professor (@DillikiBiili) January 23, 2020

this Bharti Airtel chart is a true copy of the Wyckoff Pattern propounded in 1931....... pic.twitter.com/tQ1PNebq7d