They sure will. Here's a quick analysis of how, from my perspective as someone who studies societal impacts of natural language technology:

I have no idea how, but these will end up being racist https://t.co/pjZN0WXnnE

— Michael Hobbes (@RottenInDenmark) December 16, 2020

More from Tech

🙂 Hey - have you heard of @RevolutApp Business before?

🌐 Great international transfer and 🏦 foreign #exchange rates, and various tools to manage your #business.

👉 https://t.co/dkuBrYrfMq

#banking #fintech #revolut #growth #startups

1/10

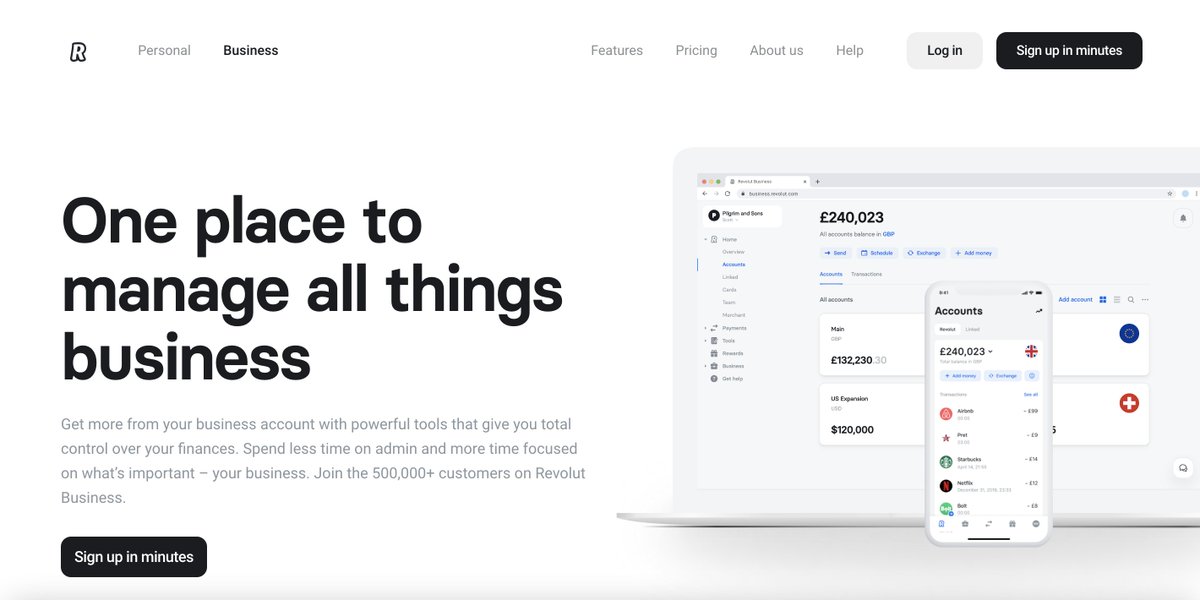

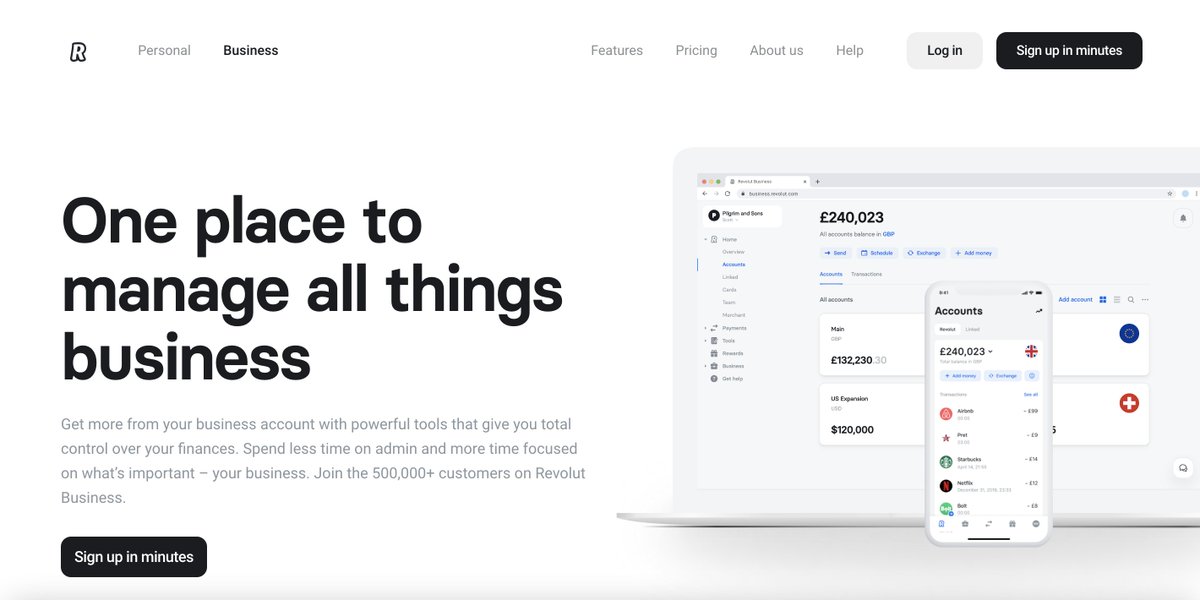

One place to manage all things business

Get more from your business account with powerful tools that give you total control over your finances.

👉 https://t.co/dkuBrYrfMq

2/10

Accept payments online at great rates

Receive card payments from around the world with low fees and next-day settlement.

👉 https://t.co/dkuBrYrfMq

3/10

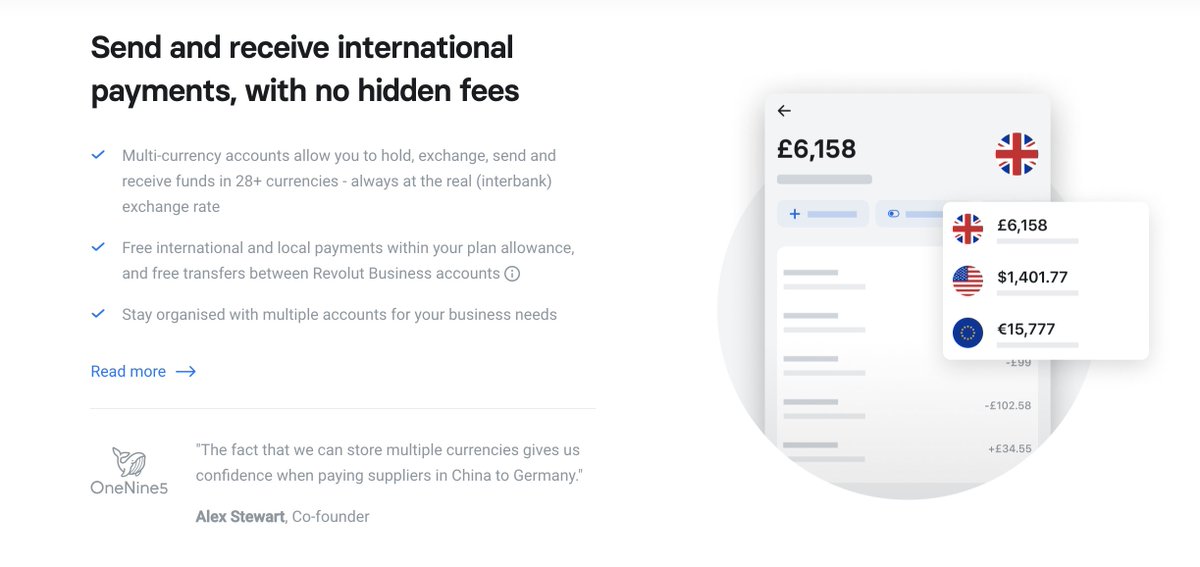

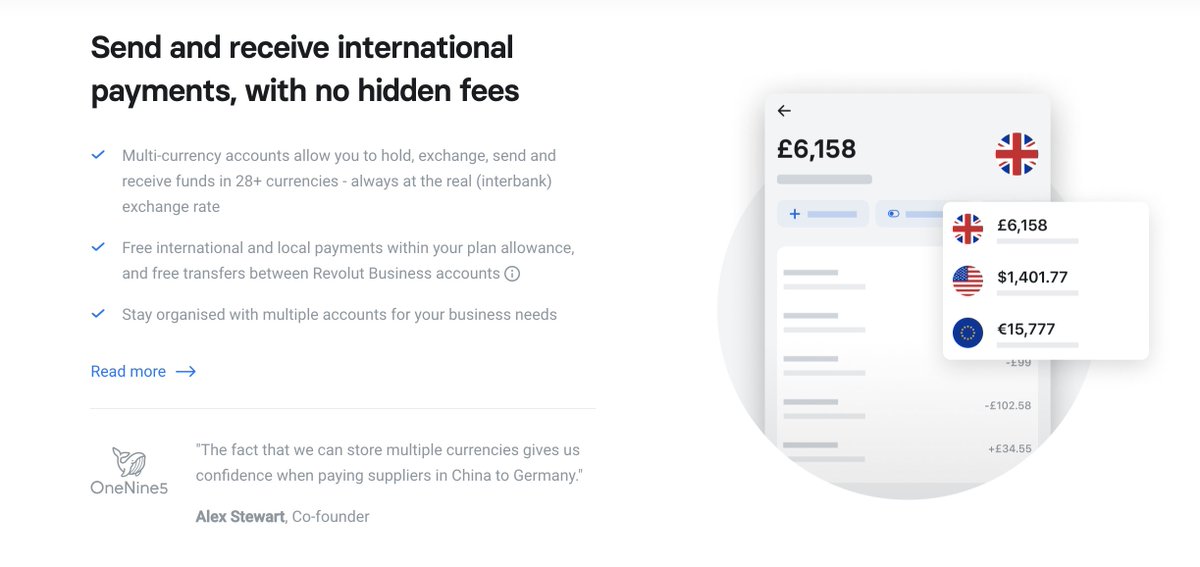

Send and receive international payments, with no hidden fees

Multi-currency accounts allow you to hold, exchange, send and receive funds in 28+ currencies - always at the real (interbank) exchange rate...

👉 https://t.co/dkuBrYrfMq

4/10

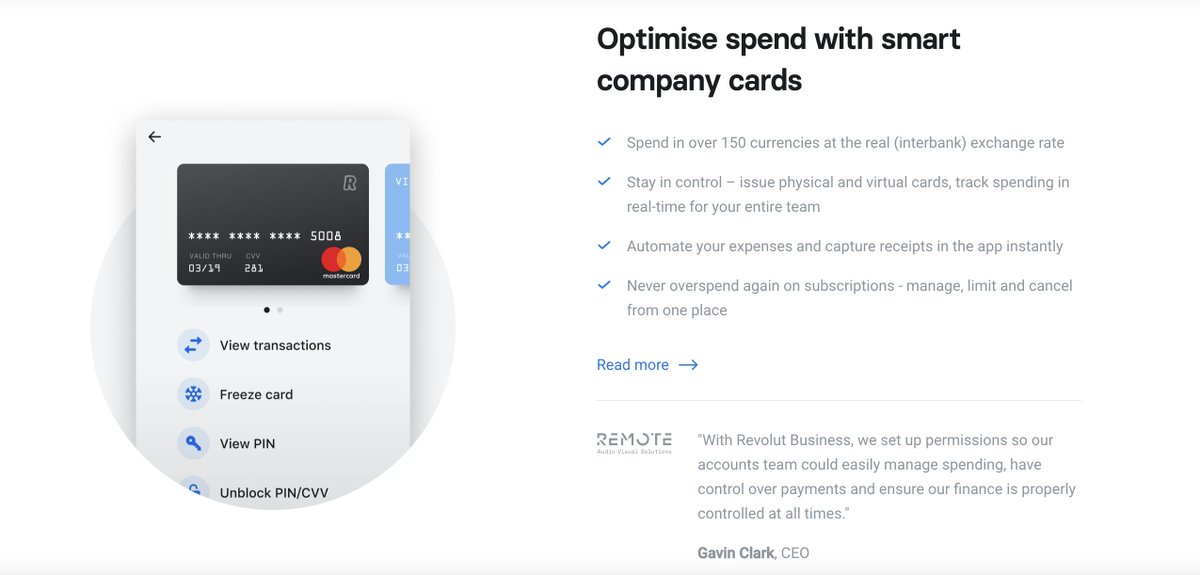

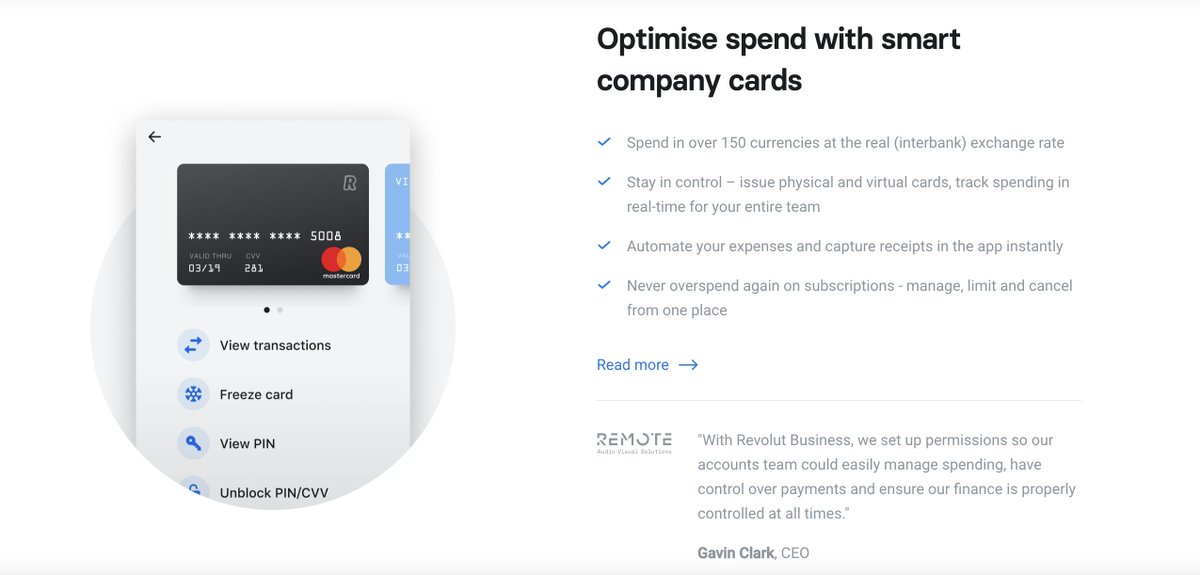

Optimise spend with smart company cards

Spend in over 150 currencies at the real (interbank) exchange rate

Stay in control – issue physical and virtual cards, track spending in real-time for your entire team...

👉 https://t.co/dkuBrYrfMq

5/10

You May Also Like

THREAD PART 1.

On Sunday 21st June, 14-year-old Noah Donohoe left his home to meet his friends at Cave Hill, Belfast, to study for school. #RememberMyNoah💙

He was on his black Apollo mountain bike, fully dressed, wearing a helmet and carrying a backpack containing his laptop and 2 books with his name on them. He also had his mobile phone with him.

On the 27th of June, Noah's naked body was sadly discovered 950m inside a storm drain, between access points. This storm drain was accessible through an area completely unfamiliar to him, behind houses at Northwood Road. https://t.co/bpz3Rmc0wq

"Noah's body was found by specially trained police officers between two drain access points within a section of the tunnel running under the Translink access road," said Mr McCrisken.

Noah's bike was also found near a house, behind a car, in the same area. It had been there for more than 24 hours before a member of the public who lived in the street said she had read reports of a missing child, checked the bike and phoned the police.
