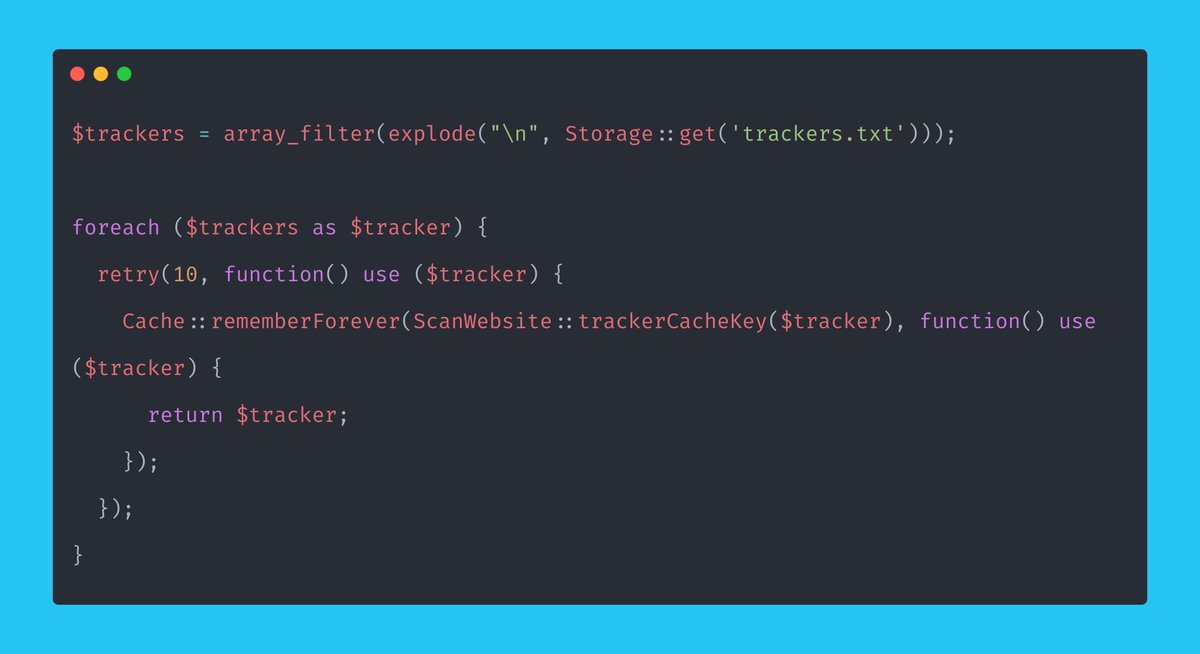

We just launched a fun little tool called Phantom Analyzer. It’s a 100% serverless tool that scans websites for hidden tracking pixels.

I want to talk about how we built it 👇

> Laravel Vapor

> ChipperCI for deployment

> SQS for queues

> DynamoDB for the database

We went with DynamoDB because we don’t want to worry about scaling our database!

> How will we scan websites for tracking pixels?

> How will we utilize the queue and check the job is done?

> How will we validate the URL?

Wait a minute...

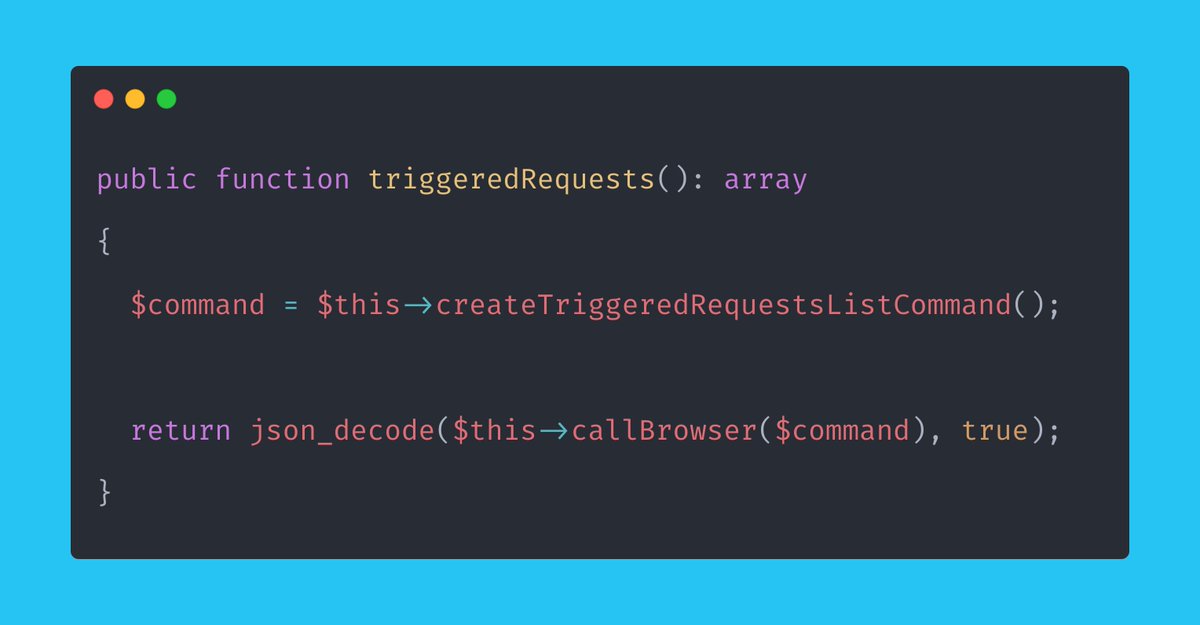

Yes, out of the box, Browsershot already had what I needed. Are you kidding me?

> 1024MB of RAM

> 2048MB of RAM for the queue (could likely reduce!)

> Warm set to 500

> CLI Timeout of 180 seconds

Those settings all worked nicely.
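For anyone who wants to try something similar, here is a rough sketch of how those values might look in a `vapor.yml`. The `id`, `name`, and `production` environment name are placeholders I made up for illustration, and the key names are the ones I understand Vapor to use, so check them against the Vapor docs before copying.

```yaml
# vapor.yml (sketch only; id, name, and environment name are hypothetical)
id: 12345
name: phantom-analyzer
environments:
  production:
    memory: 1024        # MB of RAM for the HTTP function
    queue-memory: 2048  # MB of RAM for the queue function (could likely reduce)
    warm: 500           # number of containers kept pre-warmed
    cli-timeout: 180    # CLI timeout in seconds
```

The queue gets more memory than the web function because that is where the heavy scanning work runs.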

More from Tech

I think about this a lot, both in IT and civil infrastructure. It looks so trivial to “fix” from the outside. In fact, it is incredibly draining to do the entirely crushing work of real policy changes internally. It’s harder than drafting a blank page of how the world should be.

I’m at a sort of career crisis point. In my job before, three people could contain the entire complexity of a nation-wide company’s IT infrastructure in their head.

Once you move above that mark, it becomes exponentially, far and away beyond anything I dreamed, more difficult.

And I look at candidates and know-everything’s who think it’s all so easy. Or, people who think we could burn it down with no losses and start over.

God I wish I lived in that world of triviality. In moments, I find myself regretting leaving that place of self-directed autonomy.

For ten years I knew I could build something and see results that same day. Now I’m adjusting to building something in my mind in one day, and it taking a year to do the due-diligence and edge cases and documentation and familiarization and roll-out.

That’s the hard work. It’s not technical. It’s not becoming a rockstar to peers.

These people look at me and just see another self-important idiot in Security who thinks they understand the system others live. Who thinks “bad” designs were made for no reason.

Who wasn’t there.

The tragedy of revolutionaries is they design a utopia by a river but discover the impure city they razed was on stilts for a reason.

— SwiftOnSecurity (@SwiftOnSecurity) June 19, 2016
