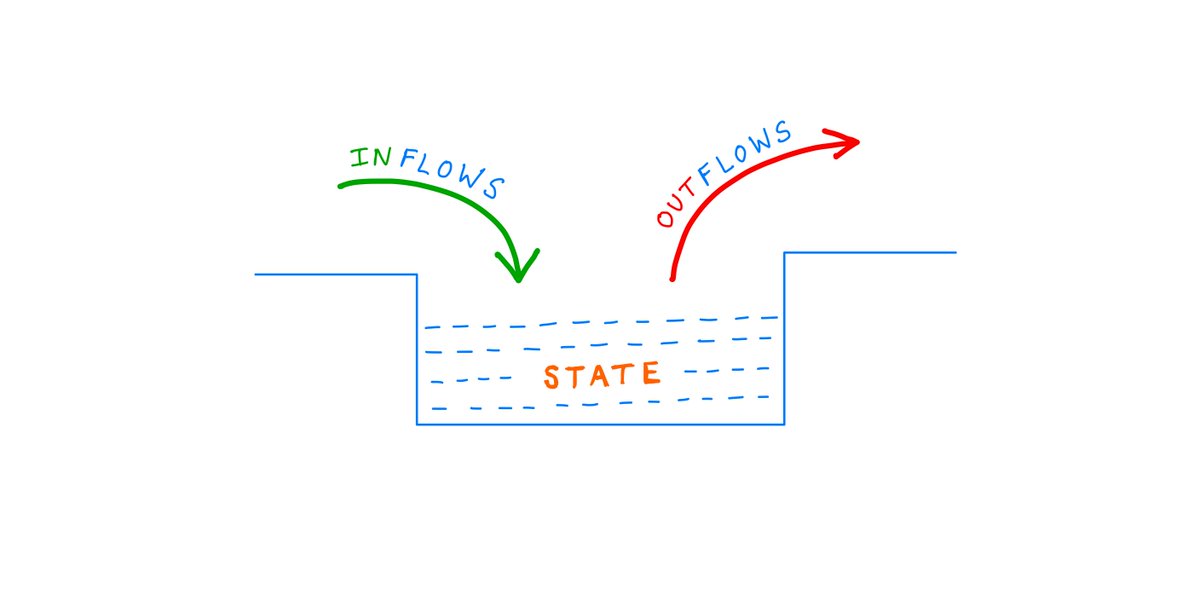

A short thread on what I think is particularly useful intuition for the application of computational statistics.

💥and so it begins..💥

It's time, my friends 🤩🤩

[Thread] #ProjectOdin

https://t.co/fO90N78fta

new quantum-based internet #ElonMusk #QVS #QFS

Political justification ⏬⏬

#ProjectOdin

#ProjectOdin #Starlink #ElonMusk #QuantumInternet

The Alliance has Project Odin ready to go - the new quantum-based internet. #ElonMusk #QVS #QFS #ProjectOdin

— Der Preuße Parler: @DerPreusse (@DerPreusse1963) January 12, 2021

#DDDD $LBPS $LOAC

A thread on the potential near term catalysts behind why I have increased my position in 4d Pharma @4dpharmaplc (LON: #DDDD):

1) NASDAQ listing. This is the most obvious.

The idea behind this is that the huge pool of capital and institutional interest in the NASDAQ will enable a higher per-share valuation for #DDDD than was achievable in the UK.

Comparators to @4dpharmaplc #DDDD (market capitalisation £150m) on the NASDAQ and their market capitalisation:

Seres Therapeutics: $2.33bn = £1.72bn (has had a successful phase 3 C. difficile trial); from my previous research (below) the chance of #DDDD achieving this at least once is at least

Kaleido Biosciences: $347m = £256m. 4 products under consideration, compared to #DDDD's potential 16. When you view @4dpharmaplc's 1000+ patents and AI-driven MicroRx platform (not to mention their end-to-end manufacturing capability), 4d's undervaluation is clear.
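A quick sanity check on these comparator figures, as a minimal Python sketch that uses only the numbers quoted in the thread. Both dollar-to-sterling conversions imply the same exchange rate (about 0.738 GBP per USD). Dividing each comparator's sterling market cap by #DDDD's £150m shows how many times larger it is. The function names are illustrative, not from the thread.

```python
# Market capitalisations exactly as quoted in the thread.
DDDD_GBP = 150e6  # 4d Pharma (#DDDD), £150m

COMPARATORS = {
    # name: (USD market cap, GBP market cap as quoted)
    "Seres Therapeutics": (2.33e9, 1.72e9),
    "Kaleido Biosciences": (347e6, 256e6),
}


def implied_gbp_per_usd(usd: float, gbp: float) -> float:
    """Exchange rate implied by a quoted USD -> GBP conversion."""
    return gbp / usd


def multiple_of_dddd(gbp: float) -> float:
    """How many times larger a comparator is than #DDDD's £150m."""
    return gbp / DDDD_GBP


for name, (usd, gbp) in COMPARATORS.items():
    rate = implied_gbp_per_usd(usd, gbp)
    print(f"{name}: {rate:.3f} GBP/USD, {multiple_of_dddd(gbp):.1f}x #DDDD")
```

On the thread's own numbers, Seres comes out at roughly 11.5x and Kaleido at roughly 1.7x #DDDD's market cap.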

While looking at speculative pharmaceutical stocks, I am reminded of why I am averse to these risky picks. #DDDD was compelling enough, though, to break this rule. The 10+ treatments under trial, industry-leading IP portfolio, and undervaluation relative to comparables are inescapable.

— Shrey Srivastava (@BlogShrey) December 16, 2020

Hi, I'm #MarvellousMarthy & this is a mini #GlobalScienceShow to celebrate @WomenScienceDay. I'd like to tell you about my STEM Role Model @MarineMumbles. Stick around for @philjemmett who’s up next. #WomenInSTEM #WomenInScience4SDGs #WomenInScience #girlsinSTEM

Go to https://t.co/fAM7lPSznm to watch my film. I love Rockpooling now as a hobby & I have got Mummy & Daddy into it too. I have learnt loads about marine life over the last year & Elizabeth @marinemumbles has shared her ❤️ of the oceans with me. I LOVE crabs 🦀 🦀🦀!!

This is Gem, Marthy’s Mummy. There have been so many other STEM women who have truly inspired #MarvellousMarthy over the past year: @DrJoScience for igniting a love of experiments, @ScienceAmbass for bringing giggles with some fab experiment-alongs, @HanaAyboob for introducing her to some amazing #SciArt, @BryonyMathew for releasing some fabulous books to help raise aspirations, @Astro_Nicole & @Victrix75 for allowing her to interview them as part of #worldspaceweek & @AmeliaJanePiper for the ongoing support since she won the SciComm presenter competition.

So, as you can tell from the film, Marthy adores Elizabeth & is truly inspired by her. Since engaging with her for the first time about 10 months ago, Marthy has developed a very keen & passionate interest in all things marine! The @angleseyseazoo can vouch for this!!!!

Recently I learned something about DNA that blew my mind, and in this thread, I'll attempt to blow your mind as well. Behold: Chargaff's 2nd Parity Rule for DNA N-Grams.

If you are into cryptography or reverse engineering, you should love this.

Thread:

DNA consists of four different 'bases', A, C, G and T. These bases have specific meaning within our biology. Specifically, within the 'coding part' of a gene, each triplet of bases (a codon) encodes an amino acid.
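To make the triplet idea concrete, here is a minimal Python sketch that splits a coding sequence into codons, assuming it starts in reading frame 0. The three-entry table is only an excerpt of the standard genetic code: ATG codes for methionine (and is the usual start codon), GCC codes for alanine, and TAA is a stop codon. The fragment is a made-up example.

```python
# Three-entry EXCERPT of the standard genetic code, for illustration only.
CODON_TABLE_EXCERPT = {"ATG": "Met", "GCC": "Ala", "TAA": "Stop"}


def codons(seq: str) -> list[str]:
    """Split a coding sequence into consecutive, non-overlapping triplets.

    Assumes reading frame 0; trailing bases that don't fill a triplet are dropped.
    """
    seq = seq.upper()
    usable = len(seq) - len(seq) % 3
    return [seq[i:i + 3] for i in range(0, usable, 3)]


def translate(seq: str) -> list[str]:
    """Look each codon up in the excerpt table; '?' marks codons not in it."""
    return [CODON_TABLE_EXCERPT.get(c, "?") for c in codons(seq)]


fragment = "ATGGCCTAA"  # made-up fragment
print(codons(fragment))     # ['ATG', 'GCC', 'TAA']
print(translate(fragment))  # ['Met', 'Ala', 'Stop']
```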

Most DNA is stored redundantly, in two connected strands. Wherever there is an A on one strand, you'll find a T on the other one. And similarly for C and G:

T G T C A G T

A C A G T C A

(note how the other strand is upside down - this matters!)
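The pairing rule and the "upside down" detail can be sketched in a few lines of Python. `complement` writes the partner strand aligned underneath, exactly like the two rows in the thread. The two strands run in opposite directions, so reading the partner in its own direction means reversing it, which gives the *reverse complement*. The helper names are illustrative.

```python
# Base-pairing rule: A pairs with T, C pairs with G.
COMPLEMENT = {"A": "T", "T": "A", "C": "G", "G": "C"}


def complement(strand: str) -> str:
    """Partner strand written aligned under the original (as in the thread)."""
    return "".join(COMPLEMENT[b] for b in strand)


def reverse_complement(strand: str) -> str:
    """Partner strand read in its own (opposite) direction."""
    return complement(strand)[::-1]


top = "TGTCAGT"
print(complement(top))          # ACAGTCA, the second row in the thread
print(reverse_complement(top))  # ACTGACA
```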

If you take all the DNA of an organism (both strands), you will find equal numbers of A's and T's, as well as equal numbers of C's and G's. This is true by definition.

This is called Chargaff's 1st parity rule.

https://t.co/jD4cMt0PJ0
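Why "by definition": every A on one strand is matched by a T on the other (and every C by a G), so pooling both strands always balances the counts, whatever the sequence. Here is a minimal Python sketch using the example strand from the thread. The helper name is illustrative.

```python
from collections import Counter

# Base-pairing rule: A pairs with T, C pairs with G.
COMPLEMENT = {"A": "T", "T": "A", "C": "G", "G": "C"}


def both_strands_counts(strand: str) -> Counter:
    """Count bases over a strand plus its base-paired partner strand."""
    partner = "".join(COMPLEMENT[b] for b in strand)
    return Counter(strand + partner)


counts = both_strands_counts("TGTCAGT")
# Holds for ANY input strand: each A adds a T to the partner, and so on.
assert counts["A"] == counts["T"] and counts["C"] == counts["G"]
print(dict(counts))
```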

Strangely enough, this rule also holds per strand! So even if you take away the redundancy, the numbers of A's and T's, and of C's and G's, are roughly equal (to within about 1%) *on each strand*. And we don't really know why.

This is called Chargaff's 2nd parity rule.
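Unlike the first rule, nothing forces this per-strand balance, so it has to be measured. Below is a minimal Python sketch with illustrative helper names. It counts bases on a single strand and reports the A/T and C/G skews, (x - y) / (x + y), where 0 means perfectly balanced.

The thread's title refers to an N-gram version of the rule. The sketch assumes that version compares the count of each n-gram on one strand with the count of its reverse complement on the same strand, which is how the generalization is usually stated. A real test needs a long genomic sequence, which is not included here. A short toy strand like the thread's example is far from balanced.

```python
from collections import Counter
from itertools import product

COMPLEMENT = {"A": "T", "T": "A", "C": "G", "G": "C"}


def reverse_complement(s: str) -> str:
    """Partner-strand sequence read in its own direction."""
    return "".join(COMPLEMENT[b] for b in reversed(s))


def skew(x: int, y: int) -> float:
    """Relative imbalance (x - y) / (x + y); 0.0 means perfectly balanced."""
    return 0.0 if x + y == 0 else (x - y) / (x + y)


def single_strand_skews(strand: str) -> tuple[float, float]:
    """A/T and C/G skews measured on ONE strand only (no partner added)."""
    c = Counter(strand.upper())
    return skew(c["A"], c["T"]), skew(c["C"], c["G"])


def kmer_counts(strand: str, k: int) -> Counter:
    """Overlapping k-mer (n-gram) counts along a single strand."""
    s = strand.upper()
    return Counter(s[i:i + k] for i in range(len(s) - k + 1))


def max_kmer_rc_skew(strand: str, k: int) -> float:
    """Largest |skew| between any k-mer and its reverse complement, both
    counted on the same strand (assumed form of the N-gram rule)."""
    counts = kmer_counts(strand, k)
    worst = 0.0
    for kmer in map("".join, product("ACGT", repeat=k)):
        rc = reverse_complement(kmer)
        worst = max(worst, abs(skew(counts[kmer], counts[rc])))
    return worst


# Toy example only: the rule is about long genomic sequences.
print(single_strand_skews("TGTCAGT"))  # A/T skew -0.5, C/G skew about -0.33
print(max_kmer_rc_skew("TGTCAGT", 2))
```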

Took me 5 years to get the best Chartink scanners for the stock market, but you’ll get them in 5 minutes here ⏰

Do share the above tweet 👆

These are very simple yet effective, purely price-action-based scanners, with no fancy indicators or anything else. Hope you like them.

https://t.co/JU0MJIbpRV

52-Week High

One of the classic scanners, where you will find strong stocks to bet on.

https://t.co/V69th0jwBr
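The actual scanner conditions live behind the Chartink link and aren't reproduced here. What follows is only a generic Python sketch of the idea, not Chartink's scan syntax. It assumes daily bars, and it assumes "52-week high" means the latest close is at (or within a chosen fraction of) the highest high of the last 252 trading sessions. The 252-session window and the `tolerance` parameter are both assumptions.

```python
TRADING_DAYS_PER_YEAR = 252  # assumption: ~52 weeks of daily sessions


def at_52_week_high(highs: list[float], closes: list[float],
                    tolerance: float = 0.0) -> bool:
    """True if the latest close is within `tolerance` (a fraction, e.g. 0.01
    for 1%) of the highest daily high over the last 252 sessions, today included.

    Generic illustration only; not Chartink's scan definition.
    """
    if not highs or len(highs) != len(closes):
        raise ValueError("need non-empty highs and closes of equal length")
    window_high = max(highs[-TRADING_DAYS_PER_YEAR:])
    return closes[-1] >= window_high * (1 - tolerance)


highs = [10.0] * 251 + [12.0]
closes = [9.5] * 251 + [11.9]
print(at_52_week_high(highs, closes))                  # False: 11.9 < 12.0
print(at_52_week_high(highs, closes, tolerance=0.01))  # True: within 1%
```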

Hourly Breakout

This scanner gives you short-term breakout bets, such as hourly or 2Hr breakouts.
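Here is a similarly hedged sketch of an hourly breakout check. It assumes "breakout" means the latest bar closes above the high of the previous bar(s) on the same timeframe. The `Bar` type and `lookback` parameter are hypothetical. Feed it hourly bars for an hourly breakout, or 2-hour bars for a 2Hr breakout.

```python
from dataclasses import dataclass


@dataclass
class Bar:
    """One price bar; only the fields this check needs (hypothetical type)."""
    high: float
    close: float


def is_breakout(bars: list[Bar], lookback: int = 1) -> bool:
    """True if the latest bar closes above the highest high of the previous
    `lookback` bars (assumed definition, not Chartink's)."""
    if lookback < 1 or len(bars) < lookback + 1:
        return False
    prior_high = max(b.high for b in bars[-lookback - 1:-1])
    return bars[-1].close > prior_high


hourly = [Bar(high=101.0, close=100.5), Bar(high=102.0, close=101.6)]
print(is_breakout(hourly))  # True: 101.6 closes above the prior high of 101.0
```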

Volume Shocker

A stock whose volume spurts by a massive X times.
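Finally, a sketch of a volume-shock check. It assumes "X times" is measured against the average volume of the preceding sessions. The multiplier `x` and the 20-session `lookback` are illustrative assumptions, not values from the thread.

```python
from statistics import mean


def is_volume_shock(volumes: list[float], x: float = 3.0,
                    lookback: int = 20) -> bool:
    """True if the latest volume is at least `x` times the average volume
    of the preceding `lookback` sessions (both defaults are assumptions)."""
    if len(volumes) < lookback + 1:
        return False
    baseline = mean(volumes[-lookback - 1:-1])
    return baseline > 0 and volumes[-1] >= x * baseline


print(is_volume_shock([100.0] * 20 + [300.0]))  # True: 300 is 3x the average
```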
