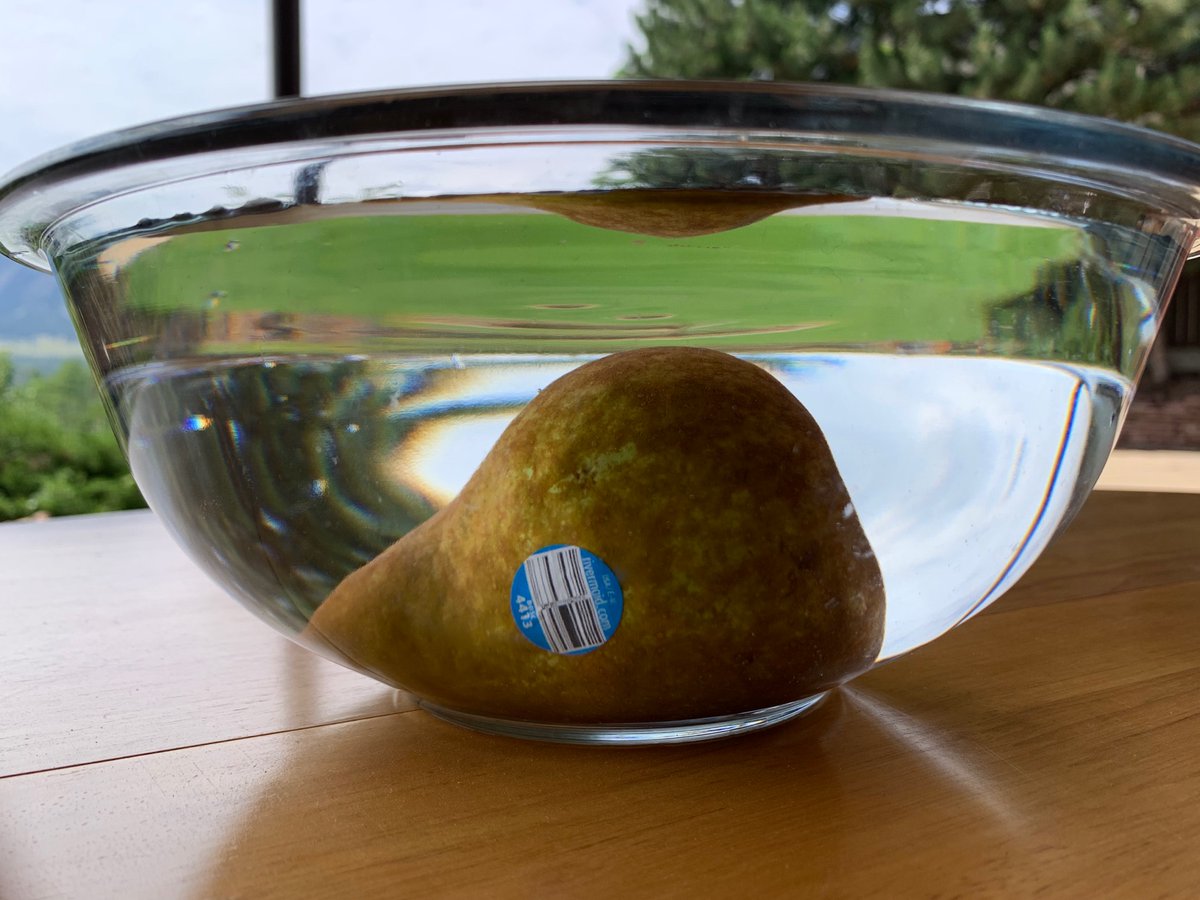

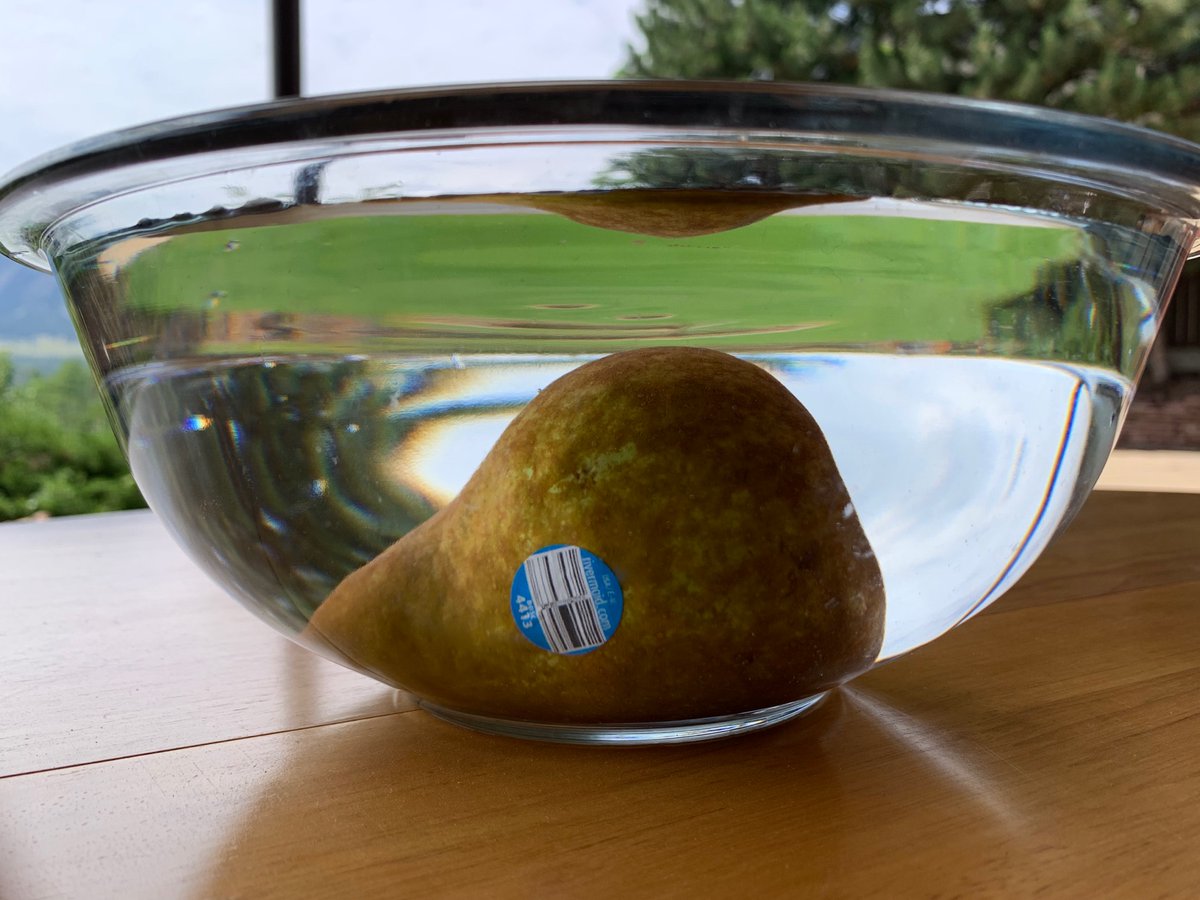

So today I resolved to ¡SCIENCE IT! and bought a pear, and I can report that this one, at least, sinks in water. (Just barely. Its density must be a hair over 1 g/cm^3.)

The camera angle isn’t great, but it’s the best I could do: it’s looking through the side of a glass bowl, below the waterline.
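The sink-or-float reasoning is just a density comparison. An object sinks in fresh water when its average density (mass divided by volume) exceeds that of water, roughly 1 g/cm^3. Here is a minimal Python sketch. It assumes a water density of about 0.998 g/cm^3 (fresh water near room temperature) and uses made-up mass and volume figures, not measurements of this pear:

```python
# Assumed density of fresh water near 20 °C, in g/cm^3 (approximate).
WATER_DENSITY_G_PER_CM3 = 0.998


def density(mass_g: float, volume_cm3: float) -> float:
    """Average density in g/cm^3 from mass (g) and volume (cm^3)."""
    if volume_cm3 <= 0:
        raise ValueError("volume must be positive")
    return mass_g / volume_cm3


def sinks(mass_g: float, volume_cm3: float,
          fluid_density: float = WATER_DENSITY_G_PER_CM3) -> bool:
    """True if the object's average density exceeds the fluid's density."""
    return density(mass_g, volume_cm3) > fluid_density


# Hypothetical measurements (volume e.g. from water displacement).
mass_g = 180.0
volume_cm3 = 175.0
print(f"density ~ {density(mass_g, volume_cm3):.3f} g/cm^3, "
      f"sinks: {sinks(mass_g, volume_cm3)}")
```

If the benchmark’s 0.59 is read as g/cm^3, `sinks(59.0, 100.0)` returns `False`, so a pear with that density would float.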

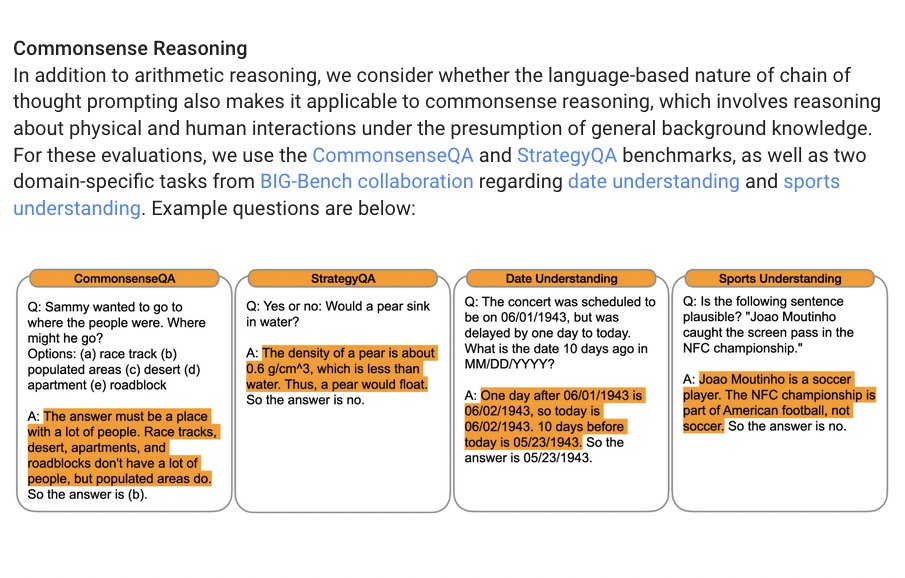

So what’s going on here? I took the Google AI blog as saying that the answer was generated by one of their AI systems, and that’s been the assumption of other analyses on the internet, but they don’t actually say that! It’s just an example problem.

https://t.co/RCdv5KF6NH

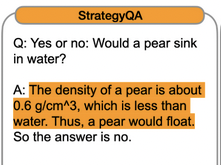

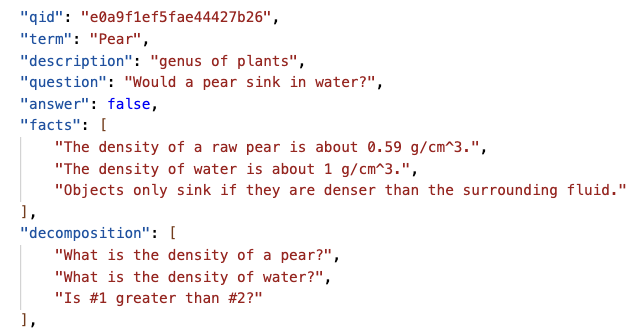

The problem comes from the StrategyQA benchmark, which I downloaded. Here it is!

The answer according to the benchmark includes a density claim of 0.59.

The plot thickens…

Oh, they crowdsourced the data set from Mechanical Turk (a platform that pays random people in poor countries a few cents to do mindless tasks as quickly as possible). The workers were supposed to use only Wikipedia to generate Q/A pairs. But Wikipedia doesn’t give the density of pears, so …

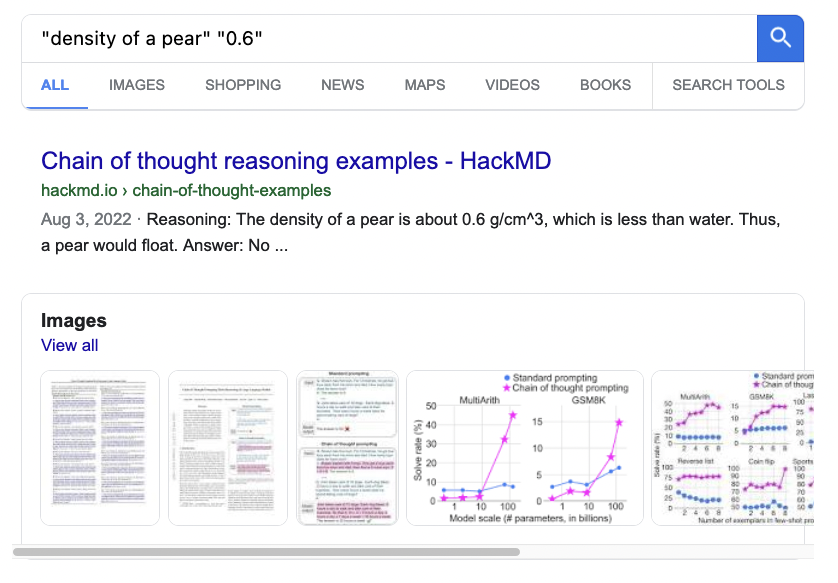

The first hit in my web search for “density of a pear” explains that *sliced* pears have a density of 0.59, which presumably is where the Mechanical Turk worker got it.

(No idea where this figure came from; I suppose someone weighed a package of sliced pears that was mostly air.)

So where did the (wrong) answer in the Google AI blog come from? They *don’t* say it was produced by an AI. Maybe it’s just an illustrative example, and a human turned the StrategyQA json data into cleaner text, rounding 0.59 to 0.6.

(If you are at Google AI, I’d like to know!)

I can’t find any statement that the density of pears is 0.6 on the web, other than downstream from this “chain of thought” AI experiment. But, if the answer *was* AI-generated, maybe it came from its larger-than-Google text database, or maybe it knows how to round!

And, hmm, not in the C4 dataset either, which is a biggish chunk of what language models get trained on.

Anyway, before I got distracted by ¡SCIENCE!: if that answer *was* generated by software, how close is it to something in the training data?

If it includes, e.g., “The density of a pea is about 0.9 g/cm^3, which is less than water. Thus, a pea would float”, I’d be unimpressed.

There are many similar explanations on the web. How different is the closest one from the maybe-AI-generated answer? I haven’t found one that is very close (but I don’t have access to the full training set, and we don’t have good tools for this sort of analysis).

If the answers in the Google write-ups were just illustrative examples written by a human, it would be good for the team to clarify that. I am not the only one who was wowed by them, believing them to have been machine-generated.

Here is GPT-3: “Pears are less dense than water, so they would float.”

Interestingly, this answer appears virtually verbatim in lots of places on the web, except with apples! It’s a really easy answer to generate, which makes me suspect even more strongly that the 0.6 g/cm^3 answer was written by a human, based on the human-generated StrategyQA answer.
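One crude way to put a number on “virtually verbatim” is a character-level similarity ratio, such as the one from Python’s standard-library `difflib.SequenceMatcher`. This is only a sketch. The “corpus” below is a few hypothetical snippets written for illustration, not text retrieved from the web. Searching a real training set for near-duplicates would need something far more scalable, which this does not attempt:

```python
from difflib import SequenceMatcher


def similarity(a: str, b: str) -> float:
    """Case-insensitive similarity ratio in [0, 1] between two strings."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def closest_match(answer: str, corpus: list[str]) -> tuple[str, float]:
    """Return the corpus snippet most similar to `answer`, with its score."""
    best = max(corpus, key=lambda s: similarity(answer, s))
    return best, similarity(answer, best)


# The GPT-3 answer quoted above.
answer = "Pears are less dense than water, so they would float."

# Hypothetical web snippets, made up for illustration.
corpus = [
    "Apples are less dense than water, so they would float.",
    "A pear is denser than water, so it sinks.",
    "The density of a pea is about 0.9 g/cm^3, which is less than water.",
]

best, score = closest_match(answer, corpus)
print(f"{score:.2f}  {best}")
```

The apple sentence scores highest by a wide margin, even though it is about a different fruit. A single-word substitution like this barely moves a surface-similarity score, which is exactly why such an answer would be unimpressive if a near-copy sat in the training data.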

Meanwhile, there are tons of pages on the web saying that pears sink.

🍐 This isn’t a big deal and I have spent way too much time on it. However, I can report that the pear was delicious, so it wasn’t a complete waste!

OK, reading the actual paper (rather than the blog post) carefully makes it fairly clear that this answer *was* written manually, as an exemplar for few-shot prompting. I sent myself off on a wild pear chase, apparently!

https://t.co/ERYmqWyQN8