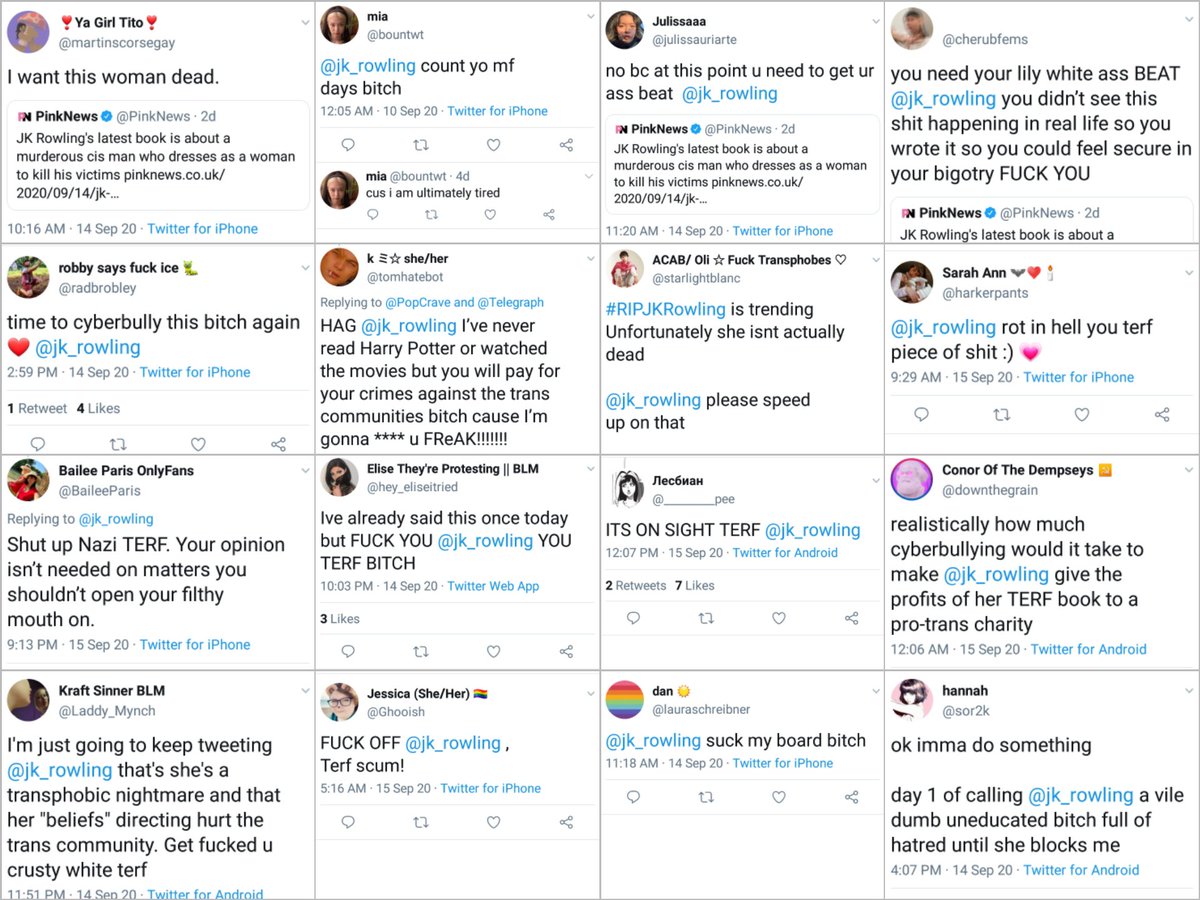

LRT: One of the problems with Twitter moderation - and I'm not suggesting this is an innocent cause that is accidentally enabling abuse, but rather that it's a feature, from their point of view - is that the reporting categories available to us do not match up to the rules.

Because the rules' existence creates the impression that we have protections we don't actually have.

Meanwhile, people who aren't acting in good faith can, will, and DO game the automated aspects of the system to suppress and harm their targets.

A death threat is not supposed to be allowed on here even if it's a joke. That's Twitter's premise, not mine.

A guy going "Haha get raped." is part of the plan. It's normal.

His target replying "FUCK OFF" is not. It's radical.

Things that strike the moderator as "That's just how it is on this bitch of an earth." get a pass.

And then the ultra-modern ones like "Banned from Minecraft in real life."

https://t.co/5xdHZmqLmM

Helicopter rides.

— azteclady (@HerHandsMyHands) October 3, 2020

More from 12 Foot Tall Giant Alexandra Erin

You May Also Like

H was always unseen in S2NL :)

Those who exited at 1500 needed money. They can always come back near 969. Those who exited at 230 also needed money. They can come back near 95.

Those who sold L @ 660 can always come back at 360. Those who sold S last week can be back @ 301

Sir, people here can't stay patient for even 13 days, and you're talking about 2013. Even if you hand them a ready-made portfolio, they'll EXIT within a month 😂

— BhavinKhengarSuratGujarat (@IntradayWithBRK) September 19, 2021

Neuland went from 2700 to 1500 and Sequent from 330 to 230, and what happened? 99% of retailers/investors discussed it on Twitter and then EXITED 😂